The concept of cloud native is not new, but it has generated a lot of hype recently. Essentially, cloud native is the practice of building and running apps so that they take advantage of the distributed computing that cloud delivery offers. Cloud native applications are designed to benefit from the elasticity, scale, resiliency, and flexibility the cloud provides.

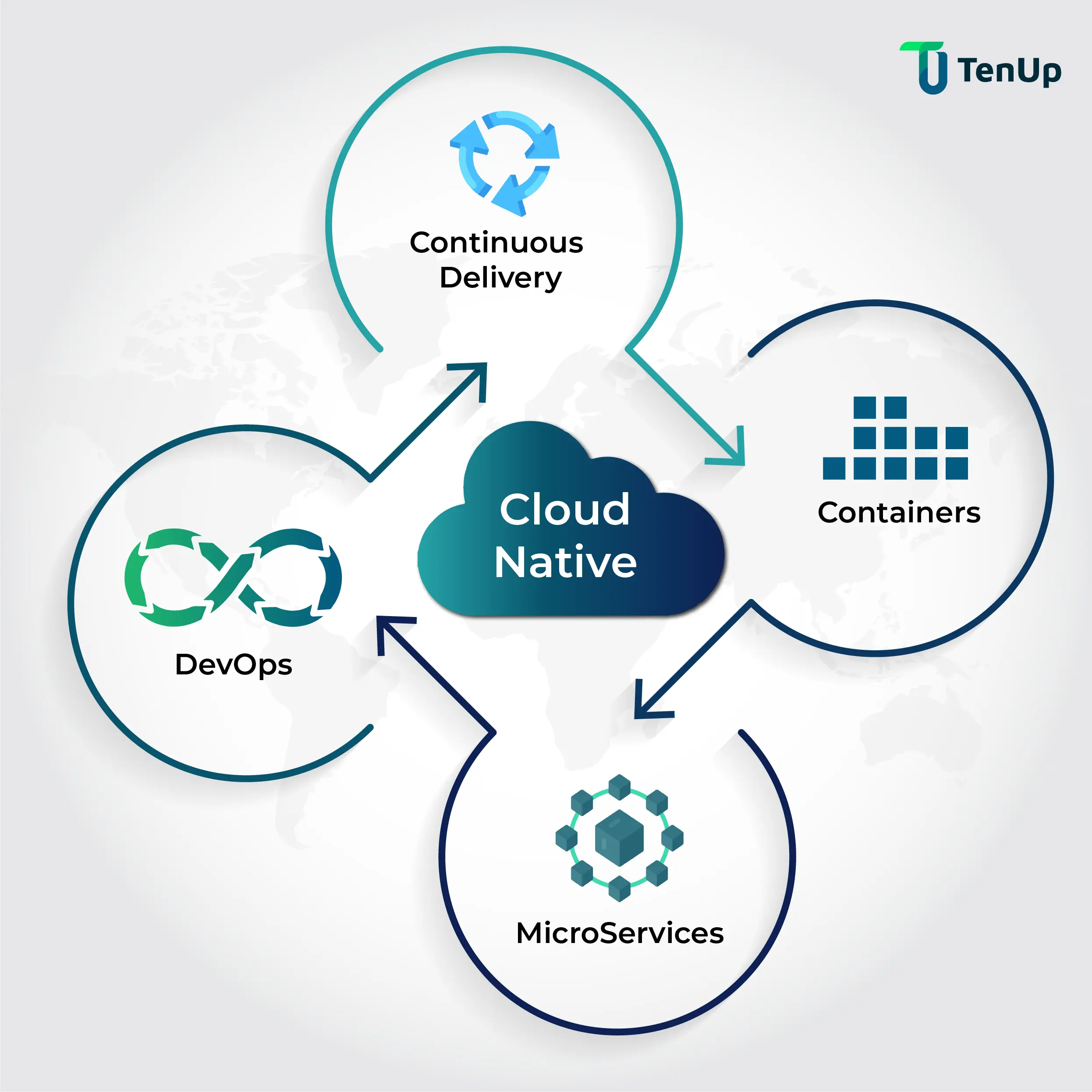

The Cloud Native Computing Foundation (CNCF) defines it as follows: cloud native technologies empower organisations to build and run scalable applications in public, private, and hybrid clouds. The modern ecosystem of complex applications demands continuous innovation combined with unprecedented responsiveness. As a result, business systems need to be more strategic and highly flexible. Cloud native helps businesses be more agile and responsive to rising customer expectations. It relies on techniques such as containers, service meshes, microservices, immutable infrastructure, and declarative APIs. These techniques help build loosely coupled systems that are resilient, manageable and observable. As a result, engineers can make high-impact changes frequently with minimal effort.

Cloud native services use technologies such as serverless functions, Kubernetes, Docker, APIs and service meshes to deliver on this promise of flexibility, faster releases and reduced costs, among other benefits. Some of the leading cloud providers offer cloud tooling and services that help developers reduce operational work and build applications faster. In short, cloud native gives agile teams a comprehensive, standards-based platform to build, deploy and manage cloud native apps such as microservices and serverless functions.

Cloud native apps are built as independent services and packaged as lightweight, self-contained containers. They are portable and highly scalable (both in and out). Because everything the application needs is packaged inside the container, for example a Docker container, the application and its dependencies are isolated from the underlying infrastructure. The obvious benefit is that the containerized app can be deployed in any environment that has the container runtime. An important point to note here is that a container orchestrator such as Kubernetes manages the lifecycle of the containers. Cloud native apps are delivered through a DevOps pipeline that uses continuous integration and continuous delivery toolchains.

The Cloud Native Computing Foundation (CNCF), a Linux Foundation project, was launched in 2015 to help promote container technology and its adoption. It was founded alongside Kubernetes, an open source container cluster manager that Google contributed to the Linux Foundation as a seed technology. Since then, the CNCF has been the vendor-neutral home to many of the fastest growing open source projects, such as Kubernetes, Prometheus, and Envoy.

CNCF projects such as Kubernetes have seen rapid adoption and gained wide community support, making them some of the highest velocity projects in open source history. The CNCF community brings together many of the world’s leading developers, users and vendors, and it hosts some of the largest open source developer conferences.

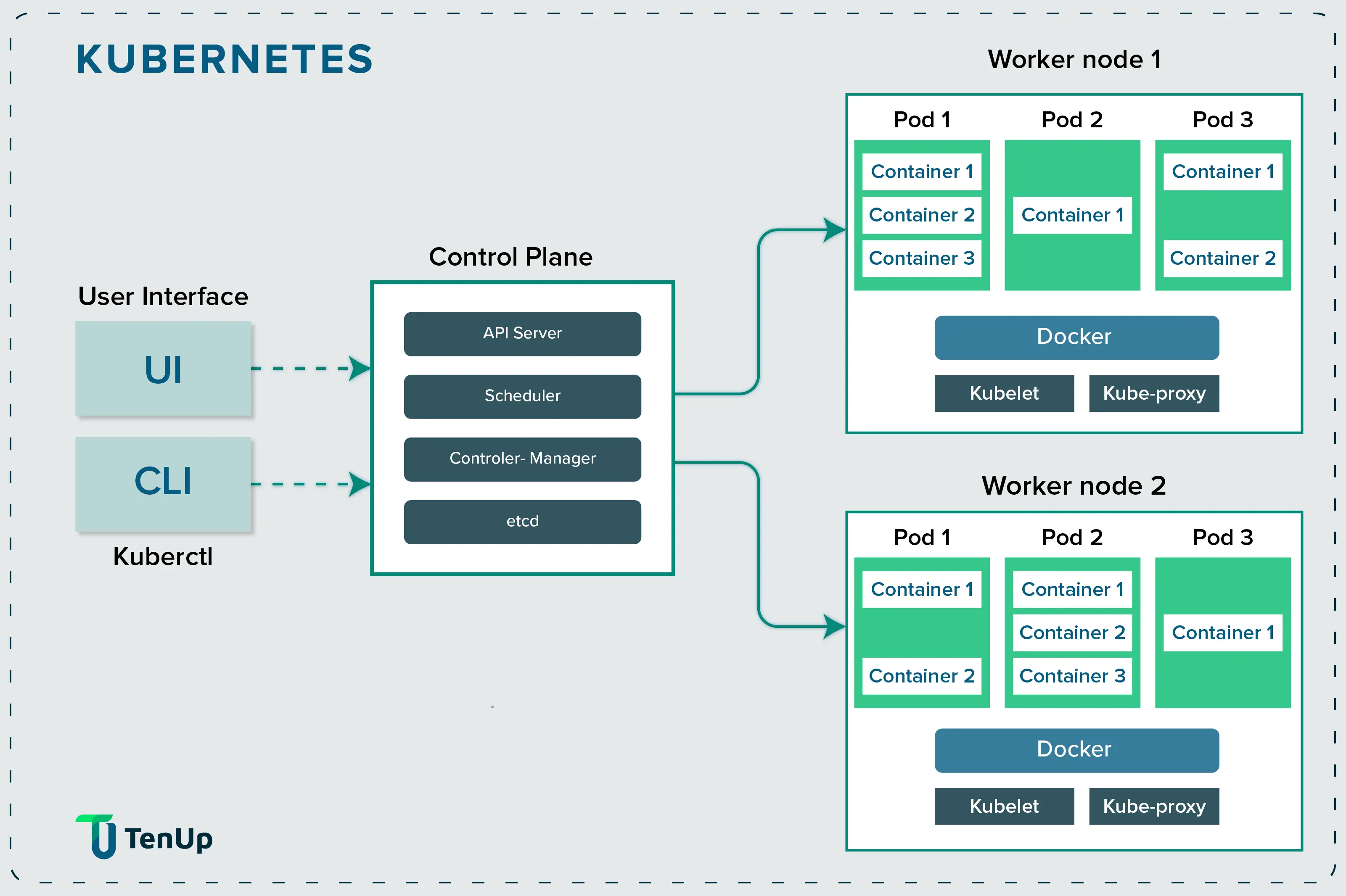

When you mention cloud native, it is difficult not to think of Kubernetes. This open source platform automates Linux container operations, eliminating much of the manual activity involved in deploying and scaling containerized applications. You can cluster together groups of hosts running Linux containers, and Kubernetes helps you manage these clusters.

Containerization is a superpower when it comes to cloud native because it lets people run different kinds of applications in diverse environments. For this to work at scale, teams need an orchestration solution that keeps track of all these containers, schedules them and manages their lifecycle. As a result, Kubernetes has become increasingly popular for its scheduling and orchestration capabilities.

The scheduler is a core component of Kubernetes. It watches the cluster's object store (through the API server) for Pods that have not yet been assigned to a Node. Once a user creates a Pod, the scheduler picks a suitable Node and assigns the Pod to it. The scheduler runs on the control plane and balances resource utilisation across Nodes.
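To make the idea concrete, here is a minimal sketch of the filter-then-score approach a scheduler can take. It is a toy model, not the Kubernetes scheduler itself: the `Node` and `Pod` classes, the CPU-only resource model, and the "most free CPU wins" scoring rule are all simplifying assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores (hypothetical, CPU-only resource model)
    cpu_used: int = 0

    def free_cpu(self) -> int:
        return self.cpu_capacity - self.cpu_used


@dataclass
class Pod:
    name: str
    cpu_request: int  # millicores
    node_name: Optional[str] = None  # None means "not yet assigned"


def schedule(pods: List[Pod], nodes: List[Node]) -> None:
    """Assign each unassigned Pod to the feasible Node with the most free CPU."""
    for pod in pods:
        if pod.node_name is not None:
            continue  # already bound to a Node
        # Filter step: keep only Nodes that can fit the request.
        feasible = [n for n in nodes if n.free_cpu() >= pod.cpu_request]
        if not feasible:
            continue  # nothing fits, so the Pod stays pending
        # Score step: prefer the Node with the most free CPU to spread load.
        best = max(feasible, key=lambda n: n.free_cpu())
        pod.node_name = best.name
        best.cpu_used += pod.cpu_request


nodes = [Node("node-a", 2000), Node("node-b", 2000)]
pods = [Pod("web", 500), Pod("api", 800), Pod("batch", 1500), Pod("cache", 1500)]
schedule(pods, nodes)
for pod in pods:
    print(pod.name, "->", pod.node_name or "Pending")
```

In this run, `web` and `batch` land on `node-a`, `api` lands on `node-b`, and `cache` stays pending because no Node has 1500 millicores left. The real scheduler considers many more signals (memory, affinity rules, taints and so on), so treat this only as an outline of the idea.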

Container orchestration becomes a necessity when managing containers and microservices apps at scale. Kubernetes orchestration helps users build applications that span multiple containers, schedule them across a cluster, scale them and maintain their health over time.
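One way to picture how an orchestrator scales an app and keeps it healthy is as a loop that compares the desired state with what is actually running and works out the difference. The sketch below is an illustrative assumption, not Kubernetes code: the `reconcile` function, the health flags, and the action strings are all hypothetical.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, containers: Dict[str, bool]) -> List[str]:
    """Return the actions that move the observed state toward the desired state.

    `containers` maps a container name to True (healthy) or False (unhealthy).
    """
    actions: List[str] = []
    healthy = [name for name, ok in containers.items() if ok]

    # Unhealthy containers are removed so that they can be replaced.
    for name, ok in containers.items():
        if not ok:
            actions.append(f"remove {name}")

    diff = desired_replicas - len(healthy)
    if diff > 0:
        # Too few healthy replicas: start new ones.
        actions.extend(["start new replica"] * diff)
    elif diff < 0:
        # Too many healthy replicas: stop the surplus.
        for name in healthy[:-diff]:
            actions.append(f"stop {name}")
    return actions


# Desired: 3 replicas. Observed: one healthy container and one unhealthy one.
print(reconcile(3, {"web-1": True, "web-2": False}))
```

Running the same function repeatedly against fresh observations is what lets the system recover from failures without manual intervention: once the observed state matches the desired state, the list of actions is empty.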

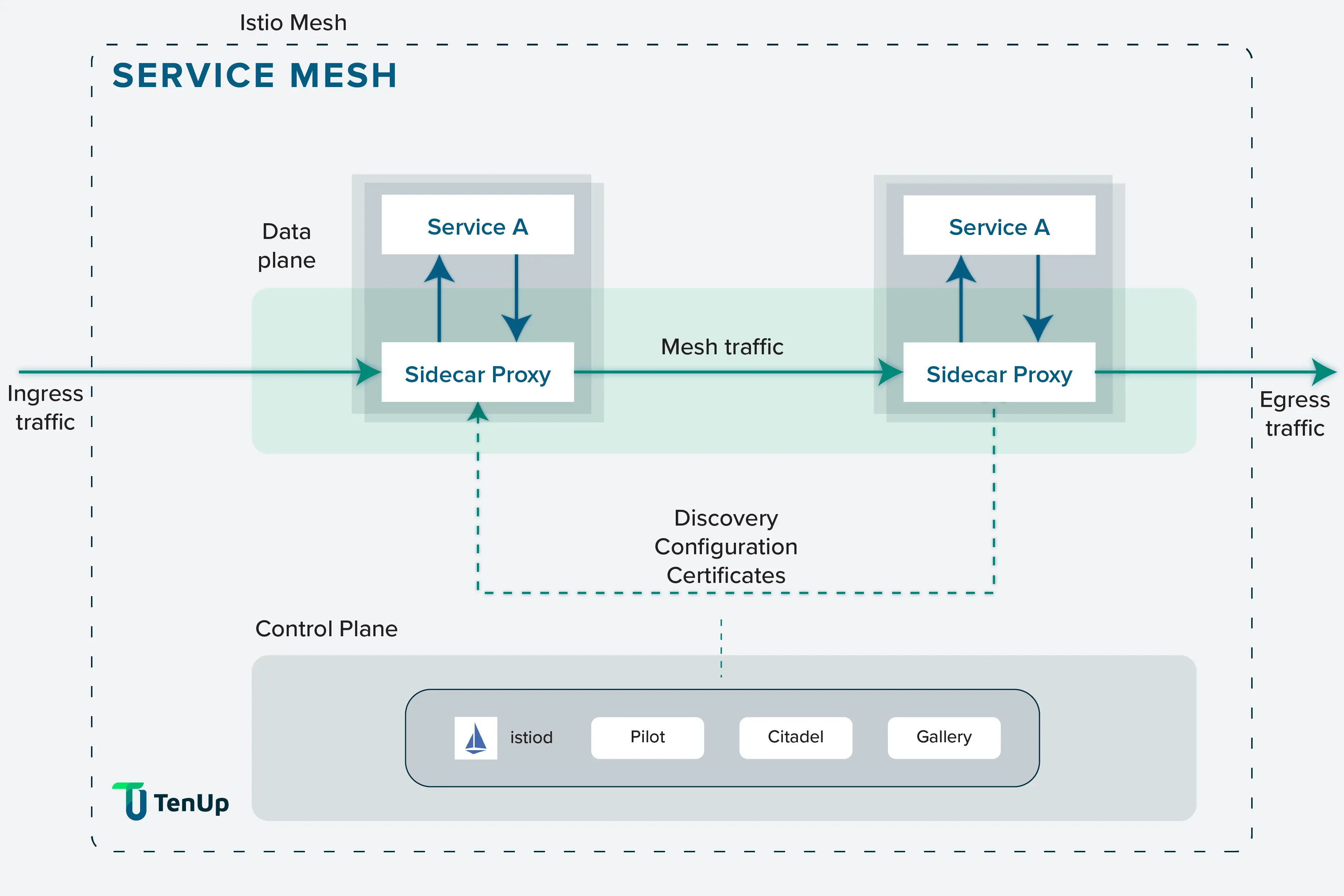

Microservices architecture simplifies the creation of individual services, but it brings new challenges such as security, network resiliency and communication policy. As a microservices architecture grows, interservice communication becomes challenging to manage at scale. This is where service meshes like Linkerd or Istio come in. A service mesh provides a consistent set of tools to address the common challenges of running a service, such as monitoring, networking, and security.

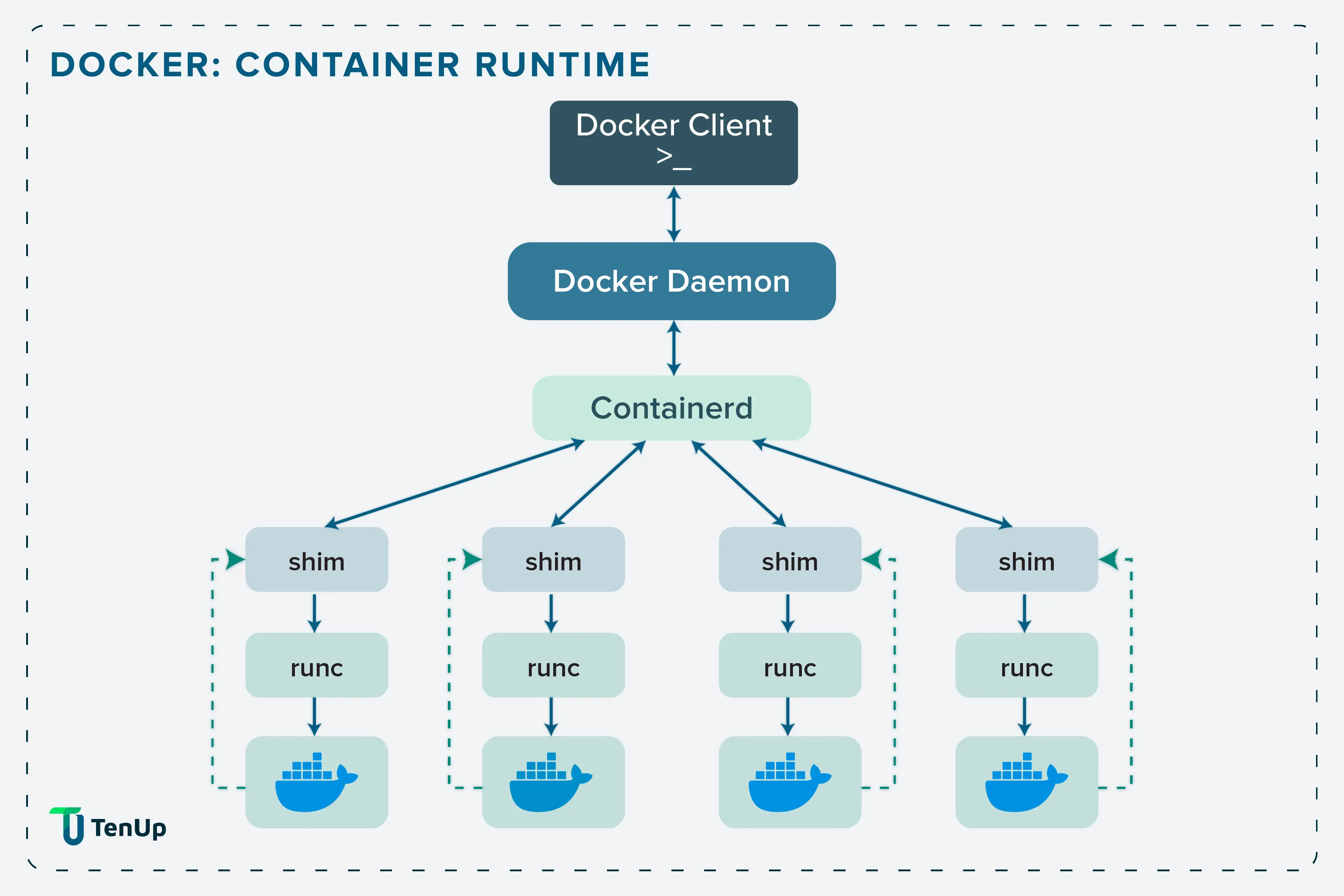

Docker is a platform for packaging and running containers. It helps build standard containers that include everything they need to function in isolation, such as code, dependencies and libraries. The chief advantage of using Docker is portability: it allows you to run containers wherever you want, regardless of the host. Also, because each container runs in its own namespaces, it provides a degree of isolation that helps with security. Docker and Kubernetes are often used in tandem because they accomplish different things.

Because modern cloud native software development relies on microservices, a single user request within an app is often handled by several individual services working together. Developers need to keep track of all these connections and isolate the failing service when something goes wrong. This is where distributed tracing comes in. Distributed tracing is often run as part of the service mesh. Jaeger is an open source tool that helps users trace transactions between distributed services. It is used to monitor and troubleshoot complex microservices environments.
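The core idea behind distributed tracing can be sketched in a few lines: every unit of work records a span, and all spans for one request share a trace ID that is passed from service to service. The example below is a simplified, in-process illustration and does not use the Jaeger client API; the `Span` class, the `start_span` helper, the `collected` list, and the three service functions are hypothetical names chosen for this sketch.

```python
import uuid
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Span:
    trace_id: str             # shared by every span in one request
    span_id: str              # unique to this unit of work
    parent_id: Optional[str]  # links a span to its caller
    service: str


collected: List[Span] = []  # stand-in for a tracing backend


def start_span(service: str, parent: Optional[Span] = None) -> Span:
    """Create a span, inheriting the trace ID from the caller if there is one."""
    span = Span(
        trace_id=parent.trace_id if parent else uuid.uuid4().hex,
        span_id=uuid.uuid4().hex[:16],
        parent_id=parent.span_id if parent else None,
        service=service,
    )
    collected.append(span)
    return span


def payment_service(parent: Span) -> None:
    start_span("payment", parent)


def inventory_service(parent: Span) -> None:
    start_span("inventory", parent)


def checkout_service() -> Span:
    root = start_span("checkout")  # entry point: a new trace begins here
    payment_service(root)          # context is passed on to downstream calls
    inventory_service(root)
    return root


root = checkout_service()
for span in collected:
    print(span.service, span.trace_id, "parent:", span.parent_id)
```

In a real deployment the trace context travels in request headers between processes, and a tracing backend reassembles the spans into a timeline. Grouping spans by `trace_id` is what makes it possible to see which service in the chain was slow or failed.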

Fluentd is an open source data collector for building a unified logging layer. Once Fluentd is installed, it runs in the background to gather, parse, transform, analyse and store multiple types of data.

Writing and managing Kubernetes YAML manifests for all the required Kubernetes objects is time consuming and tedious. Tools like Helm simplify this process by bundling the manifests into a single package, called a chart, that can be installed on the cluster. In short, Helm is a package manager for Kubernetes. It improves productivity, reduces the complexity of deployments and eases the adoption of cloud native applications.
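The packaging idea can be illustrated with a small sketch: a manifest is written once as a template, and per-environment values are filled in at install time. Helm itself uses Go templates and a `values.yaml` file; the example below uses Python's `string.Template` purely as a stand-in, and the app name, image URL, and `render` helper are hypothetical.

```python
from string import Template

# A single manifest template with placeholders instead of hard-coded values.
MANIFEST_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
""")

# Default values, analogous in spirit to a chart's default values (hypothetical).
DEFAULT_VALUES = {
    "name": "demo-app",
    "replicas": 1,
    "image": "example.com/demo-app:1.0.0",
}


def render(overrides=None) -> str:
    """Merge overrides into the defaults and render the manifest."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return MANIFEST_TEMPLATE.substitute(values)


# The same template serves production by overriding only the replica count.
print(render({"replicas": 3}))
```

Keeping the template and the values separate is the design choice that matters here: the same package can be installed in development, staging and production with only the values changing.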

etcd is an open source, distributed, consistent key-value store for shared configuration, service discovery, and scheduler coordination across a cluster of machines. It helps facilitate safer automatic updates, coordinates work being scheduled to hosts, and assists in the setup of overlay networking for containers.
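The sketch below shows, in a single process, two behaviours that make a store like this useful for service discovery and coordination: watching a key prefix for changes, and updating a key only if it still holds an expected value. It is a toy model and not the etcd API; it has no replication or consensus, and the `TinyKV` class, its method names, and the key paths are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple


class TinyKV:
    """In-memory key-value store with prefix watches and compare-and-swap."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[str, int]] = {}  # key -> (value, revision)
        self._revision = 0
        self._watchers: List[Tuple[str, Callable[[str, str], None]]] = []

    def put(self, key: str, value: str) -> int:
        """Store a value, bump the global revision, and notify watchers."""
        self._revision += 1
        self._data[key] = (value, self._revision)
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(key, value)
        return self._revision

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        return entry[0] if entry else None

    def watch(self, prefix: str, callback: Callable[[str, str], None]) -> None:
        """Call `callback(key, value)` on every later put under `prefix`."""
        self._watchers.append((prefix, callback))

    def compare_and_swap(self, key: str, expected: Optional[str], new: str) -> bool:
        """Write `new` only if the key currently holds `expected`."""
        if self.get(key) != expected:
            return False
        self.put(key, new)
        return True


store = TinyKV()

# Service discovery: a client is notified when a service registers itself.
store.watch("/services/", lambda k, v: print("discovered", k, "at", v))
store.put("/services/web", "10.0.0.5:8080")

# Coordination: only the first worker to claim the job succeeds.
print(store.compare_and_swap("/jobs/1/owner", None, "worker-a"))
print(store.compare_and_swap("/jobs/1/owner", None, "worker-b"))
```

The first claim succeeds and the second fails because the key is no longer empty. That "only one writer wins" guarantee is what lets several schedulers or workers coordinate safely through a shared store.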

Cloud native applications also need a container registry. This is a repository, often private, where teams manage images, perform vulnerability analysis and set up access controls. You can connect container tools and orchestration platforms such as Docker and Kubernetes directly to a container registry. Because the registry acts as the channel for sharing container images between systems, it saves valuable engineering time when building cloud native applications.

Cloud native apps are quickly gaining traction among forward-looking businesses that want to deliver on the promise of speed, efficiency and reliability. Companies like Netflix, Amazon and Uber have already made great strides using cloud native strategies. Cloud native app development is one of the most modern, popular and DevOps-friendly approaches to building, maintaining and enhancing apps in today’s fast-paced, software-led market.

If you are interested in learning more about cloud native app development, reach out to our solutions experts.