What is Data Labeling? Exploring Types and Techniques for AI Development

The AI development process involves training AI models on preprocessed datasets to ensure the accuracy of their outcomes. Data Labeling (annotation) is an important part of data preprocessing that involves applying meaningful and informative labels to unstructured data. In today’s AI-driven world, adopting an AI-first data labeling approach ensures faster, more accurate, and scalable labeling processes, setting the foundation for robust AI models. Labels guide models to understand the context, categories, and patterns in data as they learn to make predictions or complete designated tasks.

Let’s consider an example of how data labeling is utilized in the healthcare industry. To automate the diagnosis of diseases from medical images, an AI-powered application for medical imaging analysis must be developed. It requires the AI model to be trained on datasets of medical images like X-rays, CT scans, and MRI scans. These images must be correctly labeled with the names of the diseases they help diagnose. For instance, a CT scan showing a brain tumor must be labeled accordingly. Once the model is trained on this labeled data, it can analyze new, unlabeled medical images and help diagnose diseases.

Key Types of Data Labeling for AI: Image, Text, Audio, and Video

The adoption of AI solutions with multimodal capabilities is increasing across industries to meet diverse use cases. Different types of AI models, such as large language models (LLMs) and vision models, require different types of data labeling. Let’s discuss in detail how data labeling helps with different data types like text, image, audio, and video.

1: Image and Video Labeling

Vision AI or Computer Vision data labeling helps AI solutions gain vision data interpretation capabilities (as discussed in the example above). Image Labeling assigns appropriate labels to images, whereas Video Labeling adds labels to the individual frames that make up the video. These labels either describe specific details of an image or mark the location of target objects across video frames. You can annotate images and videos using the following methods or techniques:

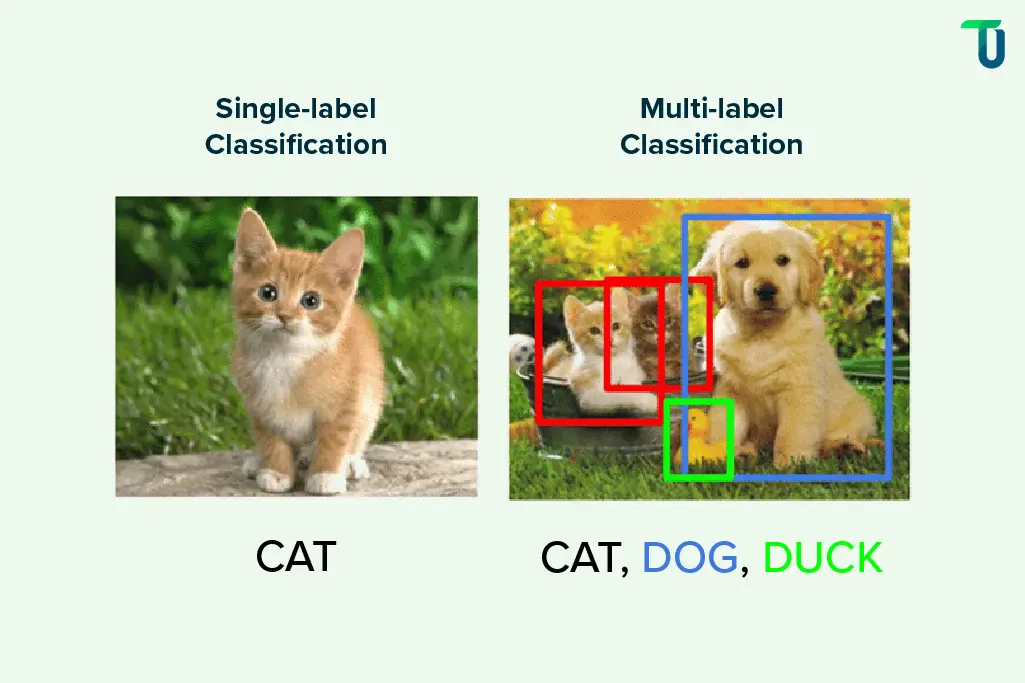

Tagging or Labeling: Assign predefined classes or categories to images for image classification tasks. Use single-label or multi-label classification depending on the AI solution’s intended outcomes.

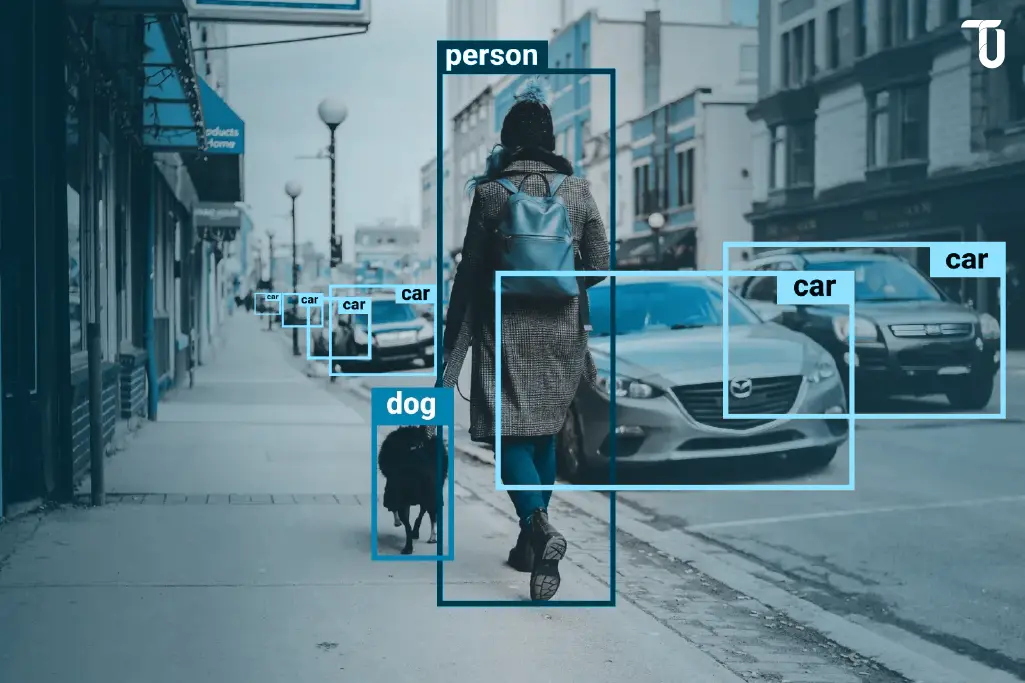

Bounding Boxes: Draw rectangular boxes around objects to show their location in the image. Ideal for Vision AI tasks like Object Detection (for images) and Object Tracking (for videos).

Polygon Annotation: Place a series of points around the object in the image to create a polygon that accurately captures its shape and boundary. Useful for Vision AI tasks like Instance Segmentation and Semantic Segmentation.

Keypoint Annotation (Keypoint Skeleton): Label specific key points on an object in the image to determine position, movement, or spatial relationships. Helps with Computer Vision tasks like Pose Estimation (for images) and Action Recognition (for videos).

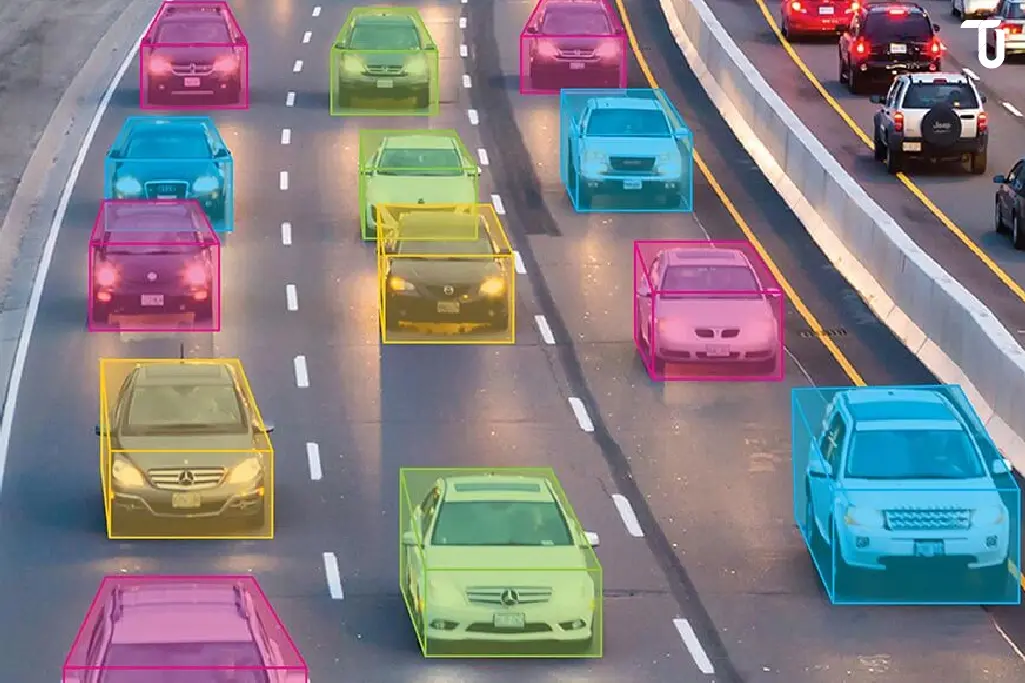

3D Annotation: Create 3D Cuboids around an object in the image to outline its location and spatial extent in 3D space. Ideal for vision tasks like Object Detection and Object Tracking, especially in applications like autonomous vehicles, traffic management, etc.

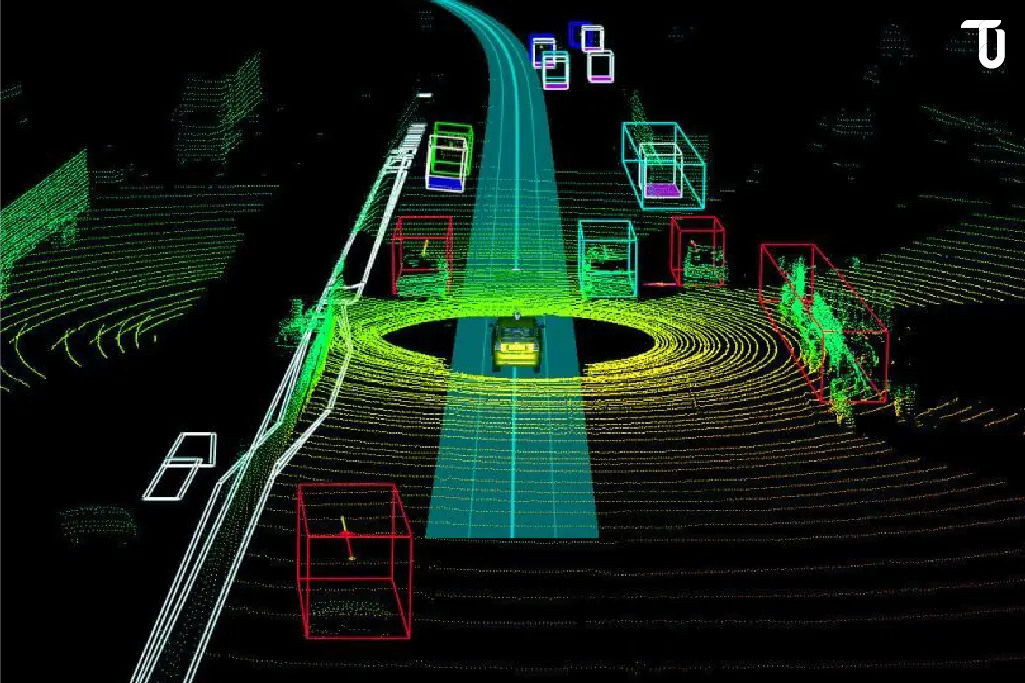

LiDAR Annotation: Label the 3D point cloud data that LiDAR (Light Detection and Ranging) sensors collect by measuring distances to build 3D models of the environment. Helps train AI models for high-precision applications like autonomous vehicles to facilitate safe navigation.
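To make the image annotation types above more concrete, here is a minimal sketch of how bounding-box and polygon labels might be stored and compared. The names `BoundingBox`, `iou`, and `polygon_area` are illustrative assumptions, not the schema of any particular labeling tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over Union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    union = a.area + b.area - inter
    return inter / union if union > 0 else 0.0


def polygon_area(points: list[tuple[float, float]]) -> float:
    """Area of a simple polygon annotation, computed with the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# Two annotators draw slightly different boxes around the same object.
box_a = BoundingBox("tumor", 10, 10, 50, 50)
box_b = BoundingBox("tumor", 30, 30, 70, 70)
overlap = iou(box_a, box_b)

# A polygon traces the object outline point by point.
outline = [(0, 0), (4, 0), (4, 3), (0, 3)]
outline_area = polygon_area(outline)
```

A low IoU between two annotators’ boxes for the same object is one possible signal that the labeling guidelines need tightening or that the item should be reviewed.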

2: Text Labeling

Natural Language Processing (NLP) is the power behind language models that support conversational AI solutions like Chatbots, Question Answering Systems, and Virtual Assistants. Language models are trained on accurately labeled text data. Here are some text labeling methods:

Text Classification: Add labels to text blocks or documents according to the predefined classes, categories, trends, or any other parameter. Helps improve comprehension, enhances analytics, and saves time in reading documents & messages to categorize them. For example, businesses can easily classify spam and non-spam messages using NLP capabilities.

Sentiment Annotation: Apply labels like positive, negative, and neutral to text to show the sentiment behind the words. Used for Sentiment Analysis, it facilitates identifying consumer sentiment and feedback on products and services early and gives opportunities to improve business offerings.

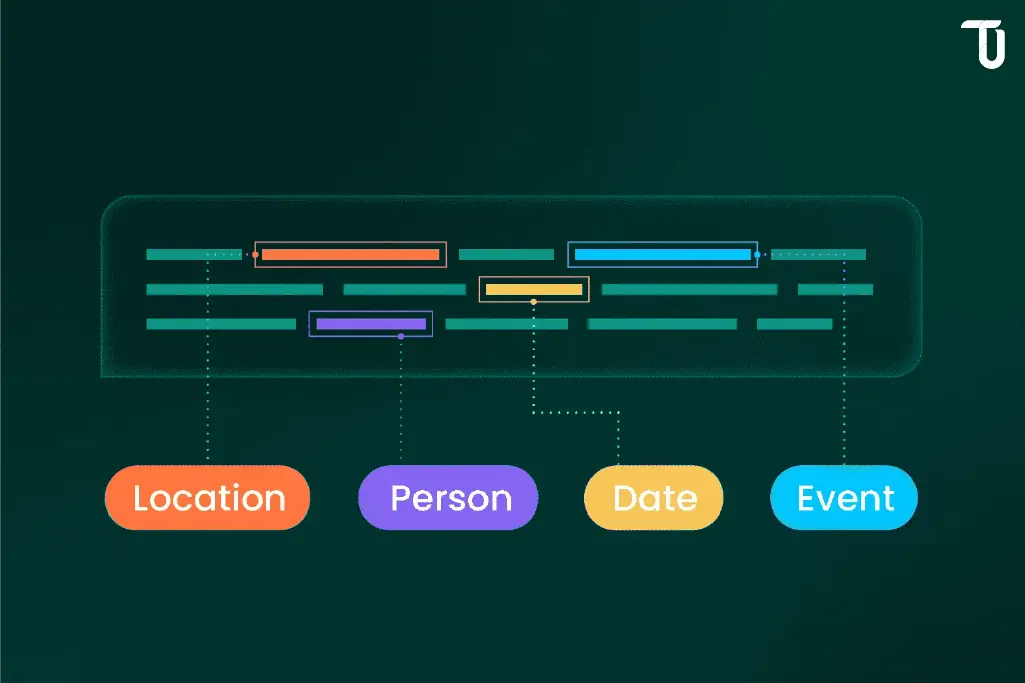

Named Entity Recognition (NER): Identify and classify entities like locations, organizations, persons, dates, products, etc. Helps language models understand the context and ensures important information is not missed during summarization tasks.

Named Entity Linking (NEL): After NER identifies and classifies entities like people, products, locations, etc., they must be linked with data repositories offering more information about them. For example, if the language model reads the sentence: “Paris is the capital of France”, it must understand that Paris is a city and not a person named ‘Paris Hilton’. NEL ensures that the entity ‘Paris’ tagged as a location is linked with a URL giving information about Paris as a place.

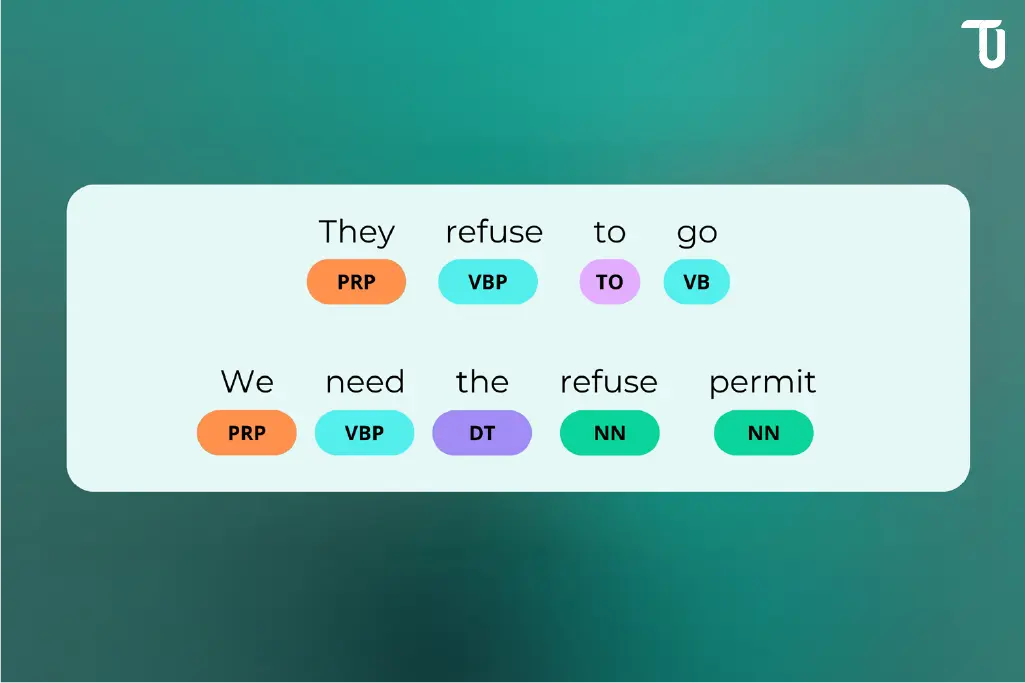

Part-of-Speech (POS) Tagging: Assign grammar-based tags like nouns, verbs, adjectives, prepositions, etc., to all the words in a sentence. Helps language models understand the role of words, the relationship between words, what they mean, and how to apply them in different scenarios.

Topic Modelling: Label text documents with themes, subjects, or topics they discuss to help organize, categorize, and summarize large datasets. Improves customer support by highlighting urgent requests and analyzing customer feedback for specific products and services.

Keyphrase Tagging: Add tags to keywords or keyphrases in text blocks to help with meaningful and contextual summarization of long content and extraction of key concepts discussed in a document.

Intent Classification: Assign labels to highlight the intent of users’ queries and responses to facilitate meaningful conversations with AI solutions like chatbots and virtual assistants.
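As an illustration of the text labeling methods above, the sketch below stores NER labels as character-offset spans and converts them to per-token BIO tags, a common training format. The `EntitySpan` class and `to_bio_tags` helper are hypothetical names, and the whitespace tokenizer is a simplifying assumption that ignores punctuation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    """Character-offset entity label (hypothetical schema)."""
    start: int  # inclusive
    end: int    # exclusive
    label: str


def to_bio_tags(text: str, spans: list[EntitySpan]) -> list[tuple[str, str]]:
    """Split on whitespace and tag each token as B-<label>, I-<label>, or O."""
    tagged = []
    cursor = 0
    for token in text.split():
        # Locate the token in the original text to recover its offsets.
        start = text.index(token, cursor)
        end = start + len(token)
        cursor = end
        tag = "O"
        for span in spans:
            if start >= span.start and end <= span.end:
                prefix = "B" if start == span.start else "I"
                tag = f"{prefix}-{span.label}"
                break
        tagged.append((token, tag))
    return tagged


sentence = "Paris is the capital of France"
spans = [EntitySpan(0, 5, "LOC"), EntitySpan(24, 30, "LOC")]
tags = to_bio_tags(sentence, spans)
```

Here both "Paris" and "France" receive the tag `B-LOC` and every other token receives `O`. An entity linking step could then attach a knowledge-base identifier to each span.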

3: Audio Labeling

Speech recognition tasks enable AI models to decipher the information contained in audio recordings and the audio in video recordings. While humans can effortlessly understand the semantic structure of audio information, it needs to be converted into text for AI/ML to interpret. So, labels and transcripts must be assigned to audio recordings in the required format to train AI models.

Audio Classification: Categorize the audio as speech or non-speech. The speaker’s voice falls under the speech category, while background noise, music, and silence are classified as non-speech. You could add further categories such as the number of speakers, the language spoken, or the intent.

Audio-transcription Annotation: Convert audio of spoken words into text (transcript). Use NLP or Text labeling as mentioned above to add further information to the textual transcript of speech.

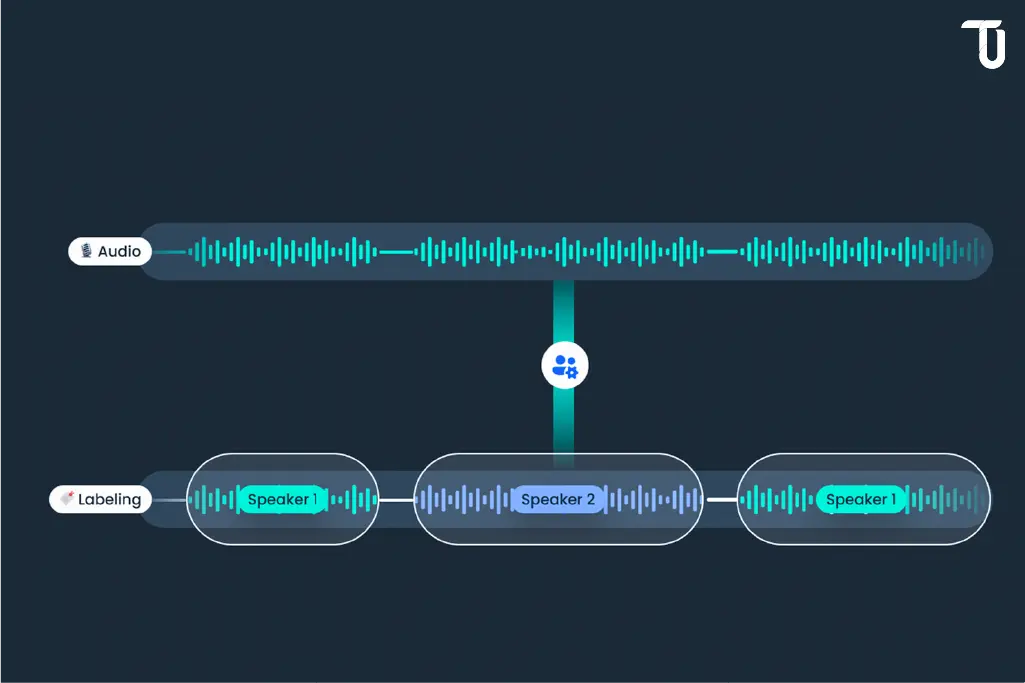

Speaker Diarization (Speaker Identification): Break down the audio into segments and label each segment with the correct speaker’s identity. Helps when a recording involves multiple speakers and the AI model must understand who said what.

Audio Emotion Annotation: Taking clues from speech rate, voice intensity, pitch levels, etc., identify and tag the speaker’s emotions like surprise, happiness, fear, sadness, etc.
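The audio labels described above can be pictured as time-stamped segments. The following is a minimal sketch under that assumption. The `Segment` fields and the helpers `talk_time` and `find_overlaps` are illustrative, not a standard annotation format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One labeled stretch of audio (hypothetical schema)."""
    start: float          # seconds from the beginning of the recording
    end: float
    speaker: str          # diarization label, e.g. "spk_0"
    transcript: str = ""  # transcription annotation
    emotion: str = ""     # optional emotion tag


def talk_time(segments: list[Segment]) -> dict[str, float]:
    """Total speaking time per speaker, in seconds."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


def find_overlaps(segments: list[Segment]) -> list[tuple[Segment, Segment]]:
    """Pairs of segments where different speakers talk at the same time."""
    ordered = sorted(segments, key=lambda s: s.start)
    overlaps = []
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second.start >= first.end:
                break  # later segments start even later, so none can overlap
            if second.speaker != first.speaker:
                overlaps.append((first, second))
    return overlaps


segments = [
    Segment(0.0, 4.0, "spk_0", "Hi, how can I help?"),
    Segment(3.5, 9.0, "spk_1", "My order never arrived.", "sadness"),
    Segment(9.0, 12.0, "spk_0", "Let me check that for you."),
]
totals = talk_time(segments)
overlapping = find_overlaps(segments)
```

Overlapping speech is often harder to label, so flagging such pairs for a second look by a human annotator is one reasonable use of this structure.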

Why the Right Data Labeling Tools Are Crucial for AI Success

The data labeling process in AI development is usually tedious and time-consuming. It requires trained and experienced labelers (annotators), and sometimes even subject matter experts, to create high-quality data to train the AI model. Labeling accuracy matters because poor-quality training data degrades the AI model’s performance. Automated tools speed up the process and, combined with human-in-the-loop review, help improve the accuracy of data labels. By embracing an AI-first data labeling strategy, organizations can leverage advanced algorithms to handle repetitive tasks, reduce human error, and scale labeling efforts efficiently. With appropriate tools handling the repetitive tasks, annotators can focus on more complex labeling issues that cannot be solved without human intervention.

By utilizing a mix of data labeling tools and human annotators, you can resolve the most common challenges in manual data labeling. These include:

Scalability: Labeling large datasets using only manual labeling techniques becomes impractical considering the time required and the cost of paying human labelers, making tool utilization essential.

Handling Noisy Data: Tools help clear the noise in unorganized data to facilitate better data labeling results. Manual data preprocessing can also solve this issue, but it increases the time and effort required.

Budget Constraints: Even with tools, expert human annotators are required to resolve complex issues. Also, if the entire data labeling job is completed manually, the project costs increase, making it difficult to stay within the budget.
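One common way to combine tools and human annotators is to let a model pre-label the data and send only low-confidence items to people. The sketch below illustrates that idea. The `PreLabel` record, the `route` function, and the 0.9 threshold are all assumptions chosen for illustration, and a real threshold would need tuning against audited samples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreLabel:
    """A label proposed by a model (hypothetical schema)."""
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route(pre_labels: list[PreLabel], threshold: float = 0.9):
    """Split model pre-labels into auto-accepted items and items for human review."""
    auto_accepted, needs_review = [], []
    for pre_label in pre_labels:
        if pre_label.confidence >= threshold:
            auto_accepted.append(pre_label)
        else:
            needs_review.append(pre_label)
    return auto_accepted, needs_review


pre_labels = [
    PreLabel("img_001", "tumor", 0.97),
    PreLabel("img_002", "no_finding", 0.62),
    PreLabel("img_003", "tumor", 0.91),
    PreLabel("img_004", "no_finding", 0.88),
]
auto_accepted, needs_review = route(pre_labels, threshold=0.9)
```

With these sample scores, two items are accepted automatically and two go to annotators, which is how such a setup can reduce manual workload while keeping humans on the uncertain cases.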

How to Choose the Best Data Labeling Tools for Your AI Project

As data labeling requirements change with every AI project, so should the tools you utilize. An AI-first data labeling approach ensures that the tools you choose are equipped with machine learning capabilities to automate repetitive tasks, improve accuracy, and adapt to evolving project needs. Based on our experience, we’ve shortlisted the key factors to consider while selecting the data labeling tools for a particular AI/ML project:

Labeling Type: Your chosen tool must expertly support the required annotation type. Different platforms specialize in different data labeling types. For example, CVAT focuses on image and video labeling, whereas Tagtog emphasizes text labeling. Other popular platforms offering support for multiple labeling types include Label Studio, Labelbox, Prodigy, Amazon SageMaker Ground Truth, and SuperAnnotate.

Management and QA: The tool must allow managers to create the project and track progress. It should support a quality assurance process, where managers can ensure high-quality annotation outcomes by conducting quality checks and validations and coordinating with annotators to improve poor-quality labels.

Ease of Use: The tool must allow multiple annotators to work simultaneously without disturbing each other’s work. It must provide intuitive features and utilize AI/ML to increase the efficiency of creating high-quality annotations. Take demos and free trials of all your shortlisted tools to check if they are easy to use.

Integration with Your Tech Stack: As data labeling is one of the tasks of data preprocessing, your chosen tool must integrate with the rest of your tech stack to ensure a seamless data pipeline and operations. Check the APIs and SDKs that the tool offers to ensure you will not face integration issues.

Security and Data Privacy: The data labeling platform you select must offer enterprise-grade security, access control, and data protection. If your industry-specific data demands compliance with certain regulations, you must ensure your chosen tool supports them.

Assistance and Support: The data labeling tool must provide accurate technical documentation so that you can optimally utilize its specific features and built-in mechanisms. Also, in the event of downtimes or bottlenecks, which are inevitable, the vendor’s support team must respond quickly and provide satisfactory resolutions.

Price: As you need to complete the data labeling process within a budget, comparing the pricing plans of different tools is one of the most important factors to consider. You can compare the features and add-ons that different open-source and paid platforms provide and create a data labeling strategy accordingly.

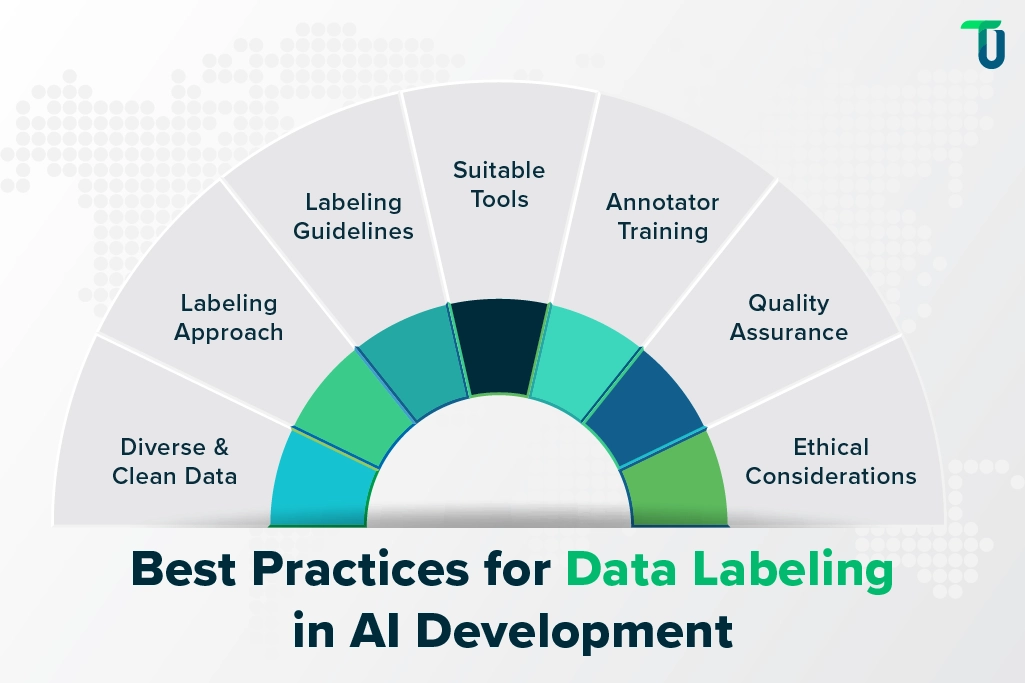

Top Data Labeling Best Practices for Accurate AI Model Training

Here are some of the best practices in Data Labeling we follow at TenUp to ensure we deliver desired results with our AI development projects. Adopting an AI-first data labeling mindset is key to streamlining workflows, improving accuracy, and scaling operations effectively:

Diverse & Clean Data: Collect varied data samples to cover all aspects of your intended use cases and train the AI/ML model comprehensively. Remove bias and potentially harmful content from collected data before it goes through the labeling process to avoid biased and negative labels.

Labeling Approach: Choose between manual, semi-automated, or automated approaches to create data annotations based on your AI/ML project requirements concerning timelines, budget, and resources. The automated approach uses ML models to label data, and the semi-automated approach combines manual and automated labeling.

Labeling Guidelines: Create detailed instructions specifying the labeling types required, what and how to label, and quality standards that must be followed, to avoid pitfalls like inconsistent, unclear, subjective, and biased labeling.

Suitable Tools: As discussed earlier, using the most appropriate tools enhances the speed and accuracy of your data labeling process. Ensure you have a clear roadmap of how and when you will utilize them with human-in-the-loop techniques to complete labeling tasks within the decided timeframe and budget.

Annotator Training: Even if you’ve hired experienced annotators or labelers, they must be trained on the nuances of your unique project requirements. After initial training, interactive training sessions must follow to keep them up to date with improvements in data labeling methods and processes as your project advances. Establish a feedback loop for quick query resolution and instant issue fixing.

Quality Assurance: Establish an effective quality assurance process. It must include tasks like assessing and improving the quality of labels created, resolving disagreements or subjective opinions on particular labels among the annotators, and iteratively refining the labeling process to eliminate errors and inconsistencies.

Ethical Considerations: Follow correct consent procedures when annotating sensitive, personal data to protect the privacy and security of the individuals who contributed it. Ensure the fairness and integrity of labeled data by eliminating bias and promoting equal representation of different viewpoints.
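For the quality assurance practice above, annotator disagreement can be measured and resolved programmatically. The sketch below uses Cohen’s kappa for two annotators and a simple majority vote. These are common choices shown here as an assumption, not necessarily the method used in any specific project, and the function names are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item set")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same class independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


def majority_label(votes: list[str]):
    """Most common label, or None on a tie so a reviewer can decide."""
    ranked = Counter(votes).most_common(2)
    if not ranked:
        return None
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


annotator_a = ["spam", "spam", "ham", "ham", "spam", "ham", "ham", "ham", "spam", "ham"]
annotator_b = ["spam", "ham", "ham", "ham", "spam", "ham", "spam", "ham", "spam", "ham"]
kappa = cohen_kappa(annotator_a, annotator_b)

resolved = majority_label(["spam", "spam", "ham"])
tied = majority_label(["spam", "ham"])
```

In this sample the two annotators agree on 8 of 10 items, yet kappa comes out near 0.58 because some of that agreement would occur by chance. Tied votes return `None`, so they can be escalated to a reviewer instead of being resolved arbitrarily.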

Achieve AI Success with AI-First Data Labeling Tools and Strategies

Now that you know that an AI solution is only as smart as AI engineers make it, we hope that you will not underestimate the importance of data labeling for AI development success. By adopting an AI-first data labeling approach, you can ensure faster, more accurate, and scalable AI model training, setting the stage for long-term success. We’ve discussed how using the right tools improves the accuracy and speed of your data labeling process. But you need an experienced AI development partner to choose the right tools along with a winning data labeling strategy and approach. Our experience has taught us that every project is unique and requires customized solutions. See how our comprehensive AI Services and Solutions deliver tangible benefits to our clients.
