Why LLM Evaluation and Observability Are Critical for AI Success

LLM-based applications often integrate Retrieval Augmented Generation (RAG) systems and AI Agents, which increases their complexity. LLM Evaluation and Observability are critical to building high-quality AI solutions that are accurate and reliable, meet your business goals, and satisfy the needs and expectations of your system’s end-users. Done well, LLM Evaluation and Observability help minimize costs, improve security, fix bugs, and enhance the performance of your LLM-based application.

LLMs depend heavily on context and on the complex processes around them in the application. They also produce non-deterministic outputs: the same prompt can yield different responses, and there is often more than one correct and acceptable answer. During development, LLM responses can be evaluated by testing them on extensive datasets of questions and answers. But once the LLM-based application moves into production, you no longer control which questions users will ask or how the application will respond. That’s why most issues arise in the production stage.

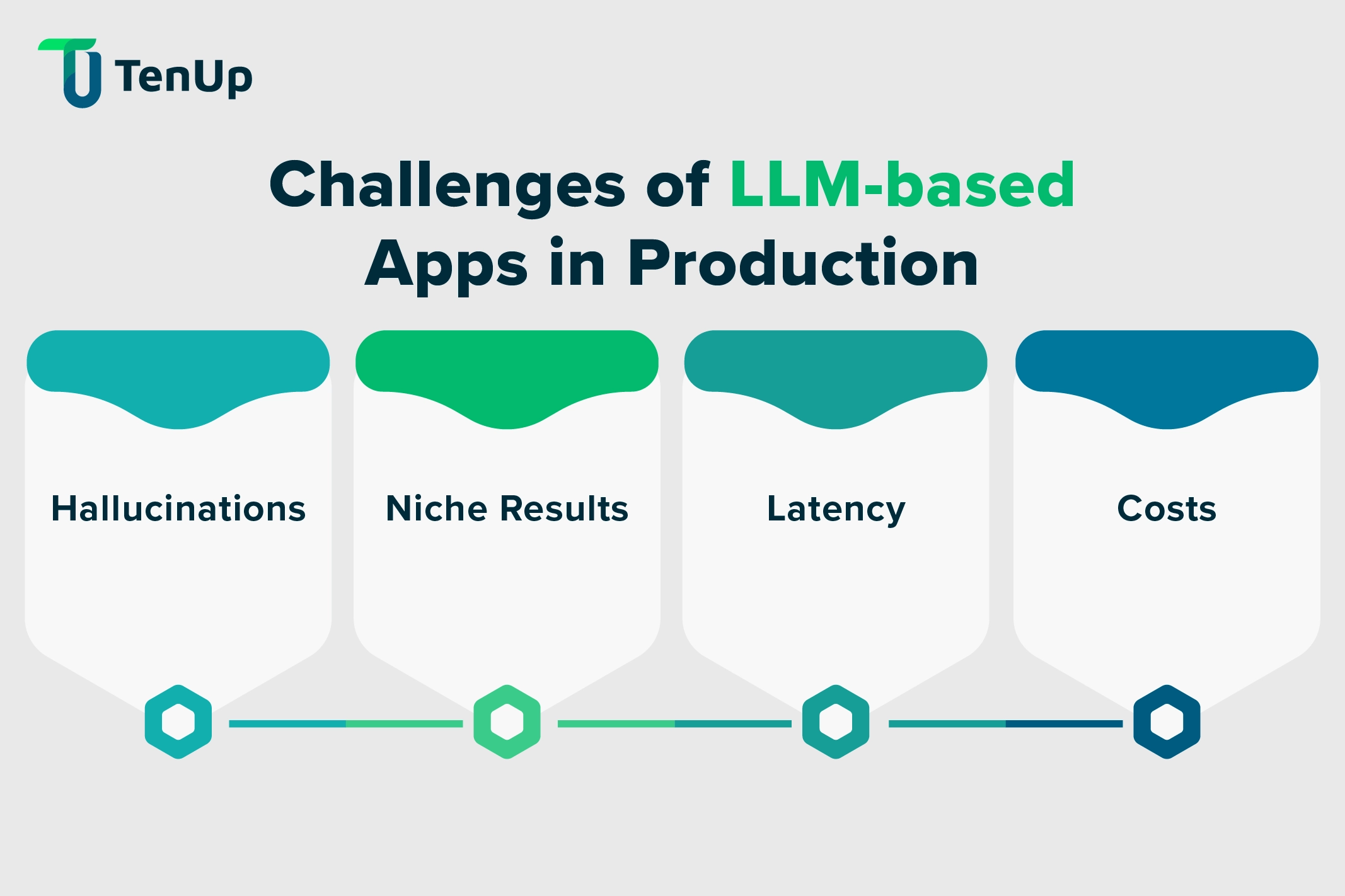

The most common challenges LLM-based applications face in production include:

Hallucinations: LLMs sometimes generate fabricated or factually incorrect information, called hallucinations, especially when they fail to understand a prompt or cannot respond to it correctly. Hallucinations undermine the application’s reliability.

Niche Results: Most AI systems are built on foundation models, so what sets your application apart from your competitors is the level of customization. If your chatbot cannot answer your users’ niche queries, you are not getting value for your money.

Latency: Response speed directly affects user experience in systems like chatbots. This challenge comes to the fore in production, when real users interact with the system, so you need to identify which parts of the pipeline cause delays.

Costs: LLMs process natural language by encoding it as sequences of tokens, and providers typically bill for the input and output tokens consumed by each LLM call. If these expensive LLM invocations are not optimized, the cost of running the LLM-based application increases.
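Since billing is typically per token, the cost of a single call is simple arithmetic. The sketch below is illustrative only: the `Pricing` class, the `request_cost` helper, and the per-million-token prices are hypothetical placeholders rather than any provider's actual rates, and real token counts would come from your provider's usage data or tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    # Hypothetical prices in USD per 1M tokens; substitute your provider's real rates.
    input_per_million: float
    output_per_million: float


def request_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Cost of one LLM call, with input and output tokens billed at separate rates."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


pricing = Pricing(input_per_million=2.50, output_per_million=10.00)  # hypothetical

# The prompt template is sent on every call, so its length multiplies with traffic.
verbose = request_cost(input_tokens=3_000, output_tokens=300, pricing=pricing)
concise = request_cost(input_tokens=800, output_tokens=300, pricing=pricing)
print(f"verbose: ${verbose:.4f}, concise: ${concise:.4f} per call")
print(f"difference over 100,000 calls: ${(verbose - concise) * 100_000:,.2f}")
```

With these assumed prices, trimming the prompt from 3,000 to 800 input tokens roughly halves the per-call cost, which is why prompt length is one of the first things worth optimizing.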

This list of challenges is just the tip of the iceberg. AI developers are well aware of the horrors they face in production. That’s why an experienced AI developer will never underestimate the need for LLM Evaluation and Observability.

What is LLM Evaluation? Metrics, Tools, and Approaches

LLM Evaluation is the process of assessing the performance, accuracy, and reliability of Large Language Models (LLMs) using metrics-based frameworks. It ensures LLMs meet business and user requirements by benchmarking outputs against predefined standards.

LLM Evaluation involves a complete assessment of the resources, capabilities, and performance of the LLM-based application to determine whether it meets expectations for its intended use cases.

Metrics-based evaluations are currently preferred over traditional evaluation methods because they are repeatable and can be automated. Tools and frameworks like Phoenix, DeepEval, and TruLens automate these evaluations by assigning reference scores based on specific benchmarks. The application’s evaluation scores are then compared against these reference scores to gauge its performance.

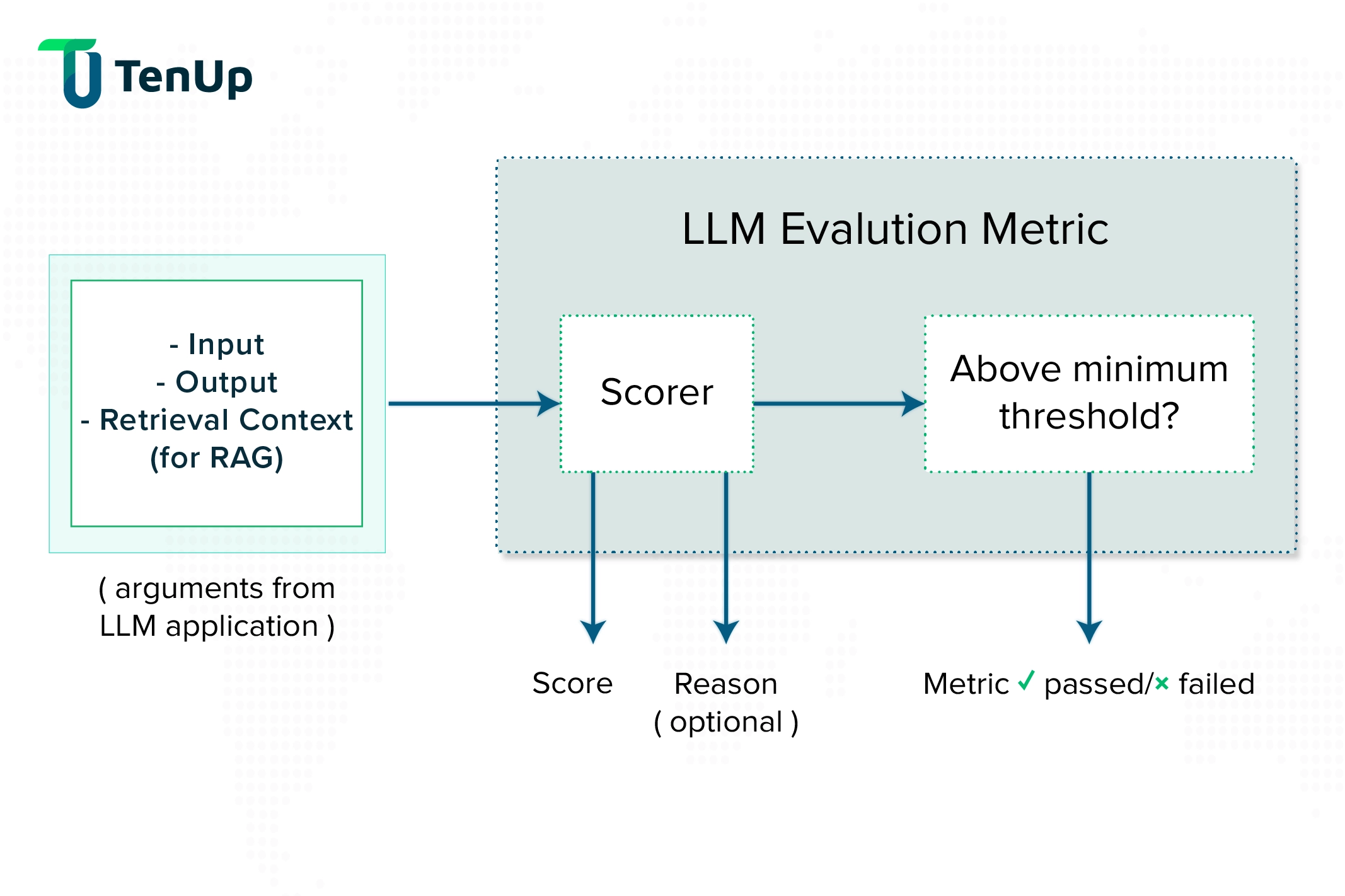

The image above illustrates how the quality of the LLM’s input, output, and retrieved context is evaluated and scored using a specific metric and then compared with a benchmarked reference score.
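To make the score-versus-reference idea concrete, here is a minimal sketch of a metric evaluator: a metric function returns a score between 0 and 1, and a test case passes when its score meets the reference threshold. The lexical-overlap "context relevance" metric is a deliberately crude stand-in chosen so the sketch runs without dependencies; tools such as Phoenix, DeepEval, and TruLens typically rely on LLM judges or embeddings instead. The function names and the 0.5 threshold are assumptions for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable


def words(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for semantic comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def context_relevance(query: str, context: str) -> float:
    """Toy metric in [0, 1]: share of query words that also appear in the context."""
    q = words(query)
    return len(q & words(context)) / len(q) if q else 0.0


@dataclass
class EvalResult:
    metric: str
    score: float
    threshold: float  # the reference score this case is benchmarked against

    @property
    def passed(self) -> bool:
        return self.score >= self.threshold


def evaluate(name: str, metric: Callable[[str, str], float],
             query: str, context: str, threshold: float) -> EvalResult:
    """Score one test case and compare it with the reference score."""
    return EvalResult(name, metric(query, context), threshold)


query = "What is the refund window for annual plans?"
contexts = [
    "Annual plans have a refund window of 30 days.",
    "Our office is closed on public holidays.",
]
results = [evaluate("context_relevance", context_relevance, query, c, threshold=0.5)
           for c in contexts]
for r in results:
    print(f"{r.metric}: {r.score:.3f} -> {'PASS' if r.passed else 'FAIL'}")
```

Keeping the metric as a plain callable makes it easy to swap the toy scorer for a stronger one without changing how results are benchmarked.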

In the development stage, evaluations help assess whether the language model is ready to move into production. The process starts with selecting the right foundation models for specific applications and training them to achieve the desired outcomes.

The language model’s performance, accuracy, fluency, and relevance are evaluated, and the results are used to improve its training. After training, the LLM is evaluated again, and this loop continues until the LLM is production-ready. The aim is to push the LLM to meet demanding industry- and business-specific expectations.

When the LLM moves into production or is integrated into the application, LLM Evaluation becomes part of the broader LLM Observability process. At this stage, the focus shifts to evaluating the components that keep the application working efficiently in real-life situations.

Because every AI application serves different use cases, there is no one-size-fits-all evaluation solution, so automated metrics-based evaluations need to be tailored to the application. Frameworks and tools support such customization by offering a wide range of evaluations based on different metrics.

Top Metrics for LLM Evaluations: Measure AI Performance Effectively

- Context Relevance: Determines if the retrieved context is related to the user’s query.

- Answer Relevance: Evaluates if the LLM output relates to the user’s question and is helpful.

- Groundedness/Faithfulness: Checks if the LLM output aligns with the retrieved context.

- Hallucination: Checks if the language model generates factually correct information.

- Toxicity: Ensures the response does not contain any offensive or harmful content.

- Bias: Ensures the LLM response is free of bias related to age, gender, race, politics, religion, etc.

- Summarization: Evaluates the results of the summarization task on factual correctness and language accuracy.

- AI vs Human (Ground Truth): Identifies strengths and weaknesses of LLM-generated outputs by comparing them with human-generated answers.

- Conversation Completeness: Evaluates whether the LLM satisfied the user’s needs over the course of the conversation.

- Conversation Relevancy: Checks whether all the responses within the conversation were related to the user’s queries.

- Knowledge Retention: Examines how well the LLM retains factual information the user provides over the course of the conversation.

This is not a comprehensive list of metrics used for LLM evaluations. Depending on the approaches and methods adopted by developers, they could use many different metrics.
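Conversation-level metrics such as Conversation Relevancy aggregate per-turn judgments. As a minimal sketch, assuming some per-turn relevance judge, the score can be computed as the fraction of turns whose response is judged relevant to the user message that prompted it. The `keyword_judge` below is a hypothetical stand-in for an LLM judge, and real tools may also take earlier turns into account when judging each response.

```python
import re
from typing import Callable

Turn = tuple[str, str]  # (user message, assistant response)


def content_words(text: str) -> set[str]:
    """Words of four or more letters, lowercased (a crude proxy for topic words)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= 4}


def keyword_judge(user_msg: str, response: str) -> bool:
    """Hypothetical stand-in judge: a response counts as relevant if it shares a
    content word with the user message. In practice this would be an LLM judge."""
    return bool(content_words(user_msg) & content_words(response))


def conversation_relevancy(turns: list[Turn],
                           judge: Callable[[str, str], bool]) -> float:
    """Fraction of turns whose response is judged relevant to its user message."""
    if not turns:
        return 0.0
    return sum(judge(user, reply) for user, reply in turns) / len(turns)


conversation: list[Turn] = [
    ("How do I reset my password?", "Open Settings and choose Reset password."),
    ("Does the reset link expire?", "Yes, the reset link expires after 24 hours."),
    ("Can I change my username too?", "Our office hours are 9 to 5."),
]
print(f"{conversation_relevancy(conversation, keyword_judge):.2f}")
```

The third response ignores the question, so two of three turns pass and the conversation scores about 0.67.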

Some approaches for LLM evaluation include user-centric evaluations, task-specific evaluations, system-specific evaluations, ethical and fairness evaluations, interpretability evaluations, adversarial evaluations, etc.

Apart from metrics-based evaluations, developers also use other methods like LLM-based evaluation (often called “LLM-as-a-judge”), where a separate, typically more capable LLM evaluates the outputs of the LLM-based application.
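A minimal sketch of LLM-based evaluation: build a grading prompt, send it to a judge model, and parse a numeric score from the reply. The `call_judge` callable is a hypothetical placeholder for whichever model client you use, and the rubric wording, the 1 to 5 scale, and the `SCORE: <n>` reply format are assumptions for illustration, not any particular tool's format.

```python
import re
from typing import Callable, Optional

JUDGE_TEMPLATE = """You are grading an AI assistant's answer.
Question: {question}
Retrieved context: {context}
Answer: {answer}

Rate how faithful the answer is to the context on a scale of 1 (contradicts
or invents facts) to 5 (fully supported). Reply with one line: SCORE: <1-5>"""


def judge_faithfulness(question: str, context: str, answer: str,
                       call_judge: Callable[[str], str]) -> Optional[int]:
    """Ask a judge model for a 1-5 faithfulness score; return None if unparseable."""
    prompt = JUDGE_TEMPLATE.format(question=question, context=context, answer=answer)
    reply = call_judge(prompt)
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def fake_judge(prompt: str) -> str:
    """Hypothetical stub standing in for a real judge-model API call."""
    return "SCORE: 2"


score = judge_faithfulness(
    question="When was the company founded?",
    context="The company was founded in 2012 in Austin.",
    answer="It was founded in 2008 in Boston.",
    call_judge=fake_judge,
)
print(score)
```

Returning `None` for replies that don't follow the format keeps malformed judge output from being silently counted as a score.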

LLM Observability: How to Monitor and Optimize AI Systems

LLM Observability is the practice of monitoring and analyzing LLM-based applications in real-time to ensure optimal performance. It provides visibility into inputs, processes, and outputs, helping businesses fine-tune prompts and improve response quality.

LLM Observability is an approach or practice of gaining complete visibility into the behavior and responses of an LLM-based application. It involves continuous monitoring, logging, tracing, and analyzing the operations and performance of the app’s every layer (Processes, Prompts, and Outputs).

Its purpose is to collect data on how everything works within the LLM-based application, gain valuable insights, and use them to guide optimizations, such as prompt refinement or model adjustments, that improve the system’s performance.

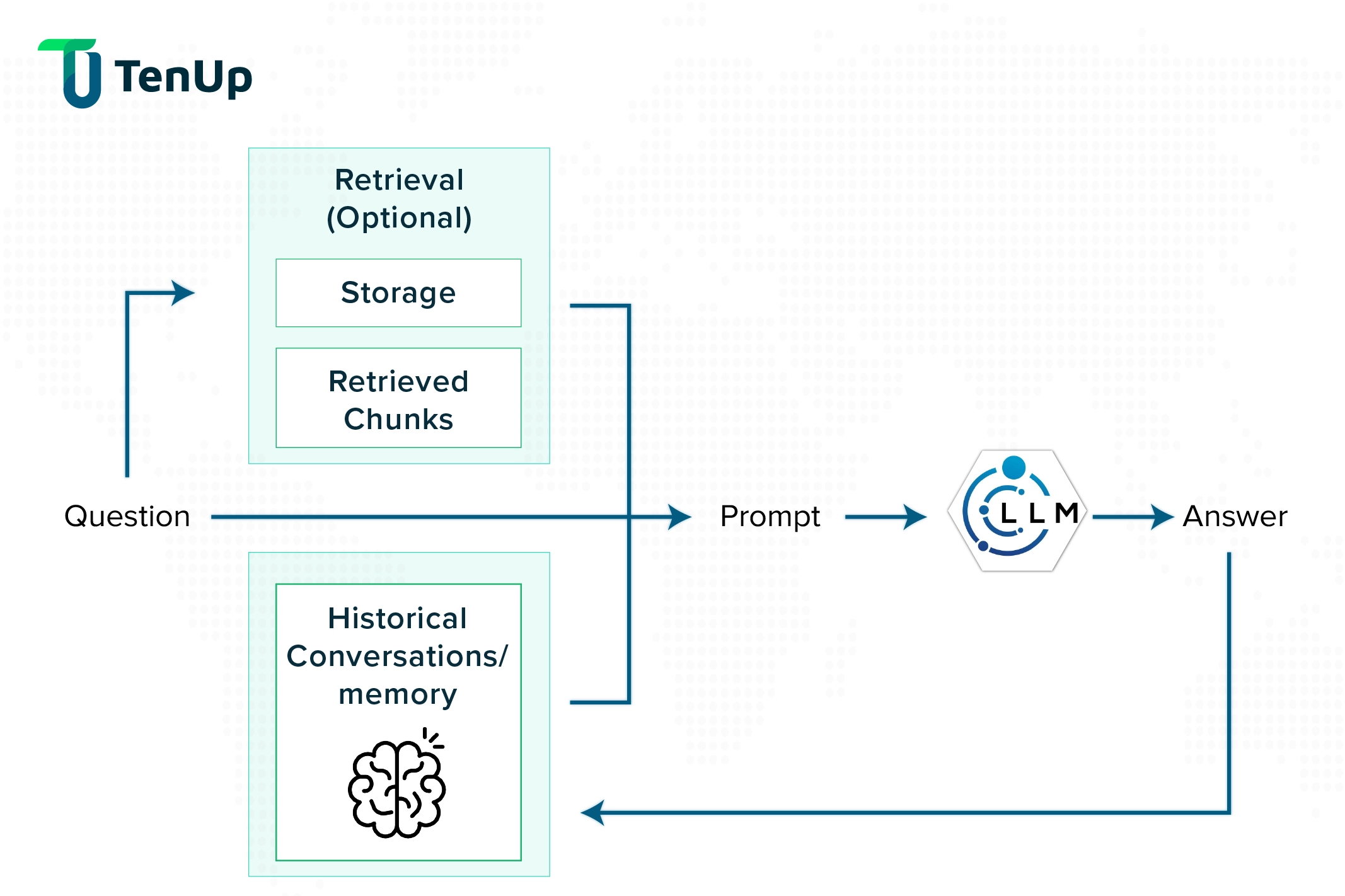

Let’s look at a simple example. The illustration above shows how a typical chatbot works.

As seen in the above image, when a user asks the chatbot a question, the system retrieves relevant information and combines it with the question and the conversation history into an enriched prompt, and the LLM then generates a response. So, if the chatbot doesn't give a satisfactory response, the failure could have occurred at any stage of this process.
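The chatbot flow can be sketched as three separate stages, which is what makes each one individually observable. Everything here is hypothetical: the in-memory `KNOWLEDGE_BASE`, the word-overlap `retrieve` function, and the `generate` stub stand in for a real vector store and LLM call.

```python
import re

# Hypothetical knowledge base; a real system would query a vector store.
KNOWLEDGE_BASE = [
    "Refunds are available within 30 days of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Stage 1: rank documents by word overlap with the question (toy retriever)."""
    q = words(question)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str],
                 history: list[tuple[str, str]]) -> str:
    """Stage 2: enrich the prompt with retrieved context and conversation history."""
    past = "\n".join(f"User: {u}\nAssistant: {a}" for u, a in history)
    docs = "\n".join(context)
    return (f"Context:\n{docs}\n\nConversation so far:\n{past}\n\n"
            f"User: {question}\nAssistant:")


def generate(prompt: str) -> str:
    """Stage 3: placeholder for the actual LLM call."""
    return "(LLM response)"


history = [("Hi", "Hello! How can I help?")]
question = "How many days do I have to get refunds?"
context = retrieve(question)
prompt = build_prompt(question, context, history)
answer = generate(prompt)
print(prompt)
```

Each stage is a distinct failure point: the retriever can return the wrong document, the prompt can drop needed history or context, and the generation step can still produce a poor answer from good inputs.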

To implement LLM Observability for this system, we’ll have to:

- Instrument the system to log every activity, monitor every event, and evaluate every response, so that failures can be investigated.

- Enable the system to distinguish between good and bad requests and responses. This will build a database over time, highlighting the strengths and weaknesses of the system.
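Both steps can be sketched with a decorator that records each stage's inputs, output, status, and duration, plus a helper that attaches a good/bad label afterwards. This is a minimal in-memory illustration: `instrument`, `EVENT_LOG`, and `label_response` are hypothetical names, and a production system would typically ship these events to an observability backend (for example via OpenTelemetry) rather than keep them in a Python list.

```python
import functools
import time
from typing import Any, Callable

EVENT_LOG: list[dict[str, Any]] = []  # hypothetical in-memory store for logged events


def instrument(stage: str) -> Callable:
    """Decorator that records inputs, output, status, and duration of a pipeline stage."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            event = {"stage": stage, "inputs": {"args": args, "kwargs": kwargs}}
            start = time.perf_counter()
            try:
                event["output"] = fn(*args, **kwargs)
                event["status"] = "ok"
                return event["output"]
            except Exception as exc:
                event["status"] = f"error: {exc!r}"
                raise
            finally:
                # Runs on success and failure alike, so every call is logged.
                event["duration_ms"] = (time.perf_counter() - start) * 1000
                EVENT_LOG.append(event)
        return wrapper
    return decorator


def label_response(event_index: int, good: bool) -> None:
    """Attach a good/bad label (e.g. from user feedback or an evaluator) to an event."""
    EVENT_LOG[event_index]["label"] = "good" if good else "bad"


@instrument("generate")
def generate(prompt: str) -> str:
    return "(LLM response)"  # hypothetical stand-in for the LLM call


generate("What is your refund policy?")
label_response(-1, good=False)
bad_events = [e for e in EVENT_LOG if e.get("label") == "bad"]
print(len(bad_events), "bad response(s) logged")
```

Over time, the labeled events form the database of strengths and weaknesses described above, and filtering by `stage` and `label` points to where failures cluster.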

Essential Components of LLM Observability: Optimize AI Systems

In addition to the traditional observability components of metrics, events, logs, and traces (called MELT), LLMs also need the following for accurate observability:

User Feedback and Prompts: Analyzing user feedback is a fundamental way to check whether the system provides satisfactory responses, so it is critical to store user feedback, even if it's just a small gesture like a thumbs-up.

Concise, well-designed prompts are key to improving LLM responses. Lengthy and ineffective prompt templates increase token usage and, consequently, your costs. LLMs also have a limited context window, so prompt templates must be optimized to fit within it. Users write prompts too, so log every prompt and the resulting response to learn which prompts produce the desired responses.
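One way to combine stored feedback with prompt logging is to group logged records by prompt template and compare approval rates and token usage. A minimal sketch, assuming each record carries a template ID, an input-token count, and an optional thumbs-up/down value; the `PromptRecord` fields, template IDs, and sample records are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptRecord:
    template_id: str                  # which prompt template version was used
    prompt: str
    response: str
    input_tokens: int
    thumbs_up: Optional[bool] = None  # None means the user gave no feedback


def template_report(records: list[PromptRecord]) -> dict[str, dict[str, float]]:
    """Per template: approval rate among rated responses and mean input tokens."""
    groups: dict[str, list[PromptRecord]] = defaultdict(list)
    for record in records:
        groups[record.template_id].append(record)
    report = {}
    for template_id, recs in groups.items():
        rated = [r.thumbs_up for r in recs if r.thumbs_up is not None]
        report[template_id] = {
            "approval_rate": sum(rated) / len(rated) if rated else float("nan"),
            "avg_input_tokens": sum(r.input_tokens for r in recs) / len(recs),
        }
    return report


# Hypothetical sample records for illustration only.
records = [
    PromptRecord("v1-verbose", "How do I cancel?", "Go to Billing.", 1200, True),
    PromptRecord("v1-verbose", "Can I pause?", "Please contact us.", 1180, False),
    PromptRecord("v2-concise", "How do I cancel?", "Go to Billing.", 400, True),
    PromptRecord("v2-concise", "Can I pause?", "Yes, under Billing.", 410, True),
    PromptRecord("v2-concise", "Refund?", "Within 30 days.", 395, None),
]
for template_id, stats in template_report(records).items():
    print(template_id, stats)
```

Responses without feedback are excluded from the approval rate but still count toward token usage, so a template can be judged on both quality and cost.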

Traces and Spans: A ‘Trace’ is the record of the path a request takes as it passes through the different stages of the LLM application. It tells the story of what happened in the application between a user or system request and the LLM response.

A ‘Span’ is the record of a single operation among the many that take place within the application during a Trace. Spans give granular visibility into what happened when an operation was triggered or executed, and help identify performance bottlenecks.
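To show how traces and spans relate, here is a toy tracer in which one trace groups all spans of a request and each span records its parent, start time, and end time. It is a hypothetical, dependency-free sketch that only mimics the concepts; in practice you would use OpenTelemetry or a vendor SDK rather than the `Tracer` and `Span` classes defined here, and `time.sleep` merely simulates real work.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    parent: Optional[str]  # span_id of the enclosing span, None for the root
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:8])
    start: float = 0.0
    end: float = 0.0

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


class Tracer:
    """Toy tracer: one instance (one trace) per request, one span per operation."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: list[Span] = []
        self._stack: list[Span] = []

    @contextmanager
    def span(self, name: str):
        parent = self._stack[-1].span_id if self._stack else None
        s = Span(name, self.trace_id, parent, start=time.perf_counter())
        self._stack.append(s)
        try:
            yield s
        finally:
            s.end = time.perf_counter()
            self._stack.pop()
            self.spans.append(s)


tracer = Tracer()
with tracer.span("chat_request"):
    with tracer.span("retrieve"):
        time.sleep(0.01)  # simulated vector search
    with tracer.span("llm_generate"):
        time.sleep(0.1)   # simulated LLM call

children = [s for s in tracer.spans if s.parent is not None]
slowest = max(children, key=lambda s: s.duration_ms)
print(f"bottleneck: {slowest.name} ({slowest.duration_ms:.0f} ms)")
```

Because every span shares the trace ID and points to its parent, the whole request can be reconstructed as a tree, and comparing child-span durations shows which operation causes the delay.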

Retrievals: To increase the level of customization and enable your LLM-based application to answer niche questions, you need to connect it to your knowledge base. This is usually done by integrating a RAG system, which adds to the complexity of the application’s workflow. Analyzing retrievals is critical for LLM Observability because it shows how relevant the returned information is.
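When some logged queries have relevance labels (from human review or a judge model, for instance), retrieval quality can be quantified with standard information-retrieval metrics such as precision@k, recall@k, and hit rate. A minimal sketch; the document IDs and labels in `samples` are hypothetical.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k retrieved documents that are relevant (divides by k)."""
    return sum(doc in relevant for doc in retrieved[:k]) / k


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top k."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def hit_rate(samples: list[tuple[list[str], set[str]]], k: int) -> float:
    """Fraction of queries with at least one relevant document in the top k."""
    if not samples:
        return 0.0
    hits = sum(any(doc in relevant for doc in retrieved[:k])
               for retrieved, relevant in samples)
    return hits / len(samples)


# Hypothetical logged retrievals: (ranked document IDs returned, IDs labeled relevant)
samples = [
    (["doc7", "doc2", "doc9"], {"doc2"}),
    (["doc4", "doc1", "doc3"], {"doc8"}),
]
for retrieved, relevant in samples:
    print(precision_at_k(retrieved, relevant, k=3), recall_at_k(retrieved, relevant, k=3))
print("hit rate@3:", hit_rate(samples, k=3))
```

A query like the second one, where none of the retrieved documents is relevant, is exactly the case where the LLM is likely to give an unhelpful or hallucinated answer.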

Evaluations: The data collected from the components discussed above then needs to be evaluated to identify performance bottlenecks. Developers need to thoroughly evaluate the LLM-based application to address the challenges it faces in production and beyond. We’ve already discussed how evaluations work in the section above.

Fine-tuning: Ultimately, you need to fine-tune your language model by implementing the findings and recommendations of the LLM Observability process. Fine-tuning creates new model versions, which in turn require observability to ensure high performance.

The LLM Observability process involves all of the components discussed above. Developers use tools like Arize Phoenix, TruLens, DeepEval, and Confident AI to facilitate and speed up observability for LLM-based applications.

Conclusion: Get Reliable AI Systems with LLM Evaluation and Observability

We’ve discussed the challenges LLM-based applications face in production and how Evaluation and Observability help overcome them. Given how these practices work, their importance cannot be overstated, and the need for them grows with the complexity of your AI system. A highly complex AI system combining LLMs, RAG, AI Agents, and Agentic Networks will therefore require more robust evaluation and observability.

Explore our comprehensive Artificial Intelligence Services with a focus on delivering custom solutions tailored to our clients’ specific needs and expectations. With a team of experienced and skilled AI developers and solutions architects, we’ve integrated custom AI solutions into our clients’ systems. Check out our case studies here.
