What Is Stable Diffusion and How Does It Work?

Introduced in 2022, Stable Diffusion is an open-source Latent Diffusion Model (LDM) created by the CompVis group at LMU Munich, Stability AI, Runway, and other collaborators. It uses text prompts, or image prompts combined with text descriptions, to generate high-resolution images, and it is also used to produce videos and animations.

The Stable Diffusion model is usually utilized as a framework to build generative art systems and other AI tools like Artbreeder, NightCafe Studio, etc., that aim to meet the use cases of text-to-image and image-to-image generation. So, when the user provides a text prompt or an image plus text prompt, the Stable Diffusion-based AI system generates a high-quality image matching the input description.

Even though diffusion models have been around for quite some time, what’s so special about Stable Diffusion that it transformed the text-to-image generation landscape?

Most diffusion models, such as Google’s Imagen or OpenAI’s DALL-E 2, operate in the Image Space (also called Pixel Space). A 512×512 image with three color channels holds 512 × 512 × 3 = 786,432 individual values, so the model has to work in a 786,432-dimensional space. Running diffusion at this scale demands a great deal of compute and memory, typically more than a single consumer GPU can comfortably provide.

Stable Diffusion, however, operates in a compressed, low-dimensional Latent Space. The image representation in the Latent Space is only 4×64×64 (16,384 values), which is 48 times smaller than in the Image Space. This increases the model’s processing speed and reduces computational and memory requirements, so the model can run on many laptops and desktops equipped with a reasonably capable GPU (Graphics Processing Unit). This accessibility has helped drive the rapid commercial adoption of Stable Diffusion.
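
The size comparison above can be checked with a few lines of arithmetic. This is only a small illustrative sketch; the variable and function names are our own and are not part of any Stable Diffusion API.

```python
# Number of values needed to represent one image in each space.
image_shape = (3, 512, 512)   # channels x height x width (Image Space)
latent_shape = (4, 64, 64)    # channels x height x width (Latent Space)


def num_values(shape):
    """Multiply the dimensions together to count individual values."""
    total = 1
    for dim in shape:
        total *= dim
    return total


image_values = num_values(image_shape)        # 786,432
latent_values = num_values(latent_shape)      # 16,384
compression = image_values // latent_values   # 48

print(image_values, latent_values, compression)
```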

Core Components of the Stable Diffusion Model

To understand how Stable Diffusion works, let’s explore some core concepts and its key architectural components:

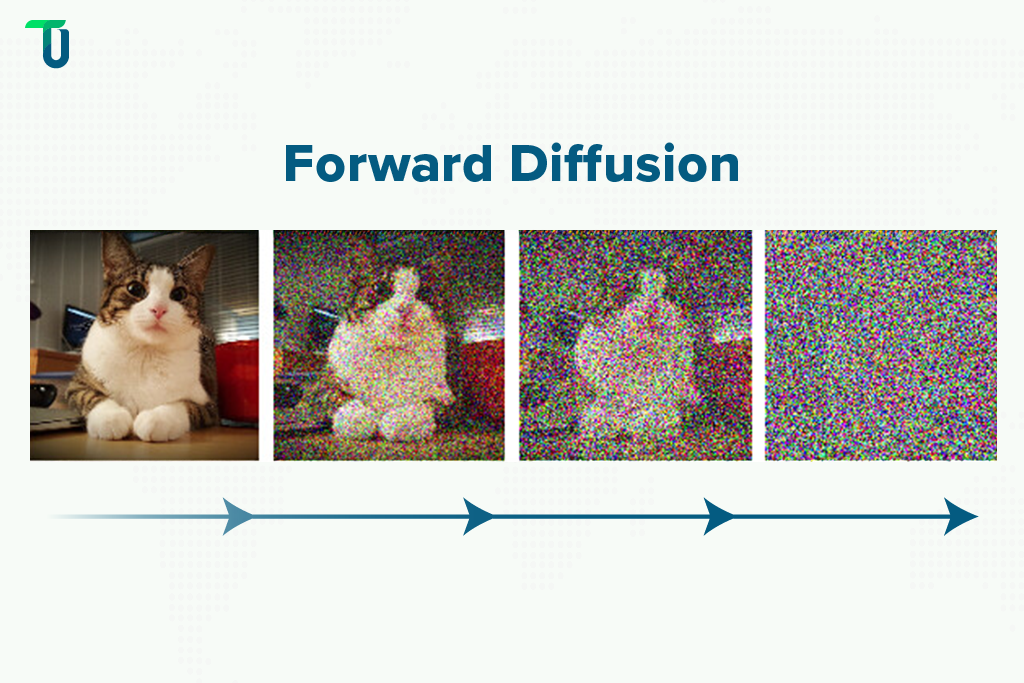

Forward Diffusion: This process is used to train a diffusion model on different images. As seen in the image of a cat below, noise is gradually added to an image until it becomes indistinguishable from random noise. Billions of images are noised in this way and used to train the model. The Stable Diffusion model uses such random noise as the starting point when generating an image from a text prompt, which we shall discuss later.

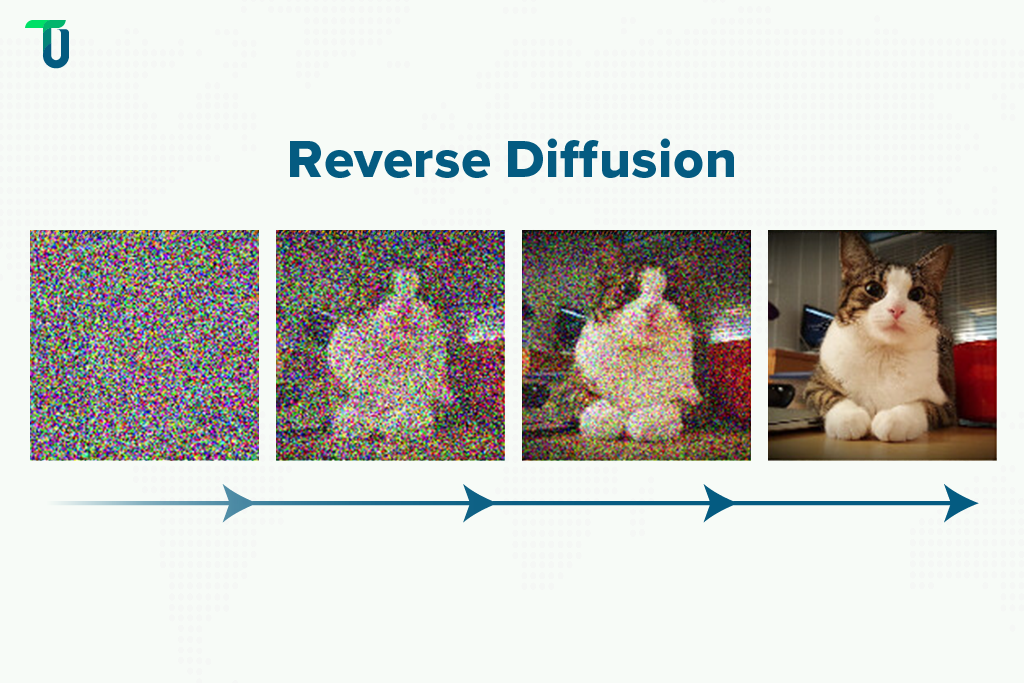
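
To make Forward Diffusion more concrete, here is a minimal NumPy sketch of gradually adding noise. It assumes the standard DDPM-style formulation with a linear noise schedule; the schedule values, the function names, and the random array standing in for an image are illustrative assumptions, not details taken from Stable Diffusion’s training code.

```python
import numpy as np


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors for an assumed linear schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: mix the clean input with Gaussian noise."""
    noise = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return xt, noise


rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
x0 = rng.uniform(-1.0, 1.0, size=(4, 64, 64))  # stand-in for a latent image

for t in (0, 250, 999):
    xt, _ = add_noise(x0, t, alpha_bars, rng)
    # Share of the original signal that is still present at step t.
    print(t, round(float(np.sqrt(alpha_bars[t])), 3))
```

By the last step almost none of the original signal remains, which is why the result looks like pure noise.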

Reverse Diffusion: Reversing the diffusion described above involves removing the noise step by step (using a Noise Predictor) to restore a clean image, as shown in the picture below. In Stable Diffusion, the noise is removed under the guidance of the text prompt, so the output matches what the user described. For example, if the user asks for a picture of an astronaut sitting on a bicycle, the model generates an image depicting exactly that.

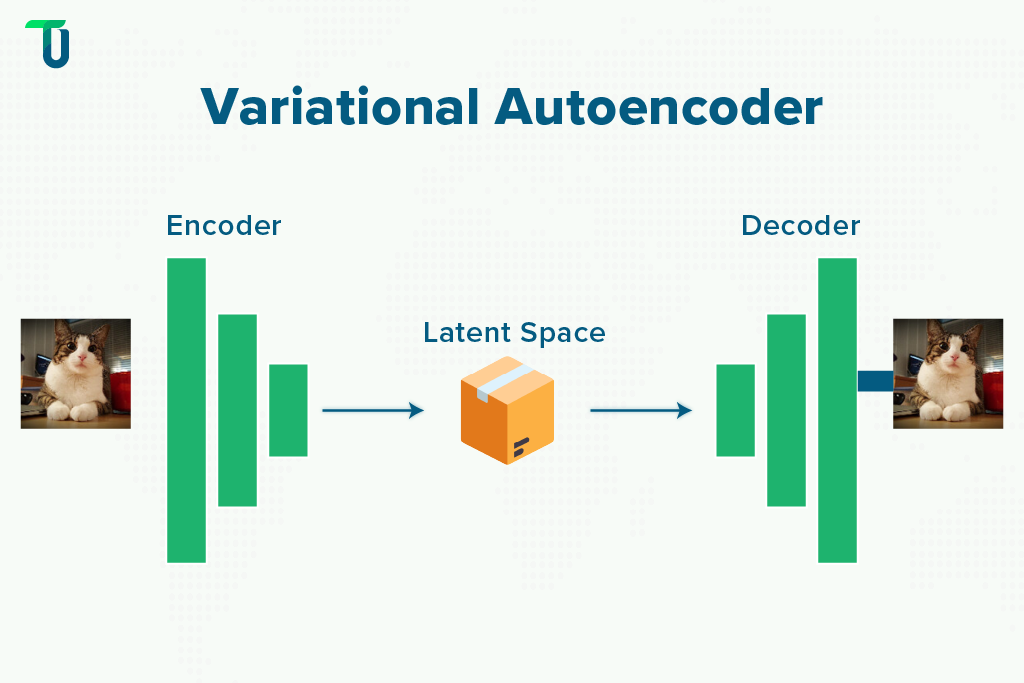

Variational Autoencoder (VAE): One of the key architectural components of the Stable Diffusion model, the VAE consists of two parts: an Encoder and a Decoder. Because Stable Diffusion operates in the comparatively smaller Latent Space, the Encoder compresses a regular image into a lower-dimensional representation. During training, noise is added to this latent representation (Latent Noise, via Forward Diffusion) rather than to the full-size image.

When the user provides a text prompt, the Stable Diffusion model uses Latent Noise as a starting point and applies Reverse Diffusion to generate the desired image. The Decoder restores this newly generated image from the Latent Space back to the Image Space and delivers a high-quality output to the user.

U-Net (Noise Predictor): During the Reverse Diffusion process, a Noise Predictor is used to iteratively remove the noise from the latent image. Stable Diffusion uses a U-Net model built from Residual Neural Network (ResNet) blocks, an architecture originally developed for computer vision, as its Noise Predictor. At each step, the U-Net estimates the noise present in the Latent Space, and that estimate is subtracted, guided by the user-specified description, until the final image emerges.
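
The iterative noise removal can be sketched as follows. This is a toy illustration under stated assumptions: it uses a deterministic DDIM-style update (one of several possible samplers, chosen here for simplicity), an assumed linear noise schedule, and a hypothetical `oracle_noise_predictor` that simply returns the true noise in place of a trained U-Net. It also starts from a noised copy of a known latent rather than from pure noise, so the result can be checked.

```python
import numpy as np

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 1000)   # assumed linear schedule
alpha_bars = np.cumprod(1.0 - betas)

x0_true = rng.uniform(-1.0, 1.0, size=(4, 64, 64))  # stand-in clean latent
true_noise = rng.standard_normal(x0_true.shape)


def oracle_noise_predictor(latent, t):
    """Hypothetical stand-in for the U-Net: returns the exact noise."""
    return true_noise


def ddim_step(latent, t, t_prev, predict_noise):
    """One deterministic denoising step from timestep t to t_prev."""
    eps = predict_noise(latent, t)
    ab_t = alpha_bars[t]
    ab_prev = alpha_bars[t_prev] if t_prev >= 0 else 1.0
    # Estimate the clean latent, then re-noise it to the lower noise level.
    x0_hat = (latent - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)
    return np.sqrt(ab_prev) * x0_hat + np.sqrt(1.0 - ab_prev) * eps


timesteps = [999, 749, 499, 249, 0]
latent = (np.sqrt(alpha_bars[999]) * x0_true
          + np.sqrt(1.0 - alpha_bars[999]) * true_noise)
for t, t_prev in zip(timesteps, timesteps[1:] + [-1]):
    latent = ddim_step(latent, t, t_prev, oracle_noise_predictor)

max_error = float(np.max(np.abs(latent - x0_true)))
print(max_error)
```

With a perfect noise estimate the clean latent is recovered almost exactly. A trained U-Net only approximates the noise, which is why real samplers take many small steps.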

Text Conditioning: The user’s text prompt conditions the Noise Predictor to reduce the noise in such a way that the result is the desired image. Stable Diffusion uses the tokenizer of CLIP (OpenAI’s Deep Learning model) to convert the words in the text prompt into numbers (called Tokens; a single word may be split into more than one token), and then converts each token into a 768-value vector (called an Embedding) in Stable Diffusion v1. A Text Transformer processes these vector embeddings and feeds them into the Noise Predictor (U-Net model) so that it can take the user’s description into account.
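
The token and embedding steps can be illustrated with a toy example. Everything here is a simplified assumption: the tiny vocabulary, the whitespace tokenizer, and the random embedding table are hypothetical stand-ins for CLIP’s learned sub-word tokenizer and weights. The 77-token context length is the value used by the CLIP text encoder in Stable Diffusion v1.

```python
import numpy as np

EMBED_DIM = 768   # embedding width quoted in the text
MAX_TOKENS = 77   # assumed context length (CLIP text encoder in SD v1)

# Hypothetical toy vocabulary; the real tokenizer has tens of thousands
# of sub-word entries.
vocab = {
    "<pad>": 0, "<start>": 1, "<end>": 2,
    "an": 3, "astronaut": 4, "sitting": 5, "on": 6, "a": 7, "bicycle": 8,
}


def tokenize(prompt):
    """Map each word to its token id, add markers, and pad to a fixed length."""
    words = prompt.lower().split()
    ids = [vocab["<start>"]] + [vocab[w] for w in words] + [vocab["<end>"]]
    ids = ids[:MAX_TOKENS]
    return ids + [vocab["<pad>"]] * (MAX_TOKENS - len(ids))


rng = np.random.default_rng(0)
# Random stand-in for the learned embedding weights.
embedding_table = rng.standard_normal((len(vocab), EMBED_DIM))

token_ids = tokenize("an astronaut sitting on a bicycle")
embeddings = embedding_table[token_ids]   # one 768-value vector per token

print(token_ids[:8], embeddings.shape)
```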

Based on the core components we’ve discussed above, here’s a step-by-step breakdown of what happens under the hood in Stable Diffusion for text-to-image generation:

- During training, the Encoder compresses the training images into the Latent Space, and Forward Diffusion adds Latent Noise to them so the Noise Predictor can learn to estimate that noise.

- The user provides a text description (prompt) to create a specific image.

- The CLIP tokenizer converts the text prompt into tokens and embeddings, and the Text Transformer feeds them to the Noise Predictor (U-Net model).

- Starting from random Latent Noise and using Reverse Diffusion, the U-Net model reduces the noise as per the user’s prompt and generates the required image in the Latent Space.

- The Decoder restores the image from Latent Space to Image Space and delivers it to the user.
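
The generation-time steps above can be sketched as a shape-level data flow. Every component below is a hypothetical stub: the functions return placeholder arrays of plausible shapes and do not perform real text encoding, noise prediction, or decoding. The sketch only shows the order in which the pieces are called and how the array shapes change.

```python
import numpy as np

rng = np.random.default_rng(0)


def encode_prompt(prompt):
    """Stub text encoder: one 768-value vector per token slot."""
    return rng.standard_normal((77, 768))


def predict_noise(latent, t, text_embeddings):
    """Stub noise predictor; a real U-Net would use t and the embeddings."""
    return 0.1 * latent


def decode(latent):
    """Stub decoder: upsample 64x64 latents by 8 and keep three channels."""
    upsampled = latent.repeat(8, axis=1).repeat(8, axis=2)
    return upsampled[:3]


def generate(prompt, num_steps=20):
    text_embeddings = encode_prompt(prompt)      # prompt -> embeddings
    latent = rng.standard_normal((4, 64, 64))    # random Latent Noise
    for t in reversed(range(num_steps)):         # Reverse Diffusion loop
        latent = latent - predict_noise(latent, t, text_embeddings)
    return decode(latent)                        # Latent Space -> Image Space


image = generate("an astronaut sitting on a bicycle")
print(image.shape)
```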

Popular Applications of Stable Diffusion

The Stable Diffusion model has a variety of applications in AI-based solutions intended for different use cases. Here's what it offers:

Image Generation: You can create customized images using text prompts or conditional inputs. It facilitates both text-to-image and image-to-image generation.

Video and Animation Creation: Short video clips or animations can be created using different design styles and prompts with Stable Diffusion.

Image Super Resolution: It helps turn low-resolution images into high-resolution ones by improving their details, sharpness, overall quality, and resolution.

Domain Adaptation: A model trained on one domain can be adapted to perform well on another by transferring image characteristics or styles from the source to the target domain.

Image Inpainting: It helps reconstruct missing or corrupted details of an image to create complete, coherent visuals.

Image Outpainting: Larger images can be generated by extending an image beyond its original borders while keeping the new content continuous with the original.

Here’s how businesses across industries utilize Stable Diffusion-based AI applications or systems:

Creating Digital Media: Artists utilize it to generate concepts, illustrations, sketches, storyboards, etc. Media studios use it to cut costs in creating art for book covers, games, and films. It is also used to generate videos and animation.

Enhancing Apparel Design: Fashion designers use it to experiment with different color and print variations and design options to create unique apparel designs. They also use it to show their designs to clients in digital format.

Improving Product Design: Whether the product is software or hardware, teams can visualize hypothetical designs from text descriptions. This accelerates early-stage ideation and facilitates 3D rendering.

Advertising and Marketing: Agencies use Stable Diffusion to create on-brand content including iterations of product images, social media posts, and even lifestyle scenes. This cuts costs on expensive photo shoots.

Medical Research: Researchers can identify patterns in genomic sequences or molecular structures by transforming abstract data into more intuitive visuals using Stable Diffusion. It also helps with data augmentation to train AI/ML models by generating a variety of medical imaging scenarios, like different stages of a disease.

How Does Stable Diffusion Benefit Businesses?

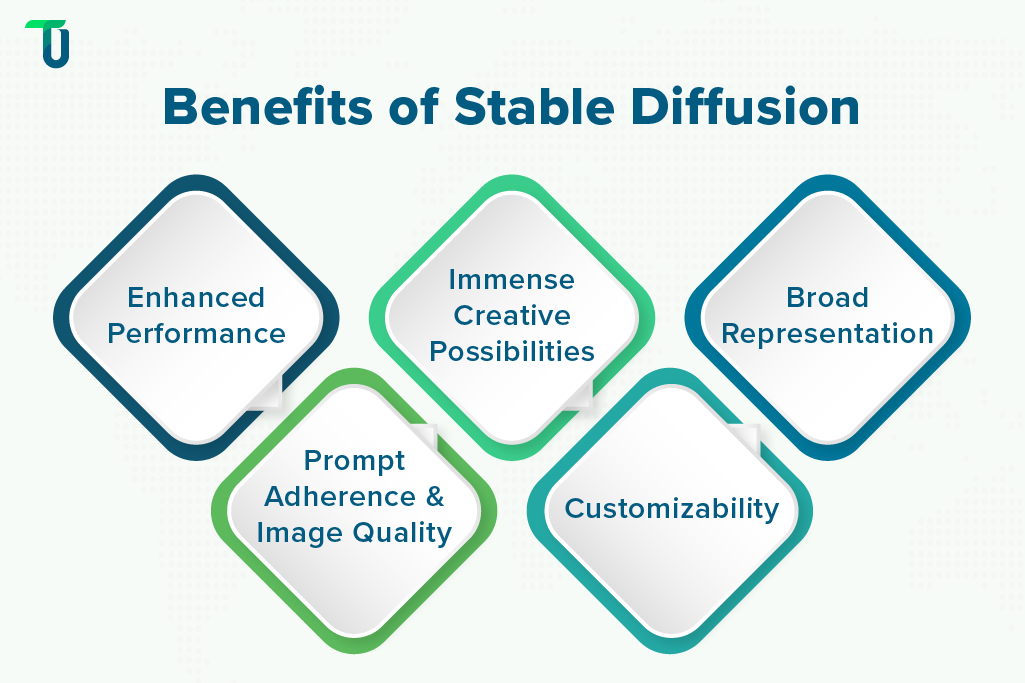

With a variety of models and versions of Stable Diffusion available to meet different needs, here are some key advantages this technology provides:

Enhanced Performance: Unlike many other open-source image generation models, Stable Diffusion 3.5 Medium is compatible with most consumer GPUs and needs only 9.9 GB of VRAM.

Immense Creative Possibilities: Stable Diffusion can produce an array of visual styles, like line art, photography, painting, 3D, and any other style that the user can imagine.

Broad Representation: It can generate images showcasing a variety of skin tones, features, and world identities without requiring extensive input.

Prompt Adherence & Image Quality: Stable Diffusion 3.5 is highly competitive with other large models in inference speed, prompt adherence, and the image quality it generates.

Customizability: Developers can build domain or niche-specific applications to meet specific requirements by tailoring workflows and fine-tuning the Stable Diffusion model.

Despite these benefits, the Stable Diffusion model has some limitations. Image quality can vary with the requested image size, outputs can be inaccurate or biased because of insufficient or low-quality training data, and prompts in languages other than English, its main training language, are interpreted less reliably.

AI developers can mitigate many of these limitations while building custom solutions by applying different fine-tuning methods, like LoRA, DreamBooth, etc., based on their intended use cases.
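
As a rough illustration of why a method like LoRA makes fine-tuning affordable, the sketch below shows its core idea: the pretrained weight matrix stays frozen, and only a small low-rank update is trained. The layer size, rank, and scaling value are arbitrary assumptions chosen for the example, and the code is a conceptual NumPy sketch rather than how any particular fine-tuning library implements it.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank, alpha = 768, 768, 8, 8.0   # illustrative sizes only

W = rng.standard_normal((d_out, d_in))         # frozen pretrained weight
A = rng.standard_normal((rank, d_in)) * 0.01   # trainable low-rank factor
B = np.zeros((d_out, rank))                    # trainable, starts at zero


def lora_forward(x):
    """Frozen layer output plus the scaled low-rank correction."""
    return x @ W.T + (alpha / rank) * (x @ A.T) @ B.T


x = rng.standard_normal((2, d_in))
full_params = W.size             # parameters in the frozen layer
lora_params = A.size + B.size    # parameters actually trained

print(full_params, lora_params)
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the original one, and training only has to update a small fraction of the parameters.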

Build Stable Diffusion-based Custom AI Solutions with TenUp

Stable Diffusion has transformed the way images are created for commercial purposes by powering AI-based tools and platforms that help businesses create high-quality visuals. As this technology continues to advance, integrating custom solutions into business operations can provide time and cost benefits in design and digital media generation and enable endless creative possibilities for innovation.

Tailoring it for your intended use cases is key to benefiting from this model. Here’s where our experienced AI developers can help. As a part of our AI engineering services, we can fine-tune the Stable Diffusion model and build a custom AI solution that integrates with your existing infrastructure and provides accurate outcomes. Please explore our varied case studies, which show how we have helped clients across industries get result-oriented, high-performance AI-based solutions for their specific requirements.

Frequently Asked Questions

What is Stable Diffusion, and how is it different from other AI image generation models?

Stable Diffusion is an open-source Latent Diffusion Model (LDM) introduced in 2022. Unlike models such as DALL-E 2 or Imagen, which operate in the high-dimensional Image Space, Stable Diffusion generates high-quality images in a compressed Latent Space, making it computationally efficient.

How does Stable Diffusion work for text-to-image generation?

Stable Diffusion uses Forward Diffusion, Reverse Diffusion, and a Variational Autoencoder (VAE) to generate images. When the user provides a text prompt, the model converts the text into numerical tokens using a CLIP tokenizer and then into embeddings. These embeddings guide the Noise Predictor (U-Net) as it removes noise from the latent image representation. The VAE Decoder then converts this latent representation into a high-resolution image.

What are the key business benefits of using Stable Diffusion?

Stable Diffusion offers many business benefits, including:

- Cost Efficiency: Reduces the need for expensive photo shoots or design resources.

- Creative Flexibility: Generates diverse visual styles (e.g., 3D, photography, painting).

- Customizability: Can be fine-tuned for niche-specific applications.

- Speed and Performance: Operates efficiently on consumer GPUs, making it accessible to businesses of many sizes.

- Broad Representation: Generates images with diverse features, skin tones, and cultural identities.

What are the limitations of Stable Diffusion, and how can they be addressed?

While Stable Diffusion is powerful, it has some limitations:

- Image Quality Variability: Output quality can vary based on prompts and image size.

- Bias in Training Data: May produce biased or inaccurate results due to data issues.

- Language Limitations: Works best with English, struggles with other languages.

These can be addressed with fine-tuning methods like LoRA or DreamBooth to improve performance and adaptability.

What industries can benefit from Stable Diffusion, and how?

Stable Diffusion has applications across various industries, including:

- Marketing and Advertising: Creating visuals, social media posts, and product images.

- Fashion and Apparel: Designing variations and digital prototypes.

- Entertainment and Media: Generating concept art, storyboards, and animations.

- Product Design: Visualizing concepts and speeding up ideation.

- Medical Research: Converting data into visuals and enhancing medical imaging.

How can businesses integrate Stable Diffusion into their workflows?

Businesses can integrate Stable Diffusion into their workflows in several ways, including:

- Custom AI Solutions: Partnering with developers to fine-tune for specific use cases.

- API Integration: Using platforms like DreamStudio or NightCafe for access.

- In-House Development: Building tools with Stable Diffusion’s open-source code.

- Training and Adoption: Educating teams on effective use for creative tasks.

What are some real-world use cases for Stable Diffusion?

- Art & Design – AI-generated artwork and graphic design.

- Marketing & Ads – Unique visuals for branding and campaigns.

- Data Augmentation – Creating synthetic images for AI training.

- Makeup Transfer – Applying realistic makeup styles to images.