What is LLM Fine-Tuning and Why is it Needed for Custom AI Software Development?

While pre-trained foundation language models perform well on general NLP tasks, they often lack the specificity and detail required for your business-specific tasks. Fine-tuning means further training these pre-trained LLMs on your business-specific data so they perform better on domain- or niche-specific tasks.

Consider an LLM-based AI solution for Legal Contract Review and Compliance Monitoring. The foundation LLM used to build this solution must be fine-tuned so it understands the nuanced language, terminology, and structure of legal documents, along with other essential requirements.

An LLM trained on risky contracts, one that can categorize clauses after understanding complex legal language, is better at detecting risks or unusual clauses in contracts. In comparison, a foundation LLM pre-trained on general content is more likely to hallucinate when it encounters legal nuances and to provide incorrect or irrelevant responses. That’s why further training your chosen foundation LLM on niche data is important for developing personalized AI solutions tailored to your business needs.

When Fine-Tuning an LLM Drives the Best Business Outcomes

To Perform Custom Tasks: As the example above shows, an LLM must be fine-tuned on custom data to perform specific tasks that require domain or niche knowledge. Fine-tuning also helps improve LLM performance on tasks like Sentiment Analysis, Question Answering, Text Summarization, Customer Support, Content Generation, Named-Entity Recognition, Paraphrase Identification, and many more.

To Improve Accuracy: Consider a common example, a customer service chatbot. A general, pre-trained language model handles customer queries reasonably well because it understands general language patterns, grammar, and vocabulary.

Suppose a medical equipment company uses this LLM to respond to customer queries.

Customer: “What is the warranty on your X-ray machine?”

General LLM: “These products come with a 12-month warranty. Please check the product documentation or contact customer service for specific information.”

While this response is reasonable, it's also generic.

The company would rather respond with something like this: “Our Alpha Series X-ray machines come with an extended 18-month warranty that starts from the date of installation and covers defects in materials and workmanship. Please refer to our warranty guide or contact customer support for further assistance.”

The pre-trained LLM learns to respond with this level of detail and accuracy once it is fine-tuned on the company’s customer service transcripts, product manuals, company policies and guidelines, and other relevant in-house content.
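As a minimal sketch of how such in-house content might be prepared, the snippet below turns question-and-answer pairs into JSON Lines (one JSON object per line) using a chat-style `messages` layout, which is the format OpenAI’s chat fine-tuning API expects. Other frameworks define their own, often similar, schemas, so check your tool’s documentation. The system prompt and the output file name are hypothetical placeholders.

```python
import json

# Hypothetical system prompt; replace it with your own brand and policy wording.
SYSTEM_PROMPT = "You are a support assistant for a medical equipment company."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one customer question and the approved answer in a chat-style record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize (question, answer) pairs as JSON Lines: one JSON object per line."""
    return "".join(
        json.dumps(to_chat_example(q, a), ensure_ascii=False) + "\n" for q, a in pairs
    )


pairs = [
    (
        "What is the warranty on your X-ray machine?",
        "Our Alpha Series X-ray machines come with an extended 18-month warranty "
        "that starts from the date of installation and covers defects in materials "
        "and workmanship. Please refer to our warranty guide or contact customer "
        "support for further assistance.",
    ),
]

jsonl_text = to_jsonl(pairs)
# Hypothetical output file name.
with open("support_finetune.jsonl", "w", encoding="utf-8") as f:
    f.write(jsonl_text)
```

In practice, hundreds or thousands of such pairs drawn from real transcripts and manuals would go into the file, ideally after the sanitization step discussed in the next section.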

To Ensure Data Security and Privacy: Ensuring the security and confidentiality of data is critical for businesses handling sensitive information in industries like finance, healthcare, and legal. Very large language models may have already learned sensitive information from their extensive training on sources scraped from the internet. Fine-tuning helps teach the model what to share and what not to share. Completing targeted fine-tuning rounds in a timely manner also helps the model keep pace with regulatory changes and potential security threats.

You can fine-tune LLMs in controlled environments, such as on-premise infrastructure or a secure cloud, to keep your data compliant with regulations and ensure it is not shared with third parties. Use encryption and access control to prevent your proprietary data from leaking. To keep your fine-tuned LLM from sharing sensitive information when responding to customer questions, you can apply data sanitization techniques or privacy filters.
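A privacy filter can be as simple as pattern-based redaction applied to training data before fine-tuning. The sketch below is illustrative only: the regular expressions are assumptions that catch common email, US-style phone, and US Social Security number formats, and real pipelines typically pair rules like these with dedicated PII-detection tools and human review.

```python
import re

# Illustrative patterns only (assumptions, not a complete PII taxonomy).
# Order matters: each pattern is applied in turn to the already-redacted text.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    # Optional country code, then a 3-3-4 digit number with optional separators.
    "PHONE": re.compile(r"(?:\+?\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each PII pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or +1 555-123-4567. SSN 123-45-6789."
print(redact(sample))
```

Notice that the customer’s name survives redaction; names and other free-form identifiers usually need named-entity recognition rather than regular expressions.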

Now that we’ve established the critical role of fine-tuning your general LLM to ensure the accuracy of your custom AI solutions, let’s understand the most effective methods to do so.

Mastering LLM Fine-Tuning Methods: Choosing the Right Approach

Fine-tuning involves training a pre-trained LLM on a new dataset, adjusting its parameters to improve its performance on a specific task. However, traditional fine-tuning is sometimes not even feasible, given the enormous size of LLMs: billions of parameters that can take up hundreds of GBs. It requires massive computational power, storage, time, and budget, making it impractical for many teams.
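To put rough numbers on this, a commonly cited rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: 16-bit weights and gradients (2 bytes each) plus 32-bit master weights and two Adam moment estimates (12 bytes). The sketch below applies that assumption and ignores activations, which add more on top, so treat the results as lower-bound estimates rather than measurements.

```python
def weights_only_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory just to hold the weights, assuming 16-bit (2-byte) storage."""
    return num_params * bytes_per_param / 1e9


def full_finetune_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Rough memory for full fine-tuning with mixed-precision Adam.

    Assumed 16 bytes per parameter:
      2 (16-bit weights) + 2 (16-bit gradients)
      + 4 (32-bit master weights) + 8 (two 32-bit Adam moments).
    Activations are NOT included, so real usage is higher.
    """
    return num_params * bytes_per_param / 1e9


for billions in (7, 70):
    n = billions * 1e9
    print(
        f"{billions}B parameters: ~{weights_only_gb(n):.0f} GB to store the weights, "
        f"~{full_finetune_gb(n):.0f} GB to fully fine-tune"
    )
```

Under this assumption, a 7B-parameter model needs roughly 14 GB just for its weights but about 112 GB for full fine-tuning, more than a single 80 GB GPU can hold.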

Broadly, fine-tuning methods fall into two categories:

- Full Fine-tuning

- Parameter Efficient Fine-tuning (PEFT)

While Full Fine-tuning adjusts all the parameters of the LLM, PEFT freezes most of the original weights and trains only a small number of parameters, either a strategically selected subset or a small set of newly added ones, to improve performance on a specific task. This way, the LLM retains its previously learned knowledge while specializing in a niche task using domain data. It’s like graduating in software engineering and later specializing in AI engineering through an additional course.

This approach reduces time, costs, computational power, memory usage, and storage requirements, lowering the barrier to entry for smaller, customized AI solutions. Because PEFT is the more practical fine-tuning approach for most teams, we’ll focus on it and discuss two PEFT techniques: LoRA and QLoRA.
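The core idea of PEFT, updating only a small part of the model while the rest stays frozen, can be sketched with a toy model in plain Python. This shows the simplest flavour, training a selected subset of existing parameters; LoRA and QLoRA instead add small new parameters, as described in the following sections. The parameter group names and sizes here are hypothetical.

```python
# Hypothetical toy model: each named parameter group is a flat list of floats.
params = {
    "embeddings": [0.5] * 1000,
    "attention": [0.2] * 800,
    "task_head": [0.0] * 10,  # small, task-specific part we choose to train
}
trainable = {"task_head"}  # every other group stays frozen


def sgd_step(params, grads, trainable, lr=0.1):
    """Apply a plain SGD update to trainable groups only; frozen groups are copied unchanged."""
    updated = {}
    for name, values in params.items():
        if name in trainable:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)
    return updated


def trainable_fraction(params, trainable):
    """Share of all parameters that the optimizer is allowed to change."""
    total = sum(len(v) for v in params.values())
    return sum(len(params[name]) for name in trainable) / total


# Dummy gradients of 1.0 everywhere, just to show which groups actually move.
grads = {name: [1.0] * len(values) for name, values in params.items()}
new_params = sgd_step(params, grads, trainable)
print(f"Trainable share: {trainable_fraction(params, trainable):.2%}")
```

Because gradients for frozen groups are never applied (and, in real frameworks, often never computed or stored), memory and compute scale with the small trainable part rather than the whole model.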

1. LoRA (Low-Rank Adaptation)

Low-Rank Adaptation (LoRA), introduced in 2021, is a fine-tuning method designed to make minimal changes to an LLM. Instead of updating the original weights directly, it freezes them and trains a small number of additional parameters in the layers chosen for adaptation, typically the attention projection matrices.

Specifically, LoRA represents the update to a large pre-trained weight matrix as the product of two much smaller, low-rank matrices, and only these small matrices are trained. Together they form the LoRA Adaptor, which is small in size (often just a few MBs).
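In the notation of the original LoRA paper, a frozen weight matrix W0 of shape d x k is adapted as W0 + (alpha / r) * B * A, where B is d x r, A is r x k, and the rank r is much smaller than d and k. The pure-Python sketch below uses arbitrarily chosen toy sizes to show the forward pass, then compares trainable parameter counts for a hypothetical 4096 x 4096 matrix at rank 8. Real projects would use a framework such as Hugging Face PEFT rather than hand-written loops.

```python
import random


def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


d, k, r = 6, 4, 2  # toy sizes: W0 is d x k; adapter rank r is smaller than d and k
alpha = 4          # LoRA scaling hyperparameter; the update is scaled by alpha / r

random.seed(0)
W0 = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]    # frozen pre-trained weights
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, trainable, random init
B = [[0.0] * r for _ in range(d)]                                  # d x r, trainable, zero init


def lora_forward(x):
    """h = W0 x + (alpha / r) * B (A x); W0 itself is never modified."""
    base = matvec(W0, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def param_counts(d, k, r):
    """Trainable parameters: a full update of W0 vs. the LoRA factors B and A."""
    return d * k, r * (d + k)


full, lora = param_counts(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

Because B starts at zero, the adapted layer initially behaves exactly like the original one, and training only ever moves the small A and B matrices. For the 4096 x 4096 case, LoRA trains 65,536 parameters instead of 16,777,216, about 0.39%.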

The output of this fine-tuning method is therefore the original LLM plus a small LoRA Adaptor, whereas Full Fine-tuning produces an entirely new, full-size version of the LLM that consumes a lot of memory and storage. Another benefit is that different LoRA Adaptors, each fine-tuned for a different use case, can share the same original LLM, reducing memory requirements when executing different tasks. The LLM retains its original training while gaining new skills from the fine-tuning data.
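A quick back-of-the-envelope calculation shows why adapters are measured in megabytes and why sharing one base model pays off. The configuration below is hypothetical: a 7B-parameter model with 32 layers and a hidden size of 4096, LoRA applied to two 4096 x 4096 attention projections per layer at rank 8, and everything stored in 16-bit precision. The task names are illustrative.

```python
BYTES_PER_PARAM = 2  # assumption: base weights and adapters stored in 16-bit precision


def lora_adapter_params(hidden, rank, num_layers, matrices_per_layer):
    """LoRA parameter count when every adapted matrix is hidden x hidden."""
    return num_layers * matrices_per_layer * rank * (hidden + hidden)


adapter_params = lora_adapter_params(hidden=4096, rank=8, num_layers=32, matrices_per_layer=2)
adapter_mb = adapter_params * BYTES_PER_PARAM / 1e6
base_gb = 7e9 * BYTES_PER_PARAM / 1e9

tasks = ["contract_review", "support_chat", "summarization"]  # hypothetical use cases
full_copies_gb = len(tasks) * base_gb                        # one fully fine-tuned model per task
shared_base_gb = base_gb + len(tasks) * adapter_mb / 1e3     # one shared base + one adapter per task

print(f"Adapter size: ~{adapter_mb:.1f} MB")
print(f"{len(tasks)} fully fine-tuned copies: ~{full_copies_gb:.1f} GB")
print(f"Shared base + {len(tasks)} adapters: ~{shared_base_gb:.1f} GB")
```

Under these assumptions each adapter is about 8.4 MB, so three task-specific adapters add only about 25 MB to a roughly 14 GB base model, compared with roughly 42 GB for three full copies.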

2. QLoRA (Quantized Low-Rank Adaptation)

QLoRA is an extension of LoRA that quantizes the frozen weights of the base LLM. In simple terms, QLoRA compresses the storage of the base model’s parameters from the 16- or 32-bit formats typically used with LoRA down to just 4 bits. By shrinking the memory footprint this way, it makes it possible to fine-tune large LLMs on a single GPU (the original QLoRA paper reports fine-tuning a 65B-parameter model on a single 48 GB GPU), saving on computational power, storage infrastructure, cost, and time. Because the weights are stored in low precision but dequantized to a higher-precision format for computation, QLoRA largely preserves the accuracy and performance of your LLM while keeping its memory footprint small.
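To make the idea of 4-bit storage concrete, the sketch below applies a simplified block-wise, symmetric 4-bit quantization: each block of weights gets one scale factor, and each weight is stored as an integer code from -8 to 7. This is not QLoRA’s actual scheme, which uses a 4-bit NormalFloat (NF4) data type and also quantizes the scale factors (double quantization); the block size of 64 and the absmax scaling are assumptions chosen for illustration.

```python
import random


def quantize_4bit(values, block_size=64):
    """Block-wise absmax quantization to 4-bit signed integer codes (simplified, not NF4)."""
    codes, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        # Map the largest magnitude in the block to 7; fall back to 1.0 for an all-zero block.
        scale = max(abs(v) for v in block) / 7 or 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(v / scale))) for v in block)
    return codes, scales


def dequantize_4bit(codes, scales, block_size=64):
    """Recover approximate weights for computation."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]


random.seed(42)
weights = [random.gauss(0, 0.02) for _ in range(256)]  # stand-in for frozen base weights
codes, scales = quantize_4bit(weights)
restored = dequantize_4bit(codes, scales)
max_error = max(abs(w - rw) for w, rw in zip(weights, restored))
print(f"{len(scales)} blocks, max reconstruction error: {max_error:.5f}")
```

Each weight now needs 4 bits instead of 16 or 32, plus a small per-block overhead for its scale factor. The price is a bounded rounding error (at most half a scale step per weight in this scheme), which is why QLoRA keeps the LoRA Adaptor itself in higher precision and dequantizes the base weights on the fly for each computation.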

LoRA vs QLoRA: Choosing the Best Fine-Tuning Method for Your Needs

Both LoRA and QLoRA are widely used LLM fine-tuning techniques. Here are their key differences and when to use each:

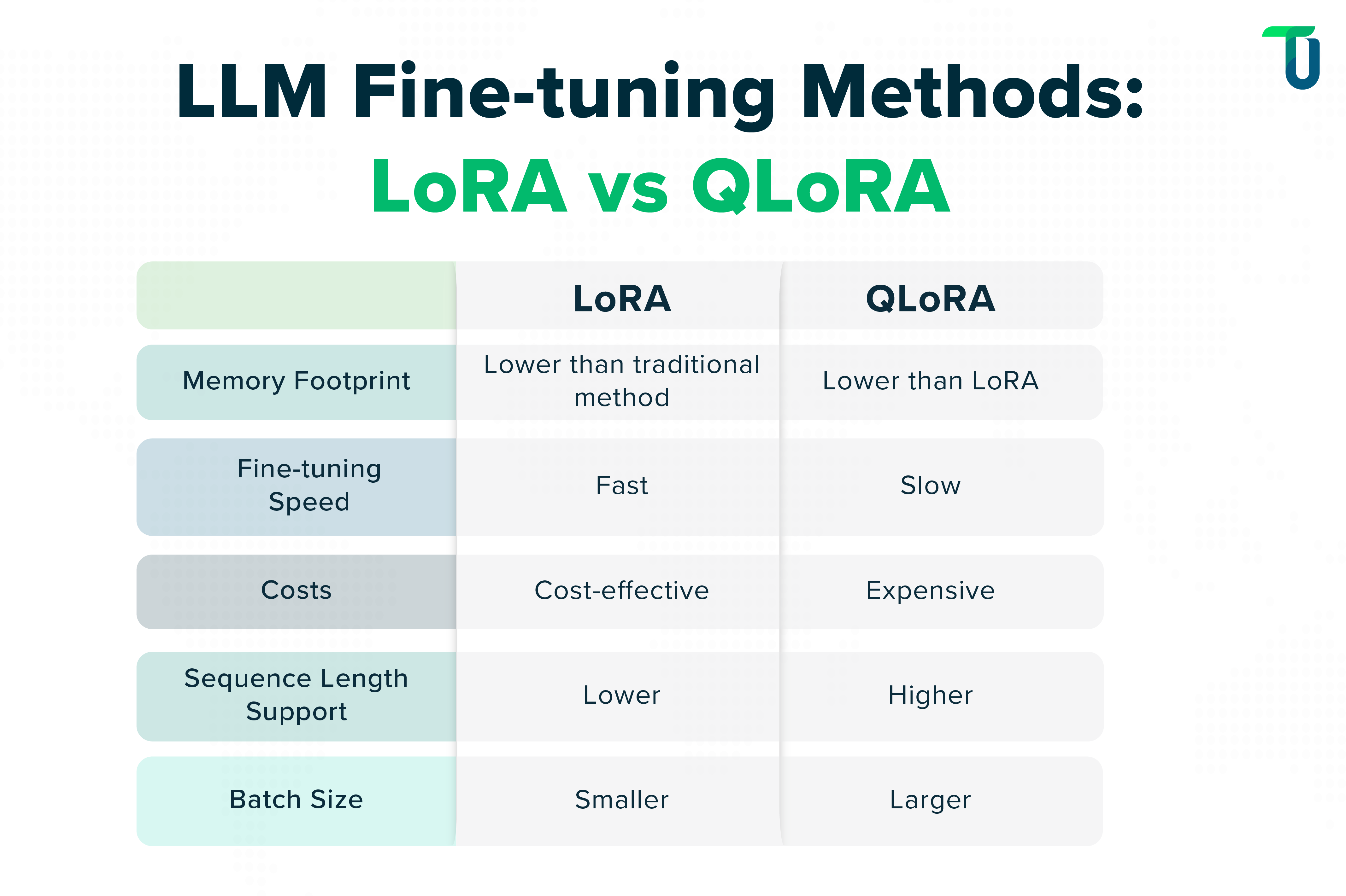

Memory Footprint: LoRA significantly reduces memory footprint and storage requirements compared to traditional fine-tuning methods. QLoRA reduces the memory footprint further through quantization. We recommend QLoRA when minimizing memory footprint and storage requirements is your top priority while fine-tuning a pre-trained LLM.

LLM Fine-tuning Speed: An estimate by Google Cloud suggests that fine-tuning an LLM with LoRA is 66% faster than with QLoRA. This is because QLoRA’s extra quantization and dequantization steps add computational overhead, even though they reduce the memory footprint. So if fast fine-tuning is your primary requirement, choose LoRA.

Costs: Because QLoRA adds quantization overhead, LoRA is approximately 40% less expensive, making it the more cost-effective choice for fine-tuning your foundation LLM.

Sequence Length Support: As NLP tasks grow more complex and demanding, so does the length of the sequences that must be processed during fine-tuning. Because QLoRA consumes less GPU memory, it supports longer maximum sequence lengths, making it better suited for fine-tuning very large language models on complex tasks.

Batch Size: QLoRA supports larger batch sizes than LoRA, so it’s the right choice when you want to scale LLM fine-tuning without adding hardware resources.
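The memory, sequence-length, and batch-size differences are connected: whatever GPU memory the base weights do not occupy is left for activations, which grow with the number of tokens processed per step. The sketch below makes that explicit under deliberately simplified, hypothetical assumptions: a 24 GB GPU, a 7B-parameter model stored at 16 bits (LoRA) or 4 bits (QLoRA), a fixed 2 GB for adapters, optimizer state, and framework overhead, and a made-up activation cost of 0.5 MB per token that scales linearly. Real activation memory depends on the model, the attention implementation, and techniques such as gradient checkpointing, so measure on your own hardware.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the frozen base weights only (ignores quantization constants)."""
    return num_params * bits_per_param / 8 / 1e9


def token_budget(gpu_gb, weights_gb, overhead_gb, activation_mb_per_token):
    """Tokens (batch size x sequence length) that fit per step in the remaining memory."""
    free_gb = gpu_gb - weights_gb - overhead_gb
    if free_gb <= 0:
        return 0
    return int(free_gb * 1e3 / activation_mb_per_token)


# Hypothetical numbers for illustration only.
GPU_GB, OVERHEAD_GB, ACT_MB_PER_TOKEN, SEQ_LEN = 24, 2, 0.5, 2048
NUM_PARAMS = 7e9

for method, bits in (("LoRA", 16), ("QLoRA", 4)):
    weights = weight_memory_gb(NUM_PARAMS, bits)
    tokens = token_budget(GPU_GB, weights, OVERHEAD_GB, ACT_MB_PER_TOKEN)
    print(
        f"{method}: weights ~{weights:.1f} GB, ~{tokens:,} tokens per step, "
        f"max batch size {tokens // SEQ_LEN} at sequence length {SEQ_LEN}"
    )
```

With these made-up figures, QLoRA leaves room for roughly 37,000 tokens per step versus 16,000 for LoRA, headroom that can be spent on longer sequences, larger batches, or both.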

You can fine-tune both Open Source and Closed Source foundation LLMs, provided you use the right tools, methods, and techniques. For Open Source models, tools like LLaMA Factory and Unsloth (both available on GitHub) help fine-tune a range of language models, including Llama, Phi, and Gemma. For Closed Source models like OpenAI’s GPT models, you can use the provider’s own platform, which offers built-in fine-tuning features.

Key Takeaway: Enhance Custom AI Solutions with LLM Fine-Tuning

Fine-tuning educates your chosen foundation LLM on the nuances of your niche or domain and helps it perform better on the specific tasks your AI solution addresses. Combined with secure infrastructure and data sanitization, it also helps you address data security and compliance challenges. So, even if your business deals with sensitive customer information, you can still build a secure AI solution that delivers accurate outcomes using LLM fine-tuning.

As an experienced custom AI services and solutions provider, we have helped several businesses build custom solutions tailored to their niche business requirements. Please check out our case studies demonstrating how we have delivered successful solutions to our clients.
