Apache Airflow: The Ultimate Tool for Data Pipeline Management

Apache Airflow is an open-source platform for programmatically authoring, scheduling, and monitoring workflows. It provides a robust framework for orchestrating complex data pipelines. In Airflow, users define tasks and their dependencies in Python as Directed Acyclic Graphs (DAGs). The web interface can then display each DAG as a visual representation of the workflow structure.

At its core, Apache Airflow automates the scheduling and execution of data-related tasks. It offers a single interface for defining, running, and monitoring workflows. Each workflow is a DAG, so Airflow runs tasks in dependency order and can run tasks that do not depend on each other in parallel.
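"Dependency order" and "parallel when possible" can be made concrete with a small plain-Python sketch. This is not Airflow code. It uses the standard-library `graphlib` module to group a hypothetical pipeline's tasks into waves. Tasks in the same wave do not depend on each other, so a scheduler could run them concurrently. The task names are illustrative assumptions.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key lists the tasks it depends on (its upstream tasks).
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "validate": {"transform"},
    "load": {"validate"},
}

def execution_waves(graph):
    """Group tasks into waves; each task's upstream tasks finish in earlier waves."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are all done
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves

for number, wave in enumerate(execution_waves(dependencies), start=1):
    print(f"wave {number}: {wave}")
```

Here the two extract tasks share the first wave because neither depends on the other. Every later task must wait for the wave before it.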

Several features make the platform effective for data pipeline management. These include flexible scheduling, task dependency management, error handling through retries and failure notifications, and a web-based user interface for monitoring the progress of workflows.

Overall, Apache Airflow empowers users to design, automate, and efficiently manage their data pipelines. Its versatility and powerful features make it a valuable tool for orchestrating complex workflows, ensuring seamless data flow, and enabling efficient data integration and processing.

Key Features of Apache Airflow for Workflow Automation

DAGs (Directed Acyclic Graphs):

Apache Airflow uses DAGs to represent workflows as a collection of tasks and their dependencies. Because this structure is explicit, the data pipeline is easy to visualize and understand, which makes it simpler to manage and troubleshoot.
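"Acyclic" means that no task can depend, directly or indirectly, on itself. If one did, no valid order for running the tasks would exist. Airflow rejects DAGs that contain cycles. The plain-Python sketch below only mimics that check with the standard library, and the task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

def is_acyclic(graph):
    """Return True if the dependency graph (task -> upstream tasks) has no cycle."""
    try:
        TopologicalSorter(graph).prepare()  # prepare() detects cycles
        return True
    except CycleError:
        return False

valid = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
# Hypothetical mistake: "extract" now also waits on "load", which closes a loop.
invalid = {"extract": {"load"}, "transform": {"extract"}, "load": {"transform"}}

print(is_acyclic(valid))    # True
print(is_acyclic(invalid))  # False
```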

Task Dependency Management:

Apache Airflow lets you declare dependencies between tasks so that, by default, each task runs only after its upstream tasks have succeeded. Tasks that do not depend on one another can run in parallel, which shortens the overall runtime of the pipeline.
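In Airflow, dependencies are usually declared with the bitshift operator, for example `extract >> transform >> load`. To show how that syntax can build a dependency graph, here is a minimal sketch built around a hypothetical `Task` class. It is not Airflow's implementation and only illustrates the idea. A list on either side of `>>` fans out to, or fans in from, several tasks.

```python
class Task:
    """Hypothetical stand-in for an Airflow operator, for illustration only."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()  # task_ids that must finish before this task runs

    def __rshift__(self, other):
        # `self >> other`: run self before other (other may be a list of tasks).
        targets = other if isinstance(other, list) else [other]
        for target in targets:
            target.upstream.add(self.task_id)
        return other  # returning the right-hand side allows chaining: a >> b >> c

    def __rrshift__(self, other):
        # Called for `[a, b] >> self`, because lists do not define `>>`.
        for task in other:
            self.upstream.add(task.task_id)
        return self

extract, clean, enrich, load = Task("extract"), Task("clean"), Task("enrich"), Task("load")

# clean and enrich both wait for extract and can run in parallel; load waits for both.
extract >> [clean, enrich] >> load

print(sorted(load.upstream))  # ['clean', 'enrich']
```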

Scheduling and Monitoring:

Apache Airflow's scheduler lets users define when and how often each workflow (DAG) runs, for example hourly, daily, or on a cron expression. Its web-based user interface shows the status and progress of every run, so problems can be spotted and addressed early.
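In Airflow 2.4 and later, a DAG's timing is configured with arguments such as `schedule`, `start_date`, and `catchup`. The plain-Python sketch below is a simplified model of that behavior and not Airflow's own timetable logic. It assumes a fixed interval in which the run for a data interval is triggered once that interval has ended. With `catchup=False`, only the most recent completed interval is returned.

```python
from datetime import datetime, timedelta

def due_intervals(start_date, interval, now, catchup=True):
    """Return the (start, end) data intervals whose end has passed by `now`.

    Simplified model: a run for an interval is triggered once that interval ends.
    """
    intervals = []
    start = start_date
    while start + interval <= now:
        intervals.append((start, start + interval))
        start += interval
    if not catchup:
        return intervals[-1:]  # skip the backlog; keep only the latest interval
    return intervals

start = datetime(2024, 1, 1)
now = datetime(2024, 1, 4, 6, 0)
for interval_start, interval_end in due_intervals(start, timedelta(days=1), now):
    print(interval_start.date(), "->", interval_end.date())
```

With a daily interval starting on 1 January and "now" early on 4 January, three intervals have completed. Disabling catchup leaves only the 3 to 4 January interval.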

What is a Data Pipeline?

A data pipeline is a process that extracts, transforms, and loads (ETL) data from various sources into a unified format for analysis or storage. It consists of a series of related tasks that each perform a specific operation on the data, such as extraction, transformation, validation, or loading. Data pipelines play a vital role in keeping information flowing reliably for timely data processing and analytics.
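The four stages named above can be sketched in a few lines of standard-library Python. The CSV content, column names, and table name are hypothetical. In an Airflow pipeline, each function could become its own task, but this sketch simply calls them in order.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in a real pipeline this would come from a file, API, or database.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,5.00,USD
3,,usd
"""

def extract(text):
    """Extract: parse CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: convert types and normalize currency codes to upper case."""
    return [
        {
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]) if row["amount"] else None,
            "currency": row["currency"].upper(),
        }
        for row in rows
    ]

def validate(rows):
    """Validate: drop rows with a missing amount (a real pipeline might quarantine them)."""
    return [row for row in rows if row["amount"] is not None]

def load(rows, conn):
    """Load: write the clean rows into a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (:order_id, :amount, :currency)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(validate(transform(extract(RAW_CSV))), conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
```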

Essential Features of a High-Performance Data Pipeline

Data Integration:

Data pipelines let organizations combine data from multiple sources, such as databases, APIs, and file systems. They move data consistently across platforms, which helps keep it accurate.
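Combining sources usually comes down to joining records on a shared key. The sketch below is a plain-Python illustration with hypothetical CRM and billing records. It joins invoices to customers and reports unmatched invoices instead of silently dropping them, since hidden mismatches are a common way integrated data loses accuracy.

```python
# Hypothetical records from two sources: a CRM API and a billing database.
crm_customers = [
    {"customer_id": 1, "name": "Acme"},
    {"customer_id": 2, "name": "Globex"},
]
billing_invoices = [
    {"customer_id": 1, "total": 120.0},
    {"customer_id": 1, "total": 80.0},
    {"customer_id": 3, "total": 50.0},  # no matching CRM record
]

def combine(customers, invoices):
    """Join invoices to customers by customer_id and collect unmatched invoices."""
    by_id = {customer["customer_id"]: customer for customer in customers}
    combined, unmatched = [], []
    for invoice in invoices:
        customer = by_id.get(invoice["customer_id"])
        if customer is None:
            unmatched.append(invoice)  # surface for reconciliation
        else:
            combined.append({**customer, **invoice})
    return combined, unmatched

combined, unmatched = combine(crm_customers, billing_invoices)
print(len(combined), "joined,", len(unmatched), "unmatched")  # 2 joined, 1 unmatched
```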

Near-Real-Time Data Processing:

Apache Airflow is a batch workflow orchestrator rather than a streaming engine. However, pipelines built with it can run at short intervals or be triggered when new data arrives, so they can process streaming data in small batches. This capability is valuable in scenarios that need timely insights and quick action.
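Because Airflow itself runs in batches, a common way to handle streaming data with it is incremental micro-batching. Each scheduled run processes only the events that arrived after a stored watermark. The plain-Python sketch below shows that pattern with hypothetical events. Where the watermark is persisted between runs is left as an assumption.

```python
from datetime import datetime

# Hypothetical event feed; each event carries an arrival timestamp.
events = [
    {"id": "a", "ts": datetime(2024, 1, 1, 10, 0)},
    {"id": "b", "ts": datetime(2024, 1, 1, 10, 4)},
    {"id": "c", "ts": datetime(2024, 1, 1, 10, 7)},
]

def process_batch(available_events, watermark):
    """Process events newer than `watermark`; return their ids and the new watermark."""
    new = [event for event in available_events if event["ts"] > watermark]
    new_watermark = max((event["ts"] for event in new), default=watermark)
    return [event["id"] for event in new], new_watermark

# First run: only events a and b have arrived so far.
ids, watermark = process_batch(events[:2], datetime.min)
# Next run: event c has arrived since; a and b are not processed again.
ids2, watermark2 = process_batch(events, watermark)
print(ids, ids2)  # ['a', 'b'] ['c']
```

One design caveat: a timestamp watermark skips events that arrive late with an older timestamp. Pipelines that expect late data often reprocess a small overlap window instead.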

Automated Data Integration:

Data pipelines automate the data integration process, reducing manual effort and minimizing errors, particularly in complex financial workflows such as sales tax reporting automation using a Stripe app. By automating data extraction, transformation, and loading tasks, organizations can save time, improve efficiency, and maintain data integrity.

Experience Seamless Data Integration - Contact TenUp Today!

Ready to embark on a seamless data integration journey? Contact TenUp to leverage their expertise in Apache Airflow and unlock the benefits of streamlined data pipelines. Experience the power of real-time data processing, automated integration, and scalable solutions.


As one of the many powerful cloud data engineering tools, Apache Airflow plays a crucial role in ensuring that data pipelines are scalable, automated, and efficient. These tools are designed to support organizations in managing vast amounts of data with ease. Learn more about the broader benefits of cloud data engineering tools for scalable data processing and how they complement platforms like Apache Airflow.

How to Build Scalable Data Pipelines Using Apache Airflow

-

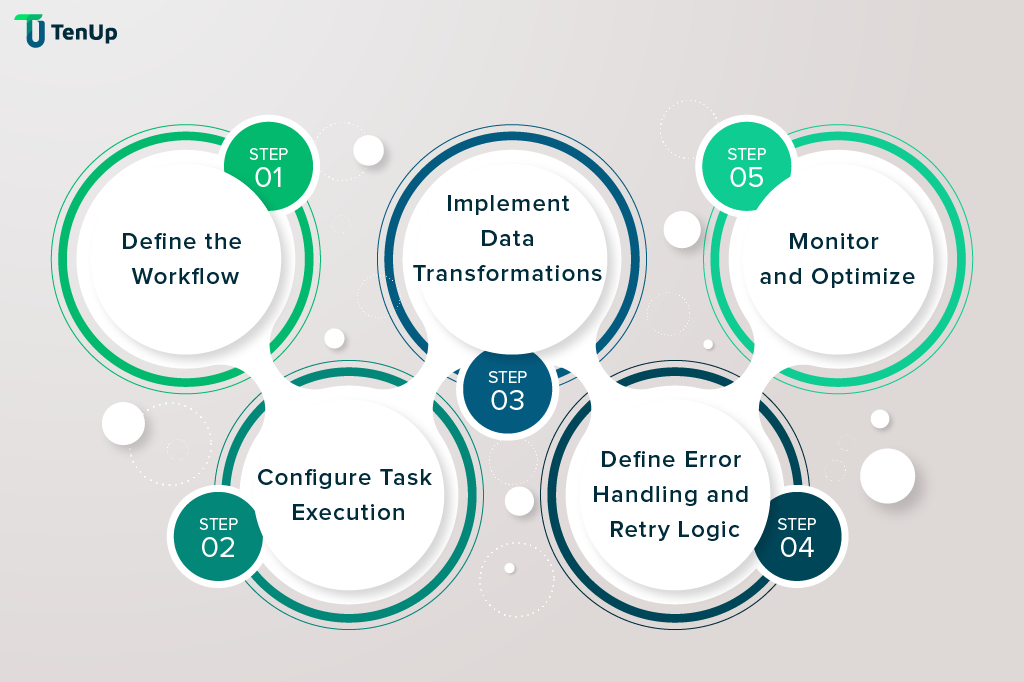

Define the Workflow:

Identify the tasks in the data pipeline and the dependencies between them, then express them as an Apache Airflow DAG. The web interface can render that DAG as a graph of the workflow.

Configure Task Execution:

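Specify how and when the pipeline runs. This covers the DAG's schedule and start date, plus task-level settings such as retries, timeouts, and resource limits (for example, assigning resource-heavy tasks to a pool with only a few slots). Airflow's scheduler then starts each task once its dependencies are met and a slot is available.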
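In Airflow, settings shared by every task are commonly passed to the DAG as `default_args`, and individual tasks can override them. The plain-Python sketch below only models that merge rule. The task names and the `big_memory` pool are hypothetical. `default_pool` is the name of Airflow's built-in pool.

```python
from datetime import timedelta

# Hypothetical defaults shared by every task, similar in spirit to Airflow's default_args.
DEFAULT_ARGS = {"retries": 2, "retry_delay": timedelta(minutes=5), "pool": "default_pool"}

# Hypothetical per-task overrides; anything not set here falls back to DEFAULT_ARGS.
TASK_OVERRIDES = {
    "extract": {},
    "heavy_transform": {"pool": "big_memory", "retries": 0},
    "load": {"retry_delay": timedelta(minutes=1)},
}

def resolve_task_config(defaults, overrides):
    """Merge defaults with per-task settings; task-level values take precedence."""
    return {task: {**defaults, **settings} for task, settings in overrides.items()}

config = resolve_task_config(DEFAULT_ARGS, TASK_OVERRIDES)
for task, settings in config.items():
    print(task, settings["retries"], settings["pool"], settings["retry_delay"])
```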

Implement Data Transformations:

Use Apache Airflow's operators (which define what a task does) and hooks (which connect to external systems such as databases and APIs) to implement data transformations, such as filtering, aggregating, or joining datasets. These transformations clean and prepare data for downstream analysis.
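The logic inside a transformation task can be quite small. The plain-Python sketch below filters hypothetical sales records to paid orders and then aggregates revenue per region. In practice, large transformations are often pushed down to a database or processing engine, with the Airflow task only triggering them.

```python
from collections import defaultdict

# Hypothetical cleaned sales records.
sales = [
    {"region": "EU", "amount": 120.0, "status": "paid"},
    {"region": "EU", "amount": 30.0, "status": "refunded"},
    {"region": "US", "amount": 200.0, "status": "paid"},
    {"region": "US", "amount": 50.0, "status": "paid"},
]

def paid_revenue_by_region(rows):
    """Filter to paid sales, then sum the amount per region."""
    totals = defaultdict(float)
    for row in rows:
        if row["status"] == "paid":  # filter step
            totals[row["region"]] += row["amount"]  # aggregate step
    return dict(totals)

print(paid_revenue_by_region(sales))  # {'EU': 120.0, 'US': 250.0}
```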

Define Error Handling and Retry Logic:

Incorporate error handling, such as retrying failed tasks or sending notifications when errors occur. Apache Airflow has built-in support for both: each task can set `retries` and `retry_delay`, and failure callbacks such as `on_failure_callback` can trigger alerts.
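To show what retry logic does, here is a plain-Python sketch of a retry loop with exponential backoff and a failure hook. It loosely mirrors the ideas behind Airflow's `retries`, `retry_delay`, `retry_exponential_backoff`, and `on_failure_callback` task settings, but it is not Airflow's implementation. The flaky task is hypothetical, and the delays are in seconds and kept tiny for the demo.

```python
import time

def run_with_retries(task, retries=3, retry_delay=0.01, backoff=2.0, on_failure=None):
    """Call task(); on an exception, retry up to `retries` more times with growing delays.

    If every attempt fails, call on_failure(exc) (e.g. send an alert) and re-raise.
    """
    delay = retry_delay
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == retries:
                if on_failure is not None:
                    on_failure(exc)
                raise
            time.sleep(delay)
            delay *= backoff  # exponential backoff between attempts

# Hypothetical flaky task that succeeds on its third call.
calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "ok"

print(run_with_retries(flaky_extract), "after", calls["n"], "attempts")  # ok after 3 attempts
```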

Monitor and Optimize:

Continuously monitor the performance of your data pipeline using Apache Airflow's web-based interface. Identify bottlenecks, optimize task execution, and fine-tune the workflow for improved efficiency.
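Airflow's web UI includes views such as the Gantt chart and task durations. A useful way to read those numbers is to find the critical path: the longest chain of dependent tasks, which sets the minimum runtime of a run. The plain-Python sketch below computes it from hypothetical average durations.

```python
from graphlib import TopologicalSorter

# Hypothetical average task durations in minutes, e.g. read off the UI.
durations = {"extract_a": 5, "extract_b": 12, "transform": 8, "validate": 2, "load": 4}
upstream = {
    "extract_a": set(),
    "extract_b": set(),
    "transform": {"extract_a", "extract_b"},
    "validate": {"transform"},
    "load": {"validate"},
}

def critical_path(durations, upstream):
    """Return (total_minutes, path) for the longest dependency chain in the DAG."""
    finish, best_prev = {}, {}
    for task in TopologicalSorter(upstream).static_order():  # upstream tasks come first
        prev = max(upstream[task], key=lambda t: finish[t], default=None)
        best_prev[task] = prev
        finish[task] = durations[task] + (finish[prev] if prev is not None else 0)
    end = max(finish, key=finish.get)
    total = finish[end]
    path = []
    while end is not None:
        path.append(end)
        end = best_prev[end]
    return total, path[::-1]

print(critical_path(durations, upstream))
# (26, ['extract_b', 'transform', 'validate', 'load'])
```

In this example, speeding up `extract_a` would not shorten the run at all, while any minute saved on `extract_b` would.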

Why Apache Airflow is the Best Choice for Data Pipelines

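Scalability:

Apache Airflow can distribute task execution across many workers (for example, with the Celery or Kubernetes executor), so pipelines can grow with data volumes and integration needs. Heavy data processing is often delegated to external systems such as databases or Spark, with Airflow orchestrating the work.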
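In Airflow, scaling out comes from the executor running task instances on multiple workers. Settings such as the core `parallelism` option and pools cap how many tasks run at once. The sketch below is only a local analogy using a thread pool, not Airflow code. It runs hypothetical independent partition tasks with at most three concurrent "slots" and records the peak concurrency.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

class ConcurrencyTracker:
    """Runs stand-in tasks and records how many were running at the same time."""

    def __init__(self):
        self.lock = threading.Lock()
        self.running = 0
        self.peak = 0

    def run(self, task_id):
        with self.lock:
            self.running += 1
            self.peak = max(self.peak, self.running)
        time.sleep(0.05)  # stand-in for real work, e.g. processing one partition
        with self.lock:
            self.running -= 1
        return task_id

tracker = ConcurrencyTracker()
partitions = [f"partition_{i}" for i in range(8)]  # hypothetical independent tasks

# max_workers plays the role of a slot limit such as a pool size.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(tracker.run, partitions))

print(len(results), "tasks done, peak concurrency", tracker.peak)
```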

Flexibility:

With its extensive collection of provider packages containing pre-built operators and hooks, Apache Airflow integrates with many data sources and tools, offering flexibility in designing data pipelines.

Reproducibility:

Apache Airflow supports reproducibility by recording every DAG run and task instance in its metadata database and by letting you rerun tasks for past intervals. This makes it easier to track and reproduce results.
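Rerunning a past interval (by clearing a task or running a backfill) only reproduces the same result if the task is idempotent. Running it twice for the same logical date must leave the target in the same state. The plain-Python sketch below shows one common way to achieve that: overwrite a date-keyed partition instead of appending to it. The in-memory `warehouse` dictionary is a hypothetical stand-in for a real table.

```python
from datetime import date

warehouse = {}  # hypothetical target: partition key -> rows

def load_partition(logical_date, rows):
    """Idempotent load: replace the partition for logical_date instead of appending."""
    warehouse[logical_date.isoformat()] = list(rows)

rows = [{"order_id": 1}, {"order_id": 2}]
load_partition(date(2024, 1, 1), rows)
load_partition(date(2024, 1, 1), rows)  # rerun or backfill of the same interval

print(len(warehouse["2024-01-01"]))  # 2, not 4: the rerun did not duplicate data
```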

Collaboration:

Because Airflow pipelines are defined as Python code, multiple teams can version, review, and test them with their usual development tools and work on them simultaneously. This promotes efficient collaboration and knowledge sharing.

Final Thoughts: Enhancing Data Pipeline Management with Apache Airflow

Apache Airflow has emerged as a powerful solution for efficient data pipeline management, empowering organizations to streamline their data integration processes and gain valuable insights. With its key features like DAGs, task dependency management, and scheduling capabilities, Apache Airflow simplifies the design, execution, and monitoring of complex data pipelines.

By building data pipelines with Apache Airflow, organizations can strengthen data-driven decision-making, process data on frequent or event-driven schedules, and automate data integration tasks for greater efficiency and accuracy.

Supercharge Your Data Integration Efforts - Connect with TenUp Now!

Ready to elevate your data integration capabilities? Partner with TenUp for expert data engineering services and unlock the full potential of Apache Airflow. We can help you harness the power of real-time data processing, automated workflows, and seamless integration. Learn more about our data engineering services here!