Infrastructure as Code (IaC) is one of the most important DevOps practices in use today, alongside continuous delivery. Immutable infrastructure, faster time-to-market, scalability, cost efficiency, and risk mitigation are some of the reasons IaC has gained popularity over the years. As production and delivery cycles have multiplied, IaC tools have transformed the way engineers design, test, and release applications.

Data is the driving force behind modern businesses, offering valuable insights and real-time control over critical business operations. However, this deluge of data is also changing the way businesses look at computing.

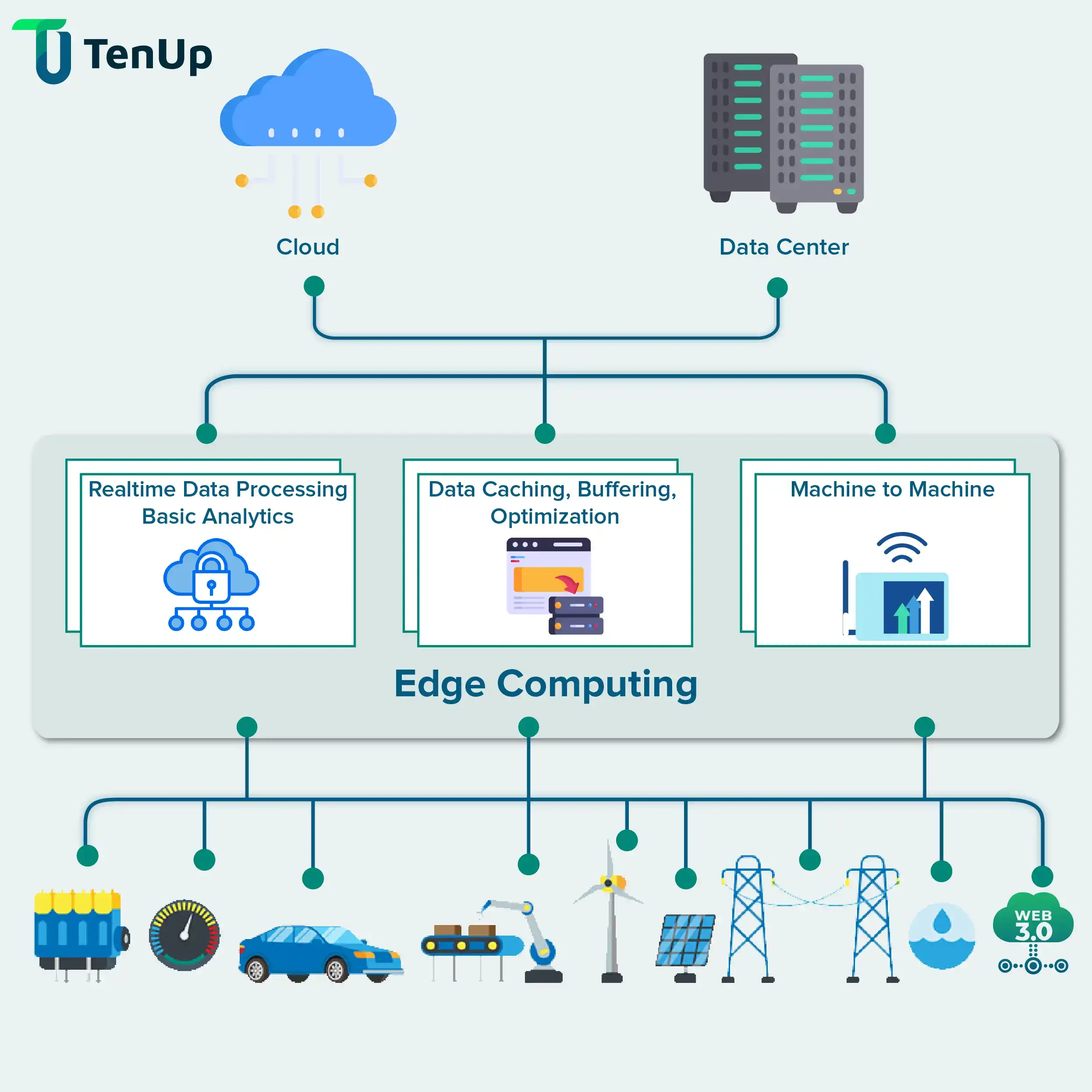

The traditional computing model was built on a centralized data center. But the internet as we know it struggles to handle the never-ending streams of real-world data. From availability and latency challenges to unpredictable disruptions and bandwidth limits, the centralized model faces many problems. Edge computing addresses the availability and latency problems by performing computation closer to the point where the data is collected.

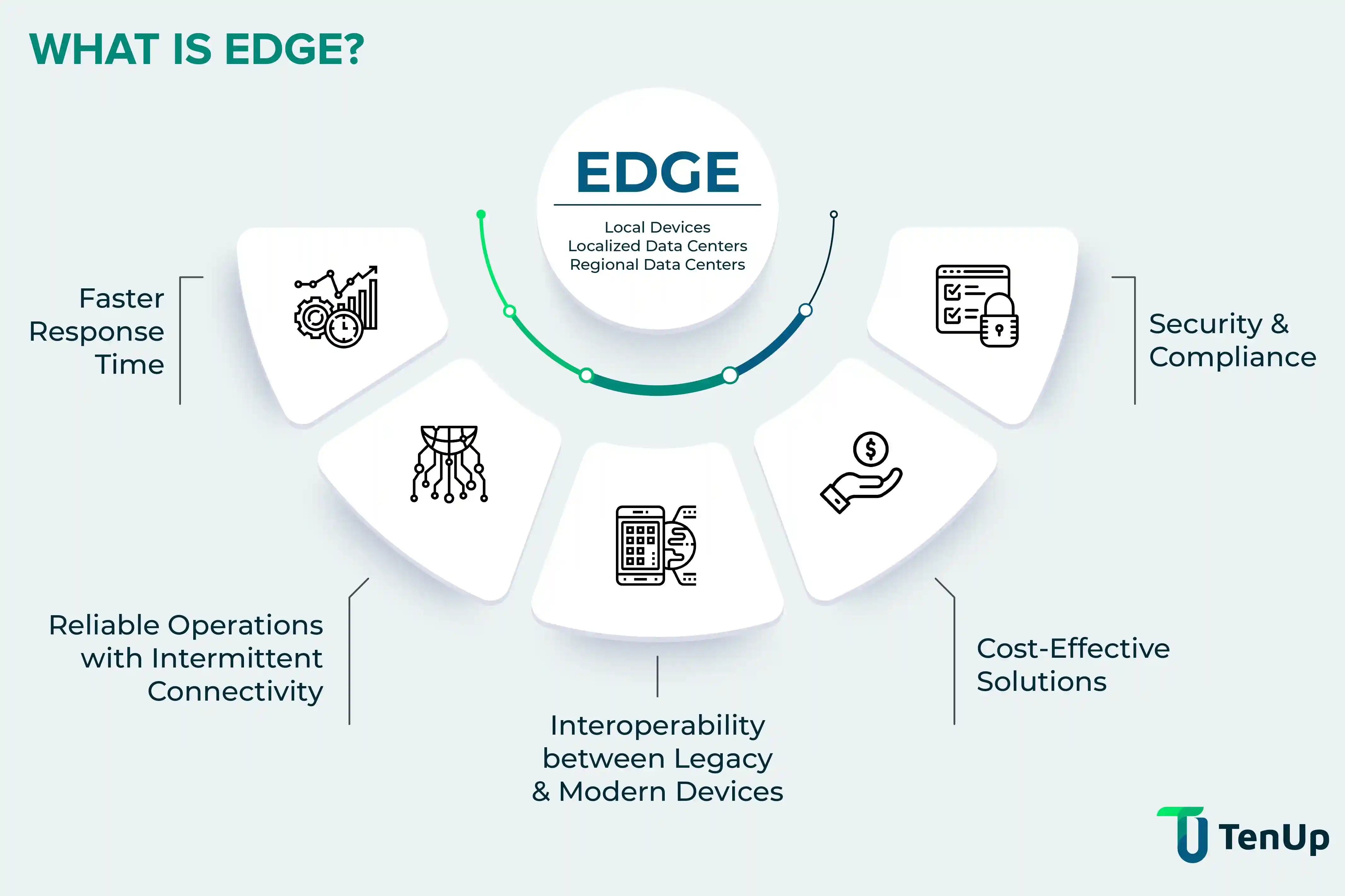

Edge computing is a distributed IT architecture in which client data is processed at the periphery of the network, as close to the originating source as possible. In simpler terms, edge computing moves part of the storage and compute resources out of the central data center and closer to the data source itself, whether that's a retail store, a factory floor, or a smart device.

Serverless edge computing takes this a step further: developers run code at the edge for real-time processing without provisioning or managing the underlying infrastructure.

As described earlier, edge computing is all about location. In traditional computing, data is produced at a client location, such as a user's computer. It then travels over the corporate LAN and across a wide area network such as the internet to a central data center, where an enterprise application stores and processes it. The results are then sent back to the client. This model worked well for a long time for most typical business applications.

However, traditional infrastructure can no longer keep up with the growing number of internet-connected devices and the volume of data each one produces. Moving vast amounts of data to a central location can cause delays or disruptions, not to mention put a tremendous strain on the internet.

So architects came up with edge computing: shifting the focus from the central data center to the logical edge of the infrastructure. If you can't bring the horse to the well, bring the well to the horse. In other words, move the data center closer to the data.
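To make "bring the well to the horse" concrete, here is a minimal sketch of what an edge node might do with raw sensor data: summarize a window of readings locally and forward only the summary (plus the IDs of out-of-range sensors) to the central data center. The `Reading` type, the `summarize_window` helper, the sensor values, and the alert threshold are all hypothetical; real edge pipelines vary widely.

```python
from __future__ import annotations

import json
import statistics
from dataclasses import asdict, dataclass


@dataclass
class Reading:
    sensor_id: str
    value: float


def summarize_window(readings: list[Reading], alert_threshold: float) -> dict:
    """Reduce a window of raw readings to a compact summary (hypothetical edge-side logic)."""
    values = [r.value for r in readings]
    return {
        "count": len(values),
        "mean": round(statistics.fmean(values), 2),
        "min": min(values),
        "max": max(values),
        # Only sensors above the threshold are reported individually.
        "alerts": [r.sensor_id for r in readings if r.value > alert_threshold],
    }


if __name__ == "__main__":
    # 1,000 simulated temperature readings; two of them are anomalous.
    window = [Reading(f"s{i}", 20.0 + (i % 5) * 0.5) for i in range(1000)]
    window[10].value = 95.0
    window[500].value = 97.5

    raw_bytes = len(json.dumps([asdict(r) for r in window]).encode())
    summary = summarize_window(window, alert_threshold=90.0)
    summary_bytes = len(json.dumps(summary).encode())

    print(summary)
    print(f"raw payload: {raw_bytes} bytes, forwarded summary: {summary_bytes} bytes")
```

Only the summary crosses the wide area network; the raw readings stay at the edge, which is where the bandwidth savings come from.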

The most important use of serverless edge computing is real-time data processing. Where the cloud focuses on volume and “big data”, serverless edge computing shifts the focus to real-time, immediate data.

Edge computing is really about reduction: in traffic, bandwidth, distance, energy, latency, and power. The reduction in latency is especially promising for IoT, AI, and ML applications such as real-time control of autonomous devices, remote surgery, and facial recognition. These are just a few of the areas where edge computing delivers the greatest benefit.
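A back-of-the-envelope calculation shows why distance matters for latency. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200,000 km/s, so propagation delay alone sets a floor on round-trip time. This sketch ignores queuing, processing, and routing detours (real network paths are longer than straight lines), and the distances are hypothetical.

```python
# Approximate speed of light in optical fiber (about 2/3 of c), in km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances: a distant central region vs. nearby edge locations.
    for label, km in [("central data center", 4000), ("regional edge", 300), ("on-site edge", 5)]:
        print(f"{label:>20}: >= {min_round_trip_ms(km):.2f} ms round trip")
```

For the hypothetical 4,000 km path the floor is 40 ms before any processing happens, while an on-site edge node 5 km away is bounded by 0.05 ms. No amount of server speed can recover the difference, which is why moving compute closer matters for control loops.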

The growth of edge computing is further accelerated by 5G and other faster connectivity technologies. Combined, edge computing and 5G can reduce latency to levels that were previously out of reach, opening the way to a new and exciting range of use cases.

The network edge, edge network, or edge networking is a paradigm that brings computation and data storage as close to the point of request as possible to deliver low latency and improve bandwidth efficiency.
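One simple way to picture "as close to the point of request as possible" is to choose, for each user, the edge location with the shortest great-circle distance. Production CDNs typically steer traffic with DNS-based geolocation or anycast and weigh network conditions rather than pure geography, so treat this only as an illustration. The location names and approximate coordinates in `EDGE_LOCATIONS` are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge locations with approximate (latitude, longitude) in degrees.
EDGE_LOCATIONS = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_edge(user_lat: float, user_lon: float) -> str:
    """Return the name of the edge location geographically closest to the user."""
    return min(
        EDGE_LOCATIONS,
        key=lambda name: haversine_km(user_lat, user_lon, *EDGE_LOCATIONS[name]),
    )


if __name__ == "__main__":
    print(nearest_edge(48.86, 2.35))     # a user in Paris -> frankfurt
    print(nearest_edge(-33.87, 151.21))  # a user in Sydney -> singapore
```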

Internet of Things (IoT) devices and networking infrastructure benefit significantly from proximity to the data source, which improves throughput. An edge computing network is built by decentralizing data centers and using smart objects and network gateways to deliver services. Specialized branch routers and network edge routers at the boundary of the network use dynamic or static routing over Ethernet to send and receive data.
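With static routing, an edge router forwards each packet according to a configured table, picking the most specific (longest) prefix that contains the destination address. The sketch below illustrates longest-prefix matching with Python's standard `ipaddress` module; the routing table and next-hop names are hypothetical, and real routers implement this lookup in optimized data structures or hardware rather than a linear scan.

```python
import ipaddress

# Hypothetical static routing table for an edge router: prefix -> next hop.
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "uplink-to-core",  # default route
    ipaddress.ip_network("10.0.0.0/8"): "branch-lan",
    ipaddress.ip_network("10.20.0.0/16"): "factory-floor-gateway",
    ipaddress.ip_network("10.20.5.0/24"): "local-edge-server",
}


def next_hop(destination: str) -> str:
    """Longest-prefix match over IPv4 routes: the most specific matching route wins."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]


if __name__ == "__main__":
    for dst in ["10.20.5.17", "10.20.9.1", "10.1.2.3", "8.8.8.8"]:
        print(dst, "->", next_hop(dst))
```

Traffic for the local edge subnet never leaves the site, while everything without a more specific route falls through to the default uplink.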

The main benefit of an edge computing network architecture is that it conserves resources by offloading network traffic. Amazon CloudFront, for example, delivers content to users with low latency through a global network of edge locations; at the time of writing, that network comprised 205 edge locations and 11 regional edge caches in 42 countries.
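The offloading works because edge locations cache responses: repeated requests are answered at the edge and never reach the origin. Here is a toy time-to-live (TTL) cache that illustrates the idea. `EdgeCache` and its origin-fetch callback are hypothetical and say nothing about how CloudFront is actually implemented; the injectable `clock` parameter exists only to make expiry easy to test.

```python
from __future__ import annotations

import time
from typing import Callable


class EdgeCache:
    """Toy TTL cache standing in for one edge location (hypothetical)."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, bytes]] = {}  # path -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and now < entry[0]:
            self.hits += 1  # served from the edge; the origin is not contacted
            return entry[1]
        # Miss or expired entry: fetch from the origin and cache until now + TTL.
        self.misses += 1
        body = self._fetch(path)
        self._store[path] = (now + self._ttl, body)
        return body


if __name__ == "__main__":
    origin_calls = []

    def origin(path: str) -> bytes:
        origin_calls.append(path)
        return f"content of {path}".encode()

    cache = EdgeCache(origin, ttl_seconds=60)
    for _ in range(100):
        cache.get("/index.html")
    print(cache.hits, cache.misses, len(origin_calls))  # 99 1 1
```

One hundred viewer requests translate into a single origin request, which is the traffic offloading the paragraph above describes.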

You may have heard of edge handlers in the context of edge computing. Netlify describes its Edge Handlers as a first-of-its-kind solution that brings edge computation into a single Git-based workflow, streamlining continuous delivery between frontend developers and DevOps. Before Edge Handlers, running serverless logic at the network edge typically required extensive team coordination and edge logic tied to several dependencies. With Netlify Edge Handlers, developers write simple code that is built and deployed to the network edge, bringing complex streams together into a single workflow in a common Git repository.

Edge Handlers simplify the developer experience by making the frontend workflow easy to manage, enabling personalization at the edge, and offering detailed logging, monitoring, and validation.
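To illustrate what "personalization at the edge" can mean, here is a platform-neutral sketch of a handler that redirects visitors to a localized page based on a geolocation header. It is not Netlify's Edge Handlers API. The `EdgeRequest`/`EdgeResponse` types, the `x-country` header name, and the locale mapping are hypothetical; edge platforms expose geolocation in their own ways.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mapping from an ISO country code to a localized path prefix.
LOCALE_BY_COUNTRY = {"DE": "/de", "FR": "/fr", "JP": "/ja"}


@dataclass
class EdgeRequest:
    path: str
    headers: dict[str, str] = field(default_factory=dict)


@dataclass
class EdgeResponse:
    status: int
    headers: dict[str, str] = field(default_factory=dict)


def personalize(request: EdgeRequest) -> Optional[EdgeResponse]:
    """Redirect to a localized page, or return None to pass the request through.

    Assumes the platform sets a (hypothetical) 'x-country' header from the viewer's location.
    """
    country = request.headers.get("x-country", "").upper()
    prefix = LOCALE_BY_COUNTRY.get(country)
    if prefix is None:
        return None  # no localized version; let the request continue to the origin
    if request.path == prefix or request.path.startswith(prefix + "/"):
        return None  # already on the localized page
    return EdgeResponse(status=302, headers={"location": prefix + request.path})


if __name__ == "__main__":
    print(personalize(EdgeRequest("/pricing", {"x-country": "de"})))
    print(personalize(EdgeRequest("/pricing", {"x-country": "US"})))
```

Because the decision happens at the edge, the visitor gets the redirect without a round trip to the central origin.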

This is a prime example of serverless edge computing in action.

Lambda@Edge is a feature of Amazon CloudFront that runs code closer to an application's users to improve performance and reduce latency. With Lambda@Edge, you don't need to provision or manage infrastructure in multiple locations around the world, and you pay only for the compute time you use. You can also customize the content that CloudFront delivers and configure each function's resources and execution time, within CloudFront's limits, to suit the application's performance needs.
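As an example of customizing content delivery, here is a sketch of a Python Lambda@Edge function meant for a viewer-response trigger that adds common security headers. It follows the event shape AWS documents for Lambda@Edge: the response sits under `event["Records"][0]["cf"]["response"]`, and headers are keyed by lowercase name, each holding a list of `{"key", "value"}` dicts. The specific header values are illustrative choices, and the `__main__` block uses a minimal synthetic event, not a real CloudFront payload. Check the current Lambda@Edge documentation for header restrictions before deploying.

```python
def lambda_handler(event, context):
    """Viewer-response trigger: add common security headers to every response."""
    response = event["Records"][0]["cf"]["response"]
    headers = response["headers"]

    # Illustrative values; tune them to your site's needs.
    security_headers = {
        "Strict-Transport-Security": "max-age=63072000; includeSubDomains",
        "X-Content-Type-Options": "nosniff",
        "X-Frame-Options": "DENY",
        "Referrer-Policy": "same-origin",
    }
    for name, value in security_headers.items():
        # CloudFront expects lowercase keys mapping to a list of {"key", "value"} dicts.
        headers[name.lower()] = [{"key": name, "value": value}]

    # Returning the modified response tells CloudFront to send it to the viewer.
    return response


if __name__ == "__main__":
    # Minimal synthetic event for local testing; real events carry many more fields.
    sample_event = {
        "Records": [{"cf": {"response": {
            "status": "200",
            "headers": {"content-type": [{"key": "Content-Type", "value": "text/html"}]},
        }}}]
    }
    result = lambda_handler(sample_event, None)
    for values in result["headers"].values():
        print(values[0]["key"], "=", values[0]["value"])
```

For static headers like these, CloudFront's response headers policies can achieve the same result without code; a function becomes worthwhile when the headers depend on request-time logic.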

While there are hundreds of examples and use cases of edge computing, we have chosen some popular use cases that resonate easily:

The shift from cloud computing to serverless edge computing also created a need for core technologies that support a decentralized edge topology. Edge databases meet this need, making it easier for developers to build serverless edge solutions quickly. For example, Workers KV is a highly distributed, eventually consistent key-value store that spans Cloudflare's global edge.
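"Eventually consistent" means a write is not visible everywhere at once: the location that accepted it sees it immediately, while other locations may keep serving the old value until the change propagates. The toy model below makes that concrete with a fixed propagation delay and last-writer-wins reads. `ToyEdgeKV` is hypothetical and is not the Workers KV API; the 60-second delay in the example is an assumed value chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Version:
    value: str
    written_at: float
    written_in: str  # location that accepted the write


class ToyEdgeKV:
    """Toy eventually consistent KV store replicated across edge locations (hypothetical)."""

    def __init__(self, locations: list[str], propagation_delay: float):
        self.locations = set(locations)
        self.propagation_delay = propagation_delay
        self._versions: dict[str, list[Version]] = {}

    def _check(self, location: str) -> None:
        if location not in self.locations:
            raise ValueError(f"unknown location: {location}")

    def put(self, location: str, key: str, value: str, now: float) -> None:
        self._check(location)
        self._versions.setdefault(key, []).append(Version(value, now, location))

    def get(self, location: str, key: str, now: float) -> str | None:
        self._check(location)
        # A version is visible locally at once, elsewhere only after the delay.
        visible = [
            v for v in self._versions.get(key, [])
            if v.written_in == location or now >= v.written_at + self.propagation_delay
        ]
        if not visible:
            return None
        # Last writer wins among the versions this location can see.
        return max(visible, key=lambda v: v.written_at).value


if __name__ == "__main__":
    kv = ToyEdgeKV(["london", "singapore"], propagation_delay=60.0)
    kv.put("london", "banner", "spring-sale", now=0.0)
    print(kv.get("london", "banner", now=1.0))      # spring-sale
    print(kv.get("singapore", "banner", now=1.0))   # None (not yet propagated)
    print(kv.get("singapore", "banner", now=61.0))  # spring-sale
```

This trade-off is why such stores suit read-heavy data like configuration or feature flags better than values that must be identical everywhere at every instant.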

Edge computing has evolved considerably, with aggressive adoption during the pandemic driving agility and innovation. Delivery models range from ‘build your own stack’ to business-outcome-based edge computing as a service. One emerging model gaining significant traction is serverless edge computing, which lets businesses run applications on distributed resources with minimal infrastructure management.

The biggest advantage of edge computing, including serverless models, is speed and reduced latency. In addition, businesses benefit from improved security and privacy, reduced operational costs, scalability, and reliability. While the initial aim of edge computing was to reduce bandwidth costs and latency, the rise of real-time apps that require local processing and storage is fuelling the growth of this technology, especially when combined with serverless architectures that offer scalability without the operational complexity of managing servers.

Looking to leverage edge computing for your business? We offer services to build custom cloud solutions that deliver scalable, secure, and efficient edge computing tailored to your needs. Get in touch today to explore how we can help you stay ahead with cutting-edge cloud and edge technologies.