The Role of RAG Systems in Enhancing LLM-based Generative AI

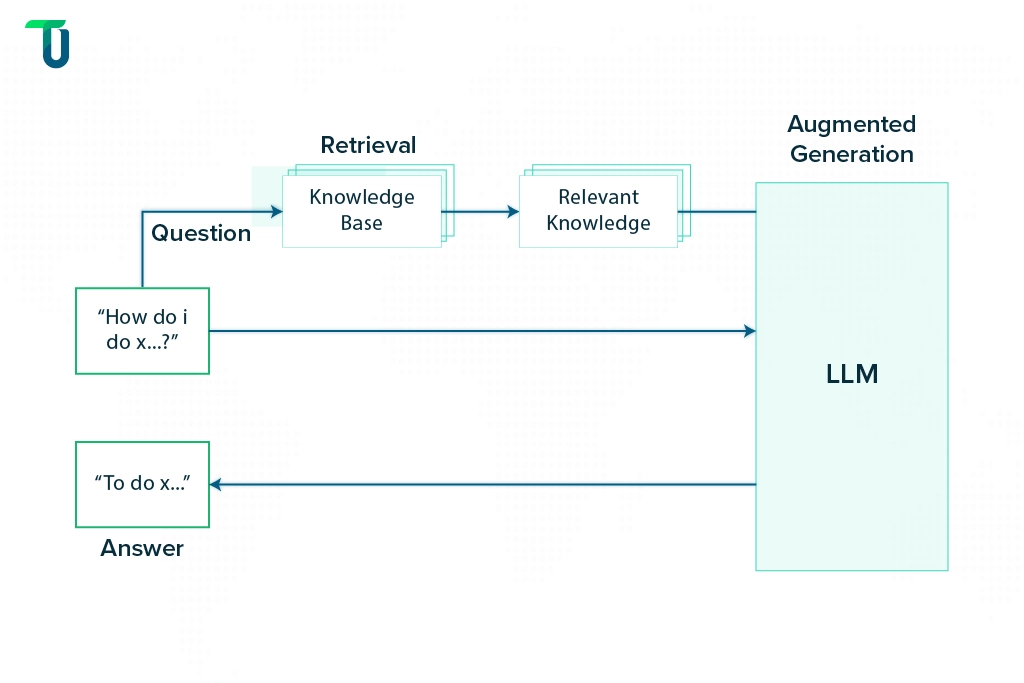

LLMs are generative models capable of providing detailed, human-like responses. However, they need relevant and up-to-date information to generate factually correct and useful responses. This is where Retrieval-Augmented Generation (RAG) systems come in.

To understand how RAG systems help LLMs improve the performance of Gen AI systems such as question-answering systems and chatbots, please read our blog titled Unlocking LLM Potential: How RAG Delivers Accurate and Adaptable AI.

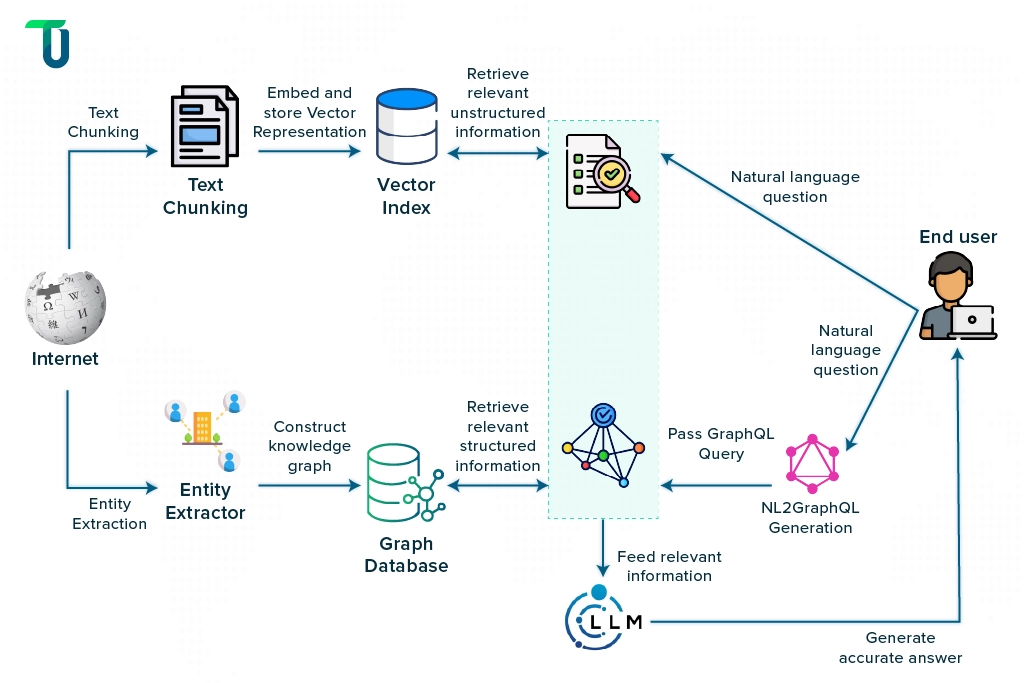

As the image above shows, when the RAG system receives a query, it retrieves relevant knowledge from the database and feeds it to the LLM, enabling the LLM to respond appropriately.

Vector Databases in RAG Systems: Understanding the Original Concept

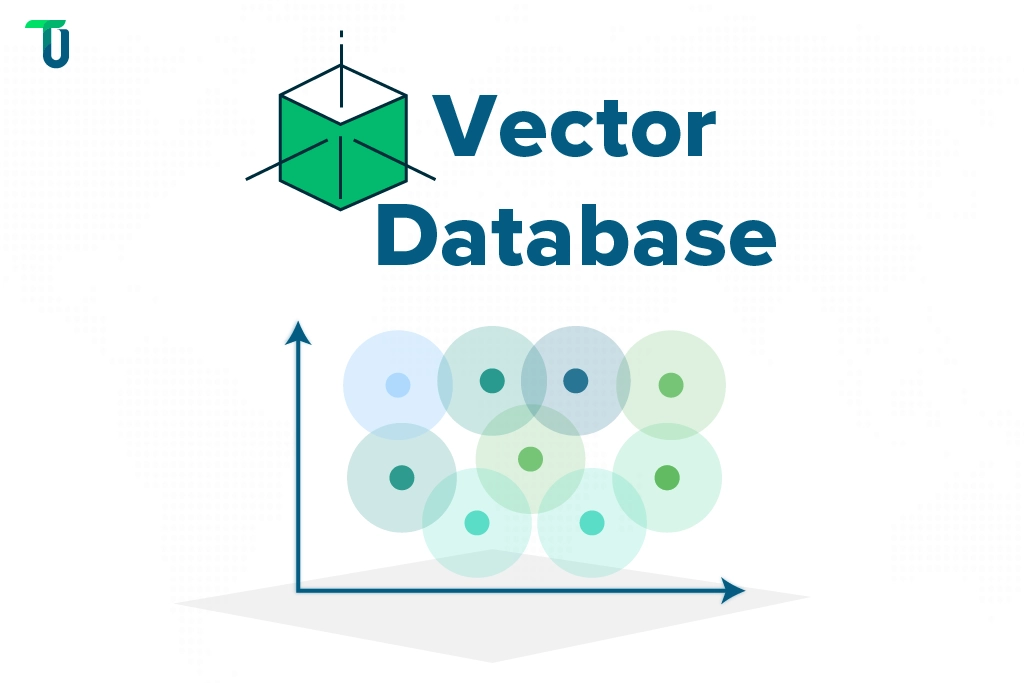

The original RAG systems, often called Baseline RAG, used vector databases. Vector databases store, index, and query high-dimensional vector embeddings (numerical representations) generated from unstructured data such as text documents, images, or audio. Before storage, the unstructured data is split into manageable chunks, and an embedding model converts each chunk into a vector embedding that captures its semantic meaning and features, as shown in the image below. This preserves important concepts and enables fast similarity searches.
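To make the indexing step more concrete, here is a minimal Python sketch of chunking, embedding, and storing a document. It is an illustration under stated assumptions, not how any particular vector database works. The hypothetical `embed` function hashes words into a fixed-size vector as a stand-in for a real embedding model, so it captures shared words rather than true semantic meaning. The hypothetical `VectorIndex` class is a plain in-memory list standing in for a vector database.

```python
import hashlib
import math
import re

DIM = 64  # assumed embedding size for this toy example


def chunk_text(text: str, max_words: int = 20) -> list[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> list[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalize.
    A stand-in for a real embedding model; it reflects shared words, not meaning."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class VectorIndex:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.vectors: list[list[float]] = []

    def add_document(self, text: str, max_words: int = 20) -> int:
        """Chunk the document, embed each chunk, store both, and return the chunk count."""
        new_chunks = chunk_text(text, max_words)
        for chunk in new_chunks:
            self.chunks.append(chunk)
            self.vectors.append(embed(chunk))
        return len(new_chunks)


index = VectorIndex()
doc = "Global warming is the long-term rise in average surface temperature. " * 3
n_chunks = index.add_document(doc, max_words=10)
print(n_chunks, index.chunks[0])
```

In a real system, `embed` would call a trained embedding model, and the vectors would be written to a vector database that builds a search index over them.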

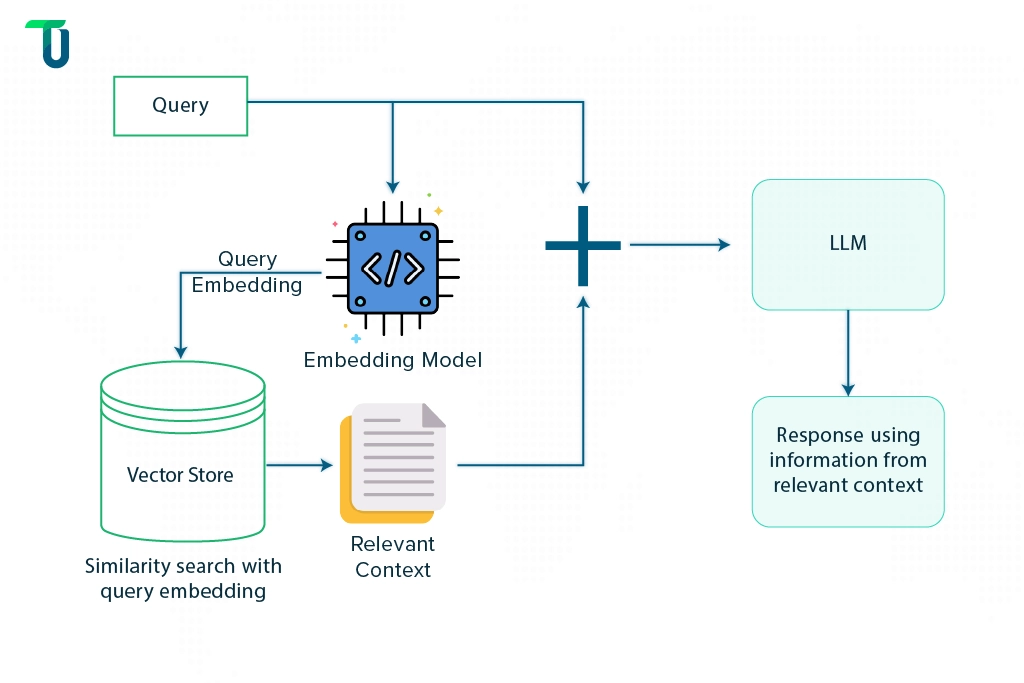

The diagram below shows the workflow of a vector-based RAG system. The RAG system converts the user query into a vector using an embedding model. It then runs a similarity search to match the query vector against the information vectors stored in the database. After identifying and retrieving the most relevant pieces of information, it feeds them to the LLM. Using the original query and the data retrieved by RAG, the LLM generates a relevant response.
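The query-side workflow can be sketched in a few lines of Python. This is a simplified illustration. It assumes a bag-of-words `embed` function as a stand-in for an embedding model and an exhaustive cosine-similarity scan in place of a vector database's index. The names `retrieve` and `build_prompt` and the sample chunks are hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Embed the query, score every stored chunk by similarity, return the top-k."""
    query_vec = embed(query)
    return sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the original query with the retrieved chunks."""
    context_lines = "\n".join(f"- {c}" for c in context)
    return f"Answer using this context:\n{context_lines}\n\nQuestion: {query}"


chunks = [
    "Greenhouse gases trap heat in the atmosphere and drive global warming.",
    "The recipe calls for two cups of flour and one egg.",
    "Climate change includes shifts in rainfall caused by global warming.",
]
query = "What causes global warming?"
top_chunks = retrieve(query, chunks, k=2)
prompt = build_prompt(query, top_chunks)
print(prompt)  # this augmented prompt is what would be sent to the LLM
```

A production vector database would typically replace the linear scan with an approximate nearest-neighbor index so that search stays fast over millions of vectors.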

Going back to our earlier example about researching ‘Global Warming’, vector-based retrieval works well for this use case because the RAG system can use similarity search to retrieve information on related terms such as Climate Change and Greenhouse Effect, giving the LLM better context.

Key Benefits of Using Vector Databases in RAG Systems

Even though this is a traditional approach to building RAG systems, it offers significant advantages:

Speed: Fast similarity search capabilities for unstructured data make it ideal for real-time applications.

Accuracy: Semantic search goes a step beyond traditional keyword search by matching on meaning and context, which helps retrieve relevant data even when the wording differs.

Scalability: It can manage high data volumes by handling billions of vectors effectively, making it suitable for applications requiring extensive data processing.

Challenges and Limitations of Vector-based RAG Systems

Here are some of the challenges associated with using only a vector database in a RAG system:

Inability to Understand Relationships and Hierarchy in Data: Vector databases store embeddings of unstructured information as independent chunks for similarity matching. They lack the explicit structure of a relational or hierarchical database, which makes it difficult to capture relationships in the data beyond vector similarity.

Complexity: For domain-specific tasks, the embedding model may need to be adjusted, for example by fine-tuning, so that its vector embeddings capture the meaning and context of the data well (called Vector Encoding). Specialized index structures, such as graph-based HNSW or inverted-file (IVF) indexes, must also be built and tuned for fast retrieval. Handling these complexities requires specialized knowledge.
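As an illustration of such an index structure, the sketch below implements a toy inverted-file (IVF) index in Python. Vectors are bucketed by their nearest centroid, and a query scans only the `nprobe` closest buckets instead of every vector. It is a simplified sketch built on several assumptions. The hypothetical `SimpleIVFIndex` picks centroids naively from the data instead of training them with k-means, and it uses plain Python lists instead of optimized storage.

```python
import math
import random


class SimpleIVFIndex:
    """Hypothetical toy IVF index: each vector is stored in the bucket of its nearest centroid."""

    def __init__(self, centroids: list[list[float]]) -> None:
        self.centroids = centroids
        self.lists: list[list[tuple[int, list[float]]]] = [[] for _ in centroids]

    def _nearest_buckets(self, vec: list[float], n: int) -> list[int]:
        """Indices of the n centroids closest to vec."""
        order = sorted(range(len(self.centroids)), key=lambda i: math.dist(vec, self.centroids[i]))
        return order[:n]

    def add(self, item_id: int, vec: list[float]) -> None:
        """Assign the vector to the bucket of its closest centroid."""
        self.lists[self._nearest_buckets(vec, 1)[0]].append((item_id, vec))

    def search(self, query: list[float], k: int = 3, nprobe: int = 1) -> list[int]:
        """Scan only the nprobe closest buckets. Faster than a full scan, but approximate:
        a true nearest neighbor sitting in an unscanned bucket will be missed."""
        candidates: list[tuple[int, list[float]]] = []
        for bucket in self._nearest_buckets(query, nprobe):
            candidates.extend(self.lists[bucket])
        candidates.sort(key=lambda item: math.dist(query, item[1]))
        return [item_id for item_id, _ in candidates[:k]]


random.seed(0)
points = [[random.random(), random.random()] for _ in range(200)]
index = SimpleIVFIndex(centroids=points[:4])  # naive choice: first 4 points as centroids
for i, p in enumerate(points):
    index.add(i, p)
print(index.search([0.5, 0.5], k=3, nprobe=2))
```

Raising `nprobe` scans more buckets, trading speed for recall. Tuning parameters like this is part of the specialized knowledge that vector indexing requires.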


Graph Databases in RAG Systems: Advancing AI Performance

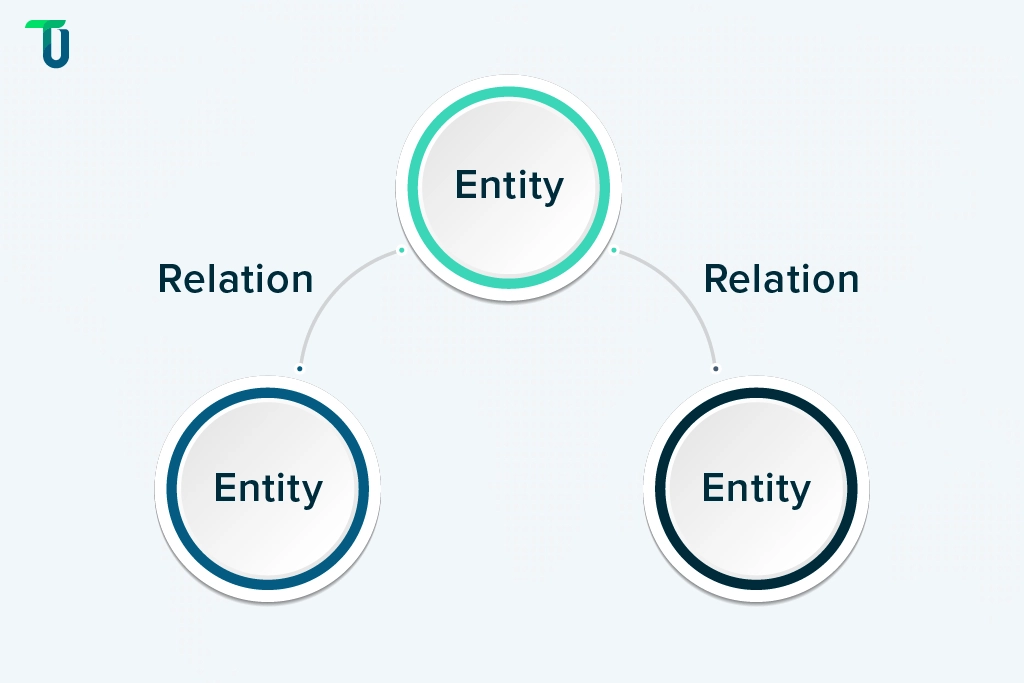

Using Knowledge Graphs in RAG systems, an approach popularly called Graph RAG or GRAG, helps overcome the challenge of understanding relationships and hierarchy in data. A knowledge graph organizes data in a semantic structure by connecting information through ‘Nodes’ and ‘Edges’. Nodes represent entities like people, places, or concepts, whereas Edges represent the relationships between those entities. This mimics how humans often reason, connecting information through relationships instead of treating it as isolated groups of words.
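A knowledge graph can be represented as a set of subject-relation-object facts, where subjects and objects are Nodes and relations are labeled Edges. The sketch below is a minimal in-memory version in Python. The `KnowledgeGraph` class and its sample facts are hypothetical and for illustration only. Real Graph RAG systems typically keep this data in a graph database and query it with that database's own query language.

```python
from collections import defaultdict
from typing import Optional


class KnowledgeGraph:
    """Hypothetical in-memory knowledge graph: entities are nodes, labeled relations are edges."""

    def __init__(self) -> None:
        # adjacency[subject] -> list of (relation, object) pairs leaving that node
        self.adjacency: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add_fact(self, subject: str, relation: str, obj: str) -> None:
        """Add a directed edge: subject --relation--> obj."""
        self.adjacency[subject].append((relation, obj))

    def neighbors(self, node: str, relation: Optional[str] = None) -> list[str]:
        """Nodes one hop away from `node`, optionally filtered by relation label."""
        return [o for r, o in self.adjacency.get(node, []) if relation is None or r == relation]

    def nodes(self) -> set[str]:
        """Every entity that appears as a subject or an object."""
        result = set(self.adjacency)
        for edges in self.adjacency.values():
            result.update(o for _, o in edges)
        return result


kg = KnowledgeGraph()
kg.add_fact("Marie Curie", "born_in", "Warsaw")     # person -> place
kg.add_fact("Marie Curie", "worked_in", "Physics")  # person -> concept
kg.add_fact("Warsaw", "located_in", "Poland")       # place -> place
print(kg.neighbors("Marie Curie"))  # → ['Warsaw', 'Physics']
```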

As the image above shows, a knowledge graph links different entities through chains of relationships, which allows more sophisticated reasoning when answering complex queries. RAG systems that use graph databases are therefore well suited to use cases where the AI system must understand the relationships between different entities.

In our previous example of buying a leather jacket online, the information retrieved from the graph database helps the recommendation system understand the relationship between materials. This enables it to recommend purchasing a leather-specific brush instead of a lint brush for cleaning the jacket, thus delivering more context-relevant results.
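The leather jacket example boils down to a two-hop lookup: from the product to its material, then from the material to the products used to clean it. Here is a minimal Python sketch, assuming a hypothetical product graph stored as a dictionary. The entities, the relation names (`made_of`, `cleaned_with`), and the `recommend_cleaning_products` function are illustrative only.

```python
# Hypothetical product graph: (subject, relation) -> list of related objects.
GRAPH = {
    ("leather jacket", "made_of"): ["leather"],
    ("leather", "cleaned_with"): ["leather brush", "leather conditioner"],
    ("wool sweater", "made_of"): ["wool"],
    ("wool", "cleaned_with"): ["lint brush"],
}


def recommend_cleaning_products(product: str) -> list[str]:
    """Two-hop lookup: product --made_of--> material --cleaned_with--> cleaning product."""
    recommendations = []
    for material in GRAPH.get((product, "made_of"), []):
        recommendations.extend(GRAPH.get((material, "cleaned_with"), []))
    return recommendations


print(recommend_cleaning_products("leather jacket"))
# → ['leather brush', 'leather conditioner']
```

Because the recommendation follows the material relationship, the lint brush linked to wool is never suggested for the leather jacket.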

How Knowledge Graphs Enhance RAG Systems: Key Benefits

The introduction of Graph RAG offers the following advantages to LLM-based systems:

Multi-hop Reasoning: A knowledge graph enables information retrieval for complex queries by following a chain of relationships. This helps the RAG system provide richer context, which improves the depth and relevance of the content generated by the LLM.

Comprehensive Knowledge Representation: Graph RAG can integrate structured data, such as a knowledge graph, with unstructured information, such as text documents, to build more accurate knowledge representations. It pulls precise facts from structured sources and detailed context from unstructured data, enabling deeper, more context-aware insights in applications like Chatbots and Virtual Assistants.

Improved Explainability: Structured knowledge graphs make it easier to trace the reasoning path the RAG system followed to retrieve relevant information. Because outputs can be tied to logical, verifiable connections in the graph, the system becomes more transparent and trustworthy.

Exploring the Limitations of Knowledge Graph-based RAG Systems

This approach to building RAG systems also has its own challenges. They include:

Performance: Retrieving information can involve navigating through many interconnected nodes and edges (graph traversal), especially in large or highly complex graphs. As a result, these systems tend to slow down more than vector-based RAG systems as data size and complexity grow.

Scalability: RAG systems using large graphs need more sophisticated algorithms and longer processing times to search for specific relationships, thus increasing computational resource requirements and, in turn, costs.

Complexity: Querying and retrieval require effective navigation of the Nodes and Edges in the Knowledge Graph. This involves traversing the graph, following relationship paths, and running algorithms such as depth-first search, breadth-first search, or shortest-path search to extract the needed information, which requires knowledge of graph theory.
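To show what such a traversal looks like in practice, here is a minimal breadth-first search in Python that finds the fewest-hop chain of facts linking two entities. It assumes the graph is a small in-memory list of hypothetical (subject, relation, object) edges rather than a graph database. Returning the chain of edges, not just the end node, also shows how a graph supports explainability: the path lists exactly which connections led to the answer.

```python
from collections import deque
from typing import Optional

Edge = tuple[str, str, str]  # (subject, relation, object)

# Hypothetical facts for illustration.
EDGES: list[Edge] = [
    ("Alice", "works_at", "Acme"),
    ("Alice", "knows", "Bob"),
    ("Acme", "headquartered_in", "Berlin"),
    ("Berlin", "located_in", "Germany"),
]


def shortest_relation_path(edges: list[Edge], start: str, goal: str) -> Optional[list[Edge]]:
    """Breadth-first search over directed, labeled edges.
    Returns the fewest-hop chain of facts from start to goal, or None if unreachable."""
    adjacency: dict[str, list[Edge]] = {}
    for edge in edges:
        adjacency.setdefault(edge[0], []).append(edge)

    came_from: dict[str, Optional[Edge]] = {start: None}  # node -> edge used to reach it
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path: list[Edge] = []
            step = came_from[node]
            while step is not None:  # walk the recorded edges back to the start
                path.append(step)
                step = came_from[step[0]]
            return path[::-1]
        for edge in adjacency.get(node, []):
            if edge[2] not in came_from:
                came_from[edge[2]] = edge
                queue.append(edge[2])
    return None


for s, r, o in shortest_relation_path(EDGES, "Alice", "Germany") or []:
    print(f"{s} --{r}--> {o}")
```

In the worst case, BFS visits every node and edge reachable from the start, which is why traversal cost grows with graph size.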

Combining Vector and Graph Databases in RAG Systems: A Hybrid Approach for Optimal AI

Building RAG systems by combining Vector and Graph databases leverages the benefits of both for complex Gen AI applications that require both similarity search and an understanding of relationships in the data. Vector and Graph databases can be combined in a RAG system in many ways. Depending on your intended use cases, you could design the RAG system’s workflow to focus on relationship-based retrieval, similarity-based retrieval, or both.

The above image shows how the RAG system retrieves information from both the Vector and Graph databases. It uses vector embeddings for content-based similarity and graph structures for additional context or relationships to provide relevant, context-rich information to the LLM.

Let’s understand this approach with a practical example: a Customer Support Knowledge Base in the technology industry. Suppose a tech company providing enterprise software uses an extensive knowledge base to help its support agents respond to customer queries. As business volume grows, handling numerous support tickets, emails, and chat queries requires automation, so the company implements a RAG system for effective information retrieval.

The Graph database stores products, services, error codes, solutions, support agents, and customers as ‘Nodes’, and relationships like “solves”, “belongs to”, and “troubleshoots” as ‘Edges’. The Vector database, meanwhile, stores information from product guides, other internal documents, support tickets, etc., as embeddings.

When it receives a question about an ‘error code’, the RAG system first retrieves a set of semantically relevant documents, such as related articles, from the Vector database. It then enriches the retrieved content by querying the Graph database for more context, which lets it surface solutions involving related components and products based on their relationships in the graph. Finally, the LLM uses the related articles and the enriched context, including solutions, to generate a response.
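This two-step flow can be sketched in Python. Everything here is hypothetical and simplified. `ARTICLES` stands in for the Vector database, and `vector_search` ranks articles by word overlap (Jaccard similarity) as a stand-in for embedding-based similarity search. `EDGES` stands in for the Graph database. The error code `E42`, `Patch 7.2`, and the product names are invented for illustration.

```python
import re

# Hypothetical knowledge-base articles (stand-in for the Vector database).
ARTICLES = {
    "KB-101": "Error E42 appears when the license server cannot be reached.",
    "KB-202": "How to reset a user password in the admin console.",
    "KB-303": "Troubleshooting error E42 after upgrading the license server.",
}

# Hypothetical graph edges (stand-in for the Graph database): (subject, relation, object).
EDGES = [
    ("Patch 7.2", "solves", "E42"),
    ("E42", "belongs to", "License Server"),
    ("License Server", "belongs to", "Enterprise Suite"),
]


def tokens(text: str) -> set[str]:
    """Lowercase word set used by the toy similarity function."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def vector_search(query: str, k: int = 2) -> list[str]:
    """Step 1 stand-in: rank articles by Jaccard word overlap with the query."""
    q = tokens(query)

    def score(doc_id: str) -> float:
        d = tokens(ARTICLES[doc_id])
        return len(q & d) / len(q | d)

    return sorted(ARTICLES, key=score, reverse=True)[:k]


def graph_context(entity: str, hops: int = 2) -> list[str]:
    """Step 2: collect facts within `hops` steps of the entity, following edges both ways."""
    frontier, seen, facts = {entity}, {entity}, []
    for _ in range(hops):
        next_frontier = set()
        for s, r, o in EDGES:
            if s in frontier or o in frontier:
                fact = f"{s} {r} {o}"
                if fact not in facts:
                    facts.append(fact)
                for node in (s, o):
                    if node not in seen:
                        seen.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return facts


def build_context(query: str) -> str:
    """Combine similar articles and related graph facts into one context for the LLM."""
    articles = [ARTICLES[doc_id] for doc_id in vector_search(query)]
    match = re.search(r"\bE\d+\b", query)  # assumes error codes look like "E42"
    facts = graph_context(match.group()) if match else []
    return "\n".join(["Articles:", *articles, "Related facts:", *facts, f"Question: {query}"])


print(build_context("Why do I get error E42 on login?"))
```

The resulting context string is what would be passed to the LLM along with the question. The LLM call itself is not shown.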

The Benefits of Hybrid RAG Systems with Vector and Graph Databases

The hybrid approach of leveraging both Vector and Graph databases in the RAG system provides the following advantages:

Highly Relevant Information Retrieval: Because it accounts for both similarity between data points and relationships between pieces of information, this approach retrieves accurate, contextually rich information.

Improved Customer Experience: Delivering useful responses and timely solutions to customer queries improves customer satisfaction.

Scalability: This approach supports rich knowledge representation in large organizations with vast amounts of data.

Versatile Use Cases: It can be applied wherever a comprehensive understanding of data similarities and relationships is needed, whether in healthcare, finance, or e-commerce.

Challenges and Considerations for Hybrid RAG Systems Using Vector and Graph Databases

Although it is touted as the future of RAG, the hybrid approach of using both Vector and Graph databases introduces its own challenges:

Increased Complexity: Two databases in one system increase the level of complexity in the architecture and querying process. Maintenance and optimization require specialized knowledge.

Higher Storage and Computational Cost: With two databases, both storage costs and the computational cost of querying them increase.

Integration: An integration layer is needed to combine two databases that store and query data in fundamentally different ways, and it must be designed carefully to keep the RAG system performing well.

Latency: While vector-based RAG provides high processing speed, adding a Knowledge Graph lookup increases response time. Depending on the size of the knowledge graph, the system may not be suitable for real-time responses.
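One common way to contain this latency is to give the graph lookup a time budget and fall back to vector-only context when it runs over. The Python sketch below assumes hypothetical `vector_lookup` and `graph_lookup` functions, with the graph delay simulated by `time.sleep`. It uses the standard library's `concurrent.futures` to run both lookups in parallel.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeoutError


def vector_lookup(query: str) -> list[str]:
    """Hypothetical fast similarity search."""
    return [f"article about {query}"]


def graph_lookup(query: str, delay_s: float) -> list[str]:
    """Hypothetical graph query; delay_s simulates a slow traversal on a large graph."""
    time.sleep(delay_s)
    return [f"graph fact about {query}"]


EXECUTOR = ThreadPoolExecutor(max_workers=4)


def retrieve_with_budget(query: str, graph_delay_s: float, budget_s: float = 0.2) -> list[str]:
    """Run both lookups in parallel; use graph results only if they arrive within budget_s."""
    vector_future = EXECUTOR.submit(vector_lookup, query)
    graph_future = EXECUTOR.submit(graph_lookup, query, graph_delay_s)
    context = vector_future.result()
    try:
        context += graph_future.result(timeout=budget_s)
    except FutureTimeoutError:
        pass  # graph too slow: fall back to vector-only context
    return context


print(retrieve_with_budget("E42", graph_delay_s=0.0))  # graph result arrives in time
print(retrieve_with_budget("E42", graph_delay_s=0.5))  # graph result is dropped
```

A timed-out graph query keeps running in its worker thread. This sketch only stops waiting for it and does not cancel it.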

Key Takeaways

We’ve seen how the RAG system is the backbone of your LLM-based Gen AI system, and how its accuracy in retrieving relevant information affects the quality of your LLM’s responses and, ultimately, your AI system. Here are the main points:

Vector-based RAG System: Though it provides speed and scalability for use cases built on similarity-based retrieval, it falls short in understanding relationships between pieces of information.

Knowledge Graph-based RAG System: While it provides contextually rich information, it reduces the speed and scalability of the RAG system.

Combining Vector and Graph databases in RAG: It retrieves more accurate, context-rich data and serves advanced use cases, but it increases complexity and costs.

So, which is the best approach for your RAG system? It depends on your use cases and available resources.

At TenUp, our skilled and experienced AI development team excels in providing personalized recommendations and building customized solutions. Please read our case study in the recruitment industry, where we developed a RAG-based Chatbot solution to optimize candidate search. You can also explore our comprehensive AI Engineering Services for more details.
