Understanding the Approach of Training an AI Model from Scratch

Sometimes also referred to as Full Training, this is the traditional approach to training AI models. It begins with designing a new model and training it end to end on large datasets until it delivers the intended outcomes. Training starts from randomly initialized (or otherwise predefined) weights. These weights are then optimized iteratively: the model's predictions on the input data are compared with the expected outputs, and an optimization algorithm such as gradient descent adjusts the weights to reduce the error.
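To make the idea of iterative optimization from random weights concrete, here is a minimal sketch in plain Python. It assumes a toy, hypothetical task (fitting y = 2x + 1) and uses full-batch gradient descent on mean squared error. Real models run the same kind of loop over millions of parameters, usually through a framework such as PyTorch or TensorFlow.

```python
import random


def train_from_scratch(xs, ys, lr=0.05, epochs=2000, seed=0):
    """Fit y ~ w*x + b starting from random weights, using gradient descent on MSE."""
    rng = random.Random(seed)
    # Random initial weights: the model starts with no knowledge of the task.
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    n = len(xs)
    for _ in range(epochs):
        # Compare current predictions with the expected outputs.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Move each weight a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [i / 10 for i in range(20)]  # inputs 0.0 .. 1.9
ys = [2 * x + 1 for x in xs]      # hypothetical target relationship
w, b = train_from_scratch(xs, ys)
print(round(w, 3), round(b, 3))
```

Because the weights start out random, everything the model ends up "knowing" comes from the training data, which is why this approach depends so heavily on having a lot of it.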

Key aspects of training an AI model from scratch include:

Data: Because the model is trained from scratch, large datasets are essential. Trained on insufficient data, the model is likely to overfit (memorize the training examples) and perform poorly on new data.

Computational Power: This requirement is high because every parameter of the model (often millions, and billions for large models) must be learned from data. It typically requires high-performance GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units).

Time Frame: Given the large volume of training data and the complexity of the process, this approach takes more time than alternatives such as transfer learning and fine-tuning.

Costs: Training AI models with this method is expensive because it requires extensive human and computational resources over a longer time frame.

Flexibility: You get complete control over the model's architecture, which you can design to meet specific use cases. This approach also gives you full flexibility to adjust the training process to the available data.

Performance: The model's performance depends on its architecture, the size and quality of its training data, and how well it is optimized.
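To give a sense of how quickly parameter counts grow, the sketch below counts the trainable weights and biases of a small fully connected network. The layer sizes are hypothetical (a 224×224 RGB image flattened into one input vector, two hidden layers, and 10 output classes). Real image models use more efficient architectures such as convolutional layers, but the arithmetic shows why training every parameter from scratch is computationally demanding.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Parameters of one fully connected layer: an n_in x n_out weight matrix plus n_out biases."""
    return n_in * n_out + n_out


def mlp_params(layer_sizes: list[int]) -> int:
    """Total trainable parameters of a multilayer perceptron with the given layer widths."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: flattened 224x224 RGB image -> 1024 -> 512 -> 10 classes.
sizes = [224 * 224 * 3, 1024, 512, 10]
total = mlp_params(sizes)
weight_bytes = total * 4  # assuming 32-bit floats (4 bytes per parameter)

print(f"{total:,} parameters, about {weight_bytes / 1e6:.0f} MB of weights")
```

Even this deliberately naive network has over 154 million parameters (roughly 619 MB of 32-bit weights), and every one of them must be learned from data when training from scratch.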

Of all the ways to train an AI model, this is the most resource-intensive but also the most flexible, and its success depends heavily on proper data preprocessing and feature engineering. It is best suited to projects where data is abundant and a custom AI model training strategy is required.

When to use the ‘Training from Scratch’ approach?

Even though this approach is resource-, time-, and cost-intensive, it is the best fit in the following scenarios:

Unique Problems: Use this approach if you are solving a highly specialized problem for which no pre-trained model is good enough.

Custom Architecture: If the solution demands that you customize the architecture design to attain desired outcomes, go for this approach.

Availability of large datasets: As discussed earlier, this approach is not practical without sufficient training data. So, if the required amount of data is available and your project requires complete flexibility, use this approach.

Let's get a better understanding with a hypothetical example. Suppose you want to build an AI solution to detect a very rare cancer from medical images. Several pre-trained models capable of analyzing medical images are available, but the visual characteristics of this cancer are so unusual that none of them has learned the specialized features needed to identify it. This calls for fully training a model on those particular features. Likewise, if the architecture of existing pre-trained models does not suit the use case, you need to build a custom model and train it from scratch.

While the traditional ‘Training from Scratch’ approach suits certain scenarios, it is often not feasible under resource, cost, and time constraints. Thanks to the rise of pre-trained models such as Claude, YOLO, and SpeechBrain, you can instead use modern approaches like Transfer Learning and Fine-tuning in your AI development projects.


Transfer Learning: The Approach of Using Pre-trained AI Models for Training

This AI model training approach takes a model pre-trained on large datasets to perform certain tasks and repurposes it to perform new but related tasks. Suppose you have a pre-trained Object Detection Model trained on general images of animals, plants, vehicles, and so on, and you want to use it to detect food items. This model has already learned to recognize objects from features such as shapes, textures, edges, and colors, which food items share. With Transfer Learning, you can apply this ability to the new domain of food item detection. This also illustrates the difference between transfer learning and pre-training: pre-training is the initial training of a large model, while transfer learning adapts that pre-trained model to a new domain.

Instead of training everything again, you adapt knowledge from a model that has already been trained on large datasets to solve a related task. This makes transfer learning a widely used technique in deep learning.

So, the purpose of Transfer Learning is to utilize the knowledge learned by the model on one task to perform another task, even if the two tasks are not the same. This approach is especially useful when you have limited training data for the new task as it leverages the model's already learned features.

Let’s understand how Transfer Learning works using the above example of food item detection:

- Pre-trained Model: The process starts with selecting a pre-trained Object Detection Model whose learned features (shapes, edges, textures, colors) are also useful for recognizing food items.

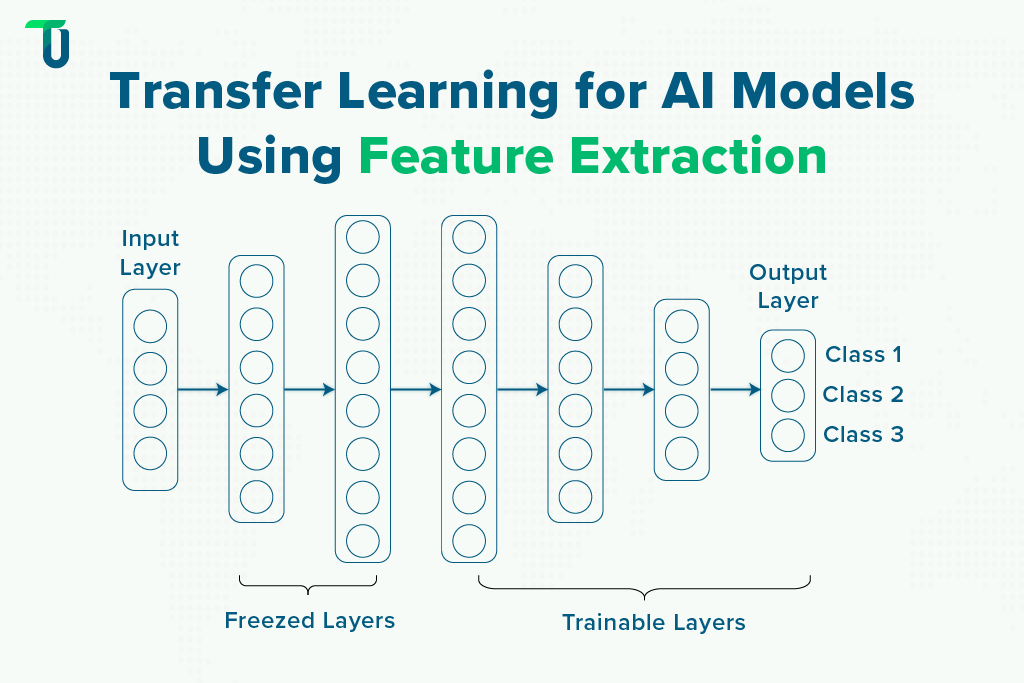

- Feature Extraction: The pre-trained model is used as a feature extractor. Its initial layers, which capture the general features needed for object detection such as shapes and textures, are frozen so that their weights do not change during training. In this way, the required features are transferred to the new task.

- Limited Training: Only the final few layers of the model are trained, on a smaller dataset of food images, so that its output focuses on food categories. This requires far fewer computational resources.

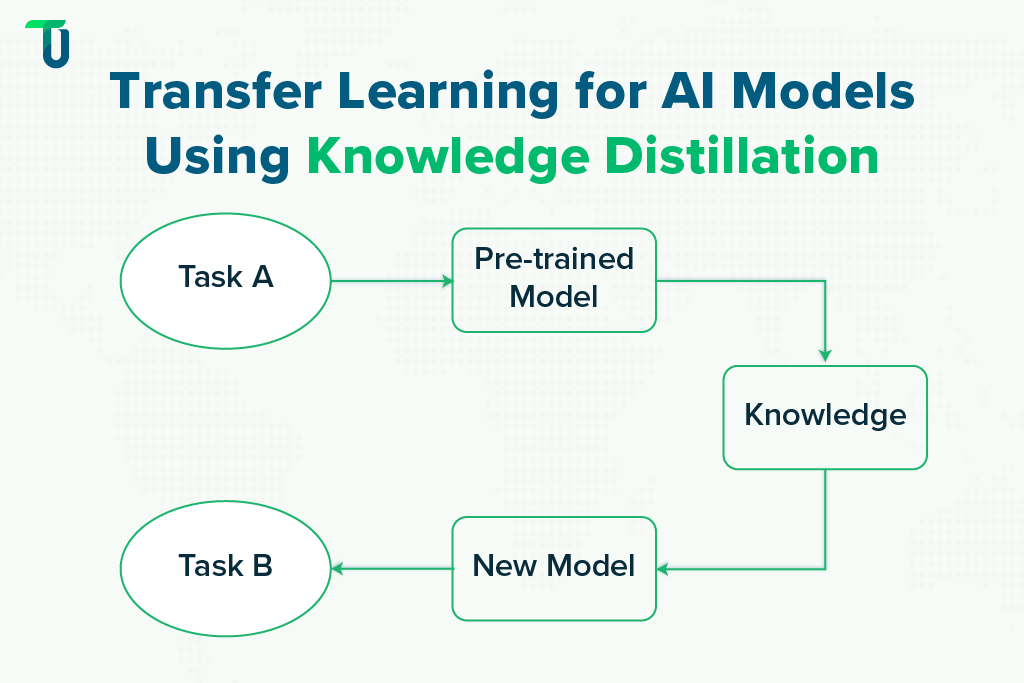
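The feature-extraction and limited-training steps above can be sketched in plain Python. In this minimal, hypothetical example, `PRETRAINED_W` stands in for frozen pre-trained layers (its values are made up, not taken from any real model), and only a new logistic-regression output layer is trained on a tiny labeled dataset. In a framework such as PyTorch, freezing is usually done by setting `requires_grad = False` on the backbone's parameters; the logic is the same.

```python
import math

# Hypothetical "pre-trained" layer: fixed weights standing in for early layers that
# already learned general features. The values are illustrative, not from a real model.
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def extract_features(x, weights):
    """Frozen feature extractor: ReLU(W x). These weights are never updated below."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, weights, lr=0.1, epochs=2000):
    """Train only a new logistic-regression output layer on top of the frozen features."""
    head_w, head_b = [0.0] * len(weights), 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x, weights)  # forward pass through frozen layers
            p = sigmoid(sum(w * v for w, v in zip(head_w, f)) + head_b)
            err = p - y  # gradient of log-loss with respect to the logit
            head_w = [w - lr * err * v for w, v in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b


def predict(x, weights, head):
    head_w, head_b = head
    f = extract_features(x, weights)
    return 1 if sigmoid(sum(w * v for w, v in zip(head_w, f)) + head_b) >= 0.5 else 0


# Tiny hypothetical dataset for the new task (label 1 = "food", 0 = "not food").
data = [([0.1, 0.2], 0), ([0.3, 0.1], 0), ([0.2, 0.4], 0),
        ([0.9, 0.8], 1), ([0.7, 0.9], 1), ([0.8, 0.6], 1)]

frozen_before = [row[:] for row in PRETRAINED_W]
head = train_head(data, PRETRAINED_W)
assert PRETRAINED_W == frozen_before  # the pre-trained layer was not modified
print([predict(x, PRETRAINED_W, head) for x, _ in data])
```

Only the head's handful of parameters change during training, which is why this step needs little data and little compute.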

Another method to perform Transfer Learning is Knowledge Distillation.

Knowledge Distillation is a widely used transfer learning technique in which knowledge is transferred from the pre-trained Object Detection Model (the large teacher model) to a new model (the small student model). Here, knowledge means the patterns the teacher has learned and its ability to perform a task such as object detection. It is usually passed on through the teacher's output predictions rather than by copying its parameters, and the student learns to mimic the teacher's behavior to perform the new task: in our example, food item detection.
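A common way to implement this is to train the student on a loss with two terms: how far its temperature-softened predictions are from the teacher's (measured with KL divergence), and ordinary cross-entropy against the true label. The sketch below computes that loss for a single example in plain Python; the logits, the temperature, and the weighting `alpha` are hypothetical values chosen for illustration. Multiplying the soft-target term by the squared temperature is a common convention that keeps its gradients comparable in size to the hard-label term.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions p (teacher) and q (student)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, true_label, temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-target term (match the teacher) and hard-label cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [4.0, 1.0, 0.2]  # hypothetical teacher logits for 3 food classes
student = [2.5, 1.2, 0.3]  # hypothetical student logits
loss = distillation_loss(student, teacher, true_label=0)
print(f"distillation loss: {loss:.4f}")
```

During training, the student's weights would be updated to minimize this loss over many examples, while the teacher stays fixed.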

The Knowledge Distillation technique is particularly useful when you need a small model that retains much of the larger model's performance, for example on devices with limited resources. Trade-offs like these are why many businesses compare transfer learning with training from scratch before starting a project.

When to use the ‘Transfer Learning’ approach for training AI models?

Limited Data: When you have only a small training dataset for the new task, and the pre-trained model was trained on a large, relevant dataset and has the features your new task requires.

Similar Tasks: Choose this approach when your new task is similar to the task your pre-trained model is proficient at performing.

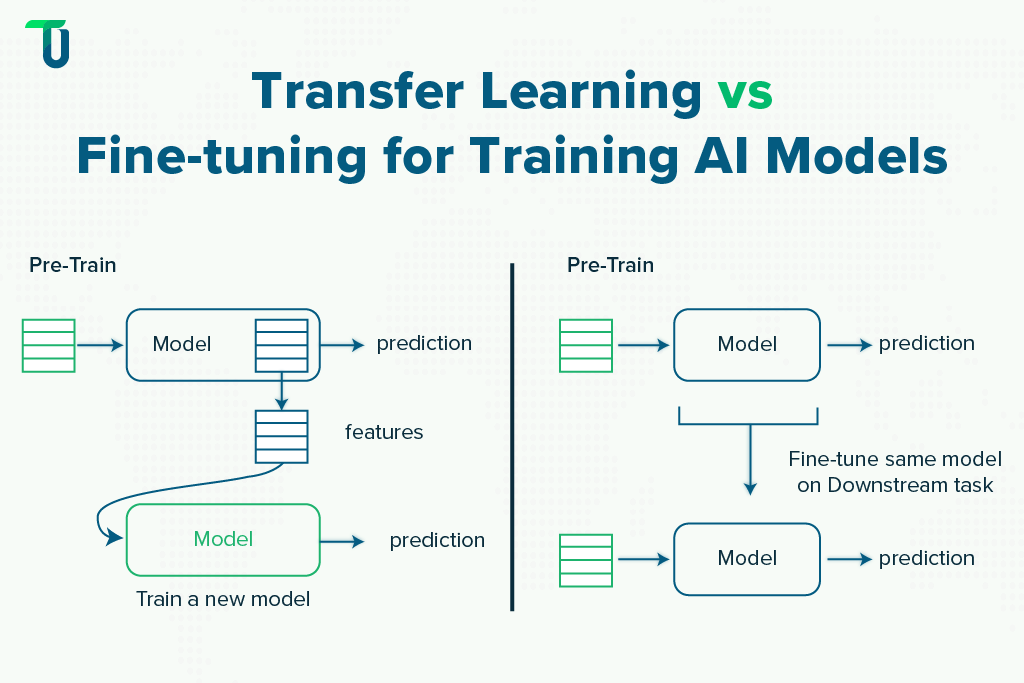

Training from Scratch vs Transfer Learning

‘Transfer Learning’ saves time and costs compared to ‘Training from Scratch’ and makes it possible to train AI models with limited resources. Because training from scratch on a small dataset leads to overfitting, ‘Transfer Learning’ significantly reduces this risk by building on a model pre-trained on large datasets. The downside of ‘Transfer Learning’ is that it works well only for similar tasks and offers limited flexibility. This comparison often sparks online debate about transfer learning vs fine-tuning, or model training vs fine-tuning more broadly. A common rule of thumb is that transfer learning is more cost-effective, while fine-tuning offers higher accuracy.


Fine-tuning AI Models: A Step Beyond Transfer Learning

Fine-tuning pre-trained models goes a step beyond Transfer Learning. It also starts from a model pre-trained on large, generic datasets. But instead of freezing the initial layers and adjusting only the final ones (as in Transfer Learning), Fine-tuning unfreezes some of those initial layers as well and adjusts them together with the final layers to achieve higher accuracy on niche tasks.
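The difference in how many parameters each approach updates can be sketched with a toy model description. The layer names and parameter counts below are hypothetical. The function marks which layers are trainable when you unfreeze only the head (transfer learning), the head plus the previously frozen blocks closest to it (partial fine-tuning), or every layer (full fine-tuning). In PyTorch, the same choice is usually expressed by toggling `requires_grad` on each layer's parameters.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    params: int
    trainable: bool = False


def build_model():
    """Hypothetical pre-trained backbone (four blocks) plus a new task-specific head."""
    return [
        Layer("block1", 1_000_000),
        Layer("block2", 2_000_000),
        Layer("block3", 4_000_000),
        Layer("block4", 8_000_000),
        Layer("head", 50_000),
    ]


def unfreeze_last(model, n_last):
    """Freeze every layer, then unfreeze the last `n_last` layers; return trainable params."""
    for i, layer in enumerate(model):
        layer.trainable = i >= len(model) - n_last
    return sum(layer.params for layer in model if layer.trainable)


model = build_model()
transfer_learning = unfreeze_last(model, 1)          # head only
partial_fine_tuning = unfreeze_last(model, 3)        # head + two blocks nearest to it
full_fine_tuning = unfreeze_last(model, len(model))  # every layer

print(f"{transfer_learning:,} / {partial_fine_tuning:,} / {full_fine_tuning:,} trainable parameters")
```

Each block you unfreeze adds its parameters to the optimization, which is where the extra data, compute, and time requirements of fine-tuning come from.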

In some cases, all the weights (parameters) of the pre-trained model are adjusted, which gives the most flexibility to align the model with the new task; this is called Full Fine-tuning. Parameter-Efficient Fine-tuning (PEFT) is a more modern and more practical alternative that updates only a small number of parameters while keeping most of the pre-trained weights frozen. Businesses that apply PEFT techniques to open-source models can cut costs while still deploying a high-performing fine-tuned model. If you're wondering what parameter-efficient fine-tuning is, read our dedicated blog post, which discusses fine-tuning pre-trained models in detail along with two PEFT techniques: LoRA and QLoRA.
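As an illustration of why PEFT is cheaper, here is a minimal sketch of the parameter arithmetic behind LoRA (Low-Rank Adaptation). Instead of updating a frozen d × k weight matrix W directly, LoRA trains two small matrices, B (d × r) and A (r × k), and adds their scaled product to W's output. The sizes d = k = 4096 and rank r = 8 are hypothetical, and plain Python lists stand in for tensors so the example runs without any library.

```python
def full_update_params(d: int, k: int) -> int:
    """Trainable parameters when a d x k weight matrix W is updated directly."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """LoRA keeps W frozen and trains B (d x r) and A (r x k) instead."""
    return d * r + r * k


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """Adapted layer output: h = W x + (alpha / r) * B (A x)."""
    base = matvec(W, x)              # frozen pre-trained path
    delta = matvec(B, matvec(A, x))  # trainable low-rank path
    scale = alpha / r
    return [h + scale * dh for h, dh in zip(base, delta)]


d = k = 4096  # hypothetical size of one projection matrix
r = 8         # hypothetical LoRA rank
full, lora = full_update_params(d, k), lora_params(d, k, r)
print(f"full: {full:,}  LoRA: {lora:,}  ({100 * lora / full:.2f}% of full)")
```

With these sizes, LoRA trains 65,536 parameters for this matrix instead of 16,777,216, about 0.4% as many. Because B is typically initialized to zeros, the adapted layer initially behaves exactly like the pre-trained one. QLoRA applies the same idea on top of a base model whose frozen weights are quantized to 4 bits to save memory.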

Transfer Learning vs Fine-Tuning

Because Fine-tuning trains more layers than Transfer Learning, it needs moderately sized datasets and more computational resources. Time and costs grow with the extent of fine-tuning, i.e., the number of parameters adjusted. The benefit of ‘Fine-tuning’ over ‘Transfer Learning’ is that, although both start from a pre-trained model chosen for its similarity to the new task, fine-tuning allows greater accuracy and performance optimization on that task. You therefore get more flexibility in aligning the pre-trained model to specialized, niche tasks. A related comparison is retrieval-augmented generation (RAG) vs fine-tuning: RAG helps when the model needs continuously updated information, while fine-tuning is better for stable, specialized performance.

Factors to Consider While Selecting Your AI Model Training Approach

We've discussed all three AI model training approaches, but which one is right for your AI development project or unique AI-based solution? Select the most suitable approach based on available resources, costs, time, and the nature of your intended solution. Here is a quick comparison of the approaches across these factors:

Model Utilization

- Training From Scratch: A new model is given end-to-end training.

- Transfer Learning: A pre-trained model is repurposed for a new task.

- Fine-tuning: A pre-trained model is trained further to improve performance on a new task.

Data Requirements

- Training From Scratch: Large datasets

- Transfer Learning: Smaller datasets

- Fine-tuning: Moderate datasets

Computational Resources

- Training From Scratch: Maximum resource requirement

- Transfer Learning: Minimum resource requirement

- Fine-tuning: More than Transfer Learning but much less than Training from Scratch

Time to Train

- Training From Scratch: Long training time needed

- Transfer Learning: Short time required

- Fine-tuning: Shorter than Training from Scratch but longer than Transfer Learning

Flexibility in Training and Architecture

- Training From Scratch: Complete Flexibility

- Transfer Learning: Limited Flexibility

- Fine-tuning: High Flexibility

Expected Performance

- Training From Scratch: High performance on intended tasks

- Transfer Learning: Good enough performance on similar tasks

- Fine-tuning: High performance on specialized, niche tasks. In fact, for companies that would otherwise retrain models, parameter-efficient fine-tuning (or a RAG-based method) often delivers more long-term value.

| | Training from Scratch | Transfer Learning | Fine-tuning |
|---|---|---|---|
| Model Used | New | Pre-trained Model | Pre-trained Model |
| Datasets Utilized | Large | Small | Moderate |
| Computing Power | High | Low | Moderate |
| Time to Train | Long | Short | Moderate |
| Flexibility | Full | Low | High |
| Performance | High | Good | High |
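The comparison can also be read as a rough decision rule. The sketch below encodes it in Python; the function name, the three-level size scale, and the exact thresholds are illustrative assumptions rather than a standard procedure.

```python
SCALE = {"small": 0, "moderate": 1, "large": 2}


def suggest_approach(data, compute, similar_task, custom_architecture, need_high_accuracy):
    """Rule-of-thumb choice between the three approaches (illustrative thresholds only).

    `data` and `compute` are "small", "moderate", or "large".
    """
    d, c = SCALE[data], SCALE[compute]
    if custom_architecture:
        # Only training from scratch gives full control over the architecture.
        return "training from scratch" if d == 2 and c == 2 else "insufficient resources"
    if not similar_task:
        # Pre-trained features may not transfer well: adapt more layers or start over.
        if d == 2 and c == 2:
            return "training from scratch"
        return "fine-tuning" if d >= 1 and c >= 1 else "insufficient resources"
    if need_high_accuracy and d >= 1 and c >= 1:
        return "fine-tuning"
    return "transfer learning"


print(suggest_approach("small", "small", similar_task=True,
                       custom_architecture=False, need_high_accuracy=False))
```

In practice, the boundaries between "small", "moderate", and "large" depend on the task and the model, so treat the output as a starting point for discussion rather than a verdict.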

Leverage AI Development Expertise to Get High Performance Models

It's not only about using the right technologies and tools: the approach your AI development team adopts to train your models also affects your solution's performance, as well as the cost and time required. Whether you are building an entry-level AI application that performs one task or a comprehensive AI-based system that automates several business processes, your development strategy and approach are critical. This is where understanding the trade-offs between methods such as transfer learning and fine-tuning, choosing the right model training approach, and building on strong data preprocessing and feature engineering ensure success.

Our comprehensive AI Engineering Services focus on analyzing the client’s requirements, creating a strategy to build a custom solution, and adopting the right approaches to turn this plan into a reality. Please check out our success stories to see how we have developed AI solutions that deliver tangible results.


Frequently asked questions

What is the difference between Training an AI model from Scratch, Transfer Learning, and Fine-tuning?

Training from Scratch builds a model from the ground up using large datasets. Transfer Learning adapts a pre-trained model for a related task by freezing some layers. Fine-tuning refines a pre-trained model for better accuracy by unfreezing and adjusting more layers.

Is Transfer Learning Different from Deep Learning?

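Transfer Learning is a technique widely used within Deep Learning, not a separate field. Deep Learning refers to training multi-layer neural networks, whether from scratch or starting from pre-trained weights, while Transfer Learning specifically repurposes pre-trained models for new tasks, saving time and resources. It's a method that makes Deep Learning more efficient.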

What are the advantages of Transfer Learning over Training from Scratch?

Transfer Learning saves time, resources, and costs as compared to Training from Scratch. By leveraging pre-trained models, it becomes ideal for tasks with limited data or when the new task is similar to the original.

How does Fine-tuning differ from Transfer Learning?

Fine-tuning adjusts more (or all) layers of a pre-trained model for better task alignment, offering more flexibility and accuracy. Transfer Learning freezes most layers and trains only the final ones. Fine-tuning needs more resources but improves performance on specialized tasks.

What are the key factors to consider when choosing an AI model training approach?

Key factors include:

- Availability of data (large, small, or moderate)

- Computational resources and budget

- Time constraints

- Similarity between the new task and the pre-trained model’s original task

- Need for flexibility in model architecture

Can I use Transfer Learning for tasks unrelated to the pre-trained model’s original task?

Transfer Learning works best when the new task is related to the original task. If the tasks are unrelated, the pre-trained model’s features may not be useful, and Training from Scratch or Fine-tuning might be more effective.

What are the risks of training an AI model from Scratch?

Risks include:

- High computational and financial costs

- Longer training times

- Overfitting if the dataset is insufficient

- Poor performance if the model architecture or optimization techniques are not well-designed

Which AI model training approach is best for small businesses with limited resources?

Transfer learning is often the best choice for small businesses due to its lower resource requirements, faster implementation, and ability to deliver good performance with limited data. Fine-tuning can be considered if higher accuracy is needed for specialized tasks.